by Admin | Jun 30, 2019 | AI-Government, News

A delegation of the Boston Global Forum (BGF), including Governor Michael Dukakis, Mr. Nguyen Anh Tuan, Professor Thomas Patterson, Professor Nazli Choucri, Professor Thomas Creely, and Mr. Allan Citryn, attended the AI World Government Conference and Expo in Washington, DC. The BGF is part of the strategic alliance for this event. BGF hosted the Summit of AI World Government on the topic “AI Governance, Big Data, and Ethics”. After Governor Michael Dukakis gave opening remarks, Prof. Thomas Patterson presented the AI World Society-G7 Summit Initiative. He focused on the AI-Government model and on AI-Citizen, which is a new concept. AI-Government affects the public through the improvement of public services, such as health care and education. This impact, however, deals only with individuals as subjects – recipients of government action. AI also has the capacity to empower individuals and make them more responsible for their actions. In this sense, AI is a mechanism for enhancing individuals as citizens rather than merely as subjects.

As we envision it, the AI World Society (AIWS) is a society where innovation, creativity and dedication are promoted and given material support, and in which individuals who contribute to society through innovation, creativity and dedication are heard, recognized and rewarded.

We also envision it as a society that increases citizens’ opportunities to influence governmental decisions and to hold government accountable for its actions. Citizen participation is not a substitute for representative institutions, but the AIWS model expands the range of decisions in which citizens are directly and materially involved. AI in this context should support the self-organization of citizens in structures of civil society and those for political action, thus contributing to a more vibrant and open society and a living democracy.

AI-Citizen would seek to nurture innovation, creativity and dedication and the ability to organize for a common purpose; develop a mechanism for rewarding innovation, creativity, dedication and organizing for public-interest purposes; develop ways for individuals to participate more fully and actively in government decisions, parliamentary and other democratic activity, and civil society; and provide ways for individuals to hold government and other actors accountable for decisions affecting them and society generally.

Prof. Patterson introduced the Social Value Reward (or Social Value Recognition, SVR) system, which stands in contrast to China’s Social Credit System. Social Value Reward is for citizens and by citizens. Mr. Nguyen Anh Tuan, CEO of BGF, emphasized that the Chinese government’s Social Credit System is anti-democratic, and that the world needs a democratic system to replace it.

The Social Value Reward (SVR) System would provide a way for citizens to track their contributions to society, as well as a way for society to acknowledge those contributions. It would allocate rewards based on citizens’ adherence to norms such as dedication and innovative, creative contributions. It stands in sharp contrast to China’s “social credit” system, which is a mechanism of state control. Being based on a blockchain system, SVR would have no government input and would not be accessible to government. Rewards would be allocated by civic-minded non-governmental organizations. The system would be used to recognize and honor citizens for their contributions to society; punitive action would be prohibited. SVR would also permit citizens to evaluate the leaders of governmental institutions, governments, non-governmental organizations, and firms for their contributions to society. SVR would accord with the European Union’s General Data Protection Regulation (GDPR) and the Ethics Guidelines for Trustworthy Artificial Intelligence (AI) issued by the European Commission’s High-Level Expert Group on Artificial Intelligence.

Professor Patterson noted that BGF is discussing The New Social Contract for the AI, Data, and Internet Society.

The BGF delegation attended AI World Government.

Governor Michael Dukakis gives opening remarks at the Summit of AI World Government.

by Editor | Jul 21, 2019 | News

Professor Alex Sandy Pentland, co-founder of the Social Contract 2020, directs the MIT Connection Science and Human Dynamics labs and previously helped to create and direct the MIT Media Lab and the Media Lab Asia in India. He is one of the most-cited scientists in the world, and Forbes recently declared him to be one of the “7 most powerful data scientists in the world”, along with the Google founders and the Chief Technical Officer of the United States. He co-led the World Economic Forum discussion in Davos that led to the EU privacy regulation GDPR, and was central in forging the transparency and accountability mechanisms in the UN’s Sustainable Development Goals. He has received numerous awards and prizes, such as the McKinsey Award from Harvard Business Review, the 40th Anniversary of the Internet award from DARPA, and the Brandeis Award for work in privacy.

Professor Alex Sandy Pentland will speak at the AI World Conference and Expo on October 23, 2019 in Boston. The Boston Global Forum and the Michael Dukakis Institute for Leadership and Innovation are Strategic Alliance Hosts of this event (AIWorld.com).

by BGF | Jun 15, 2019 | AI World Society Summit

Mr. Paul Nemitz, Principal Advisor, Directorate-General for Justice and Consumers, European Commission

Mr. Paul Nemitz presented the principles for creating Artificial Intelligence law, layer 4 of the 7-layer AI World Society model, at the AIWS-G7 Summit Initiative on April 25, 2019.

Legal concepts for AI – Layer 4 of AI World Society

“As the sun goes down, I would say that today we have had a very, very long discussion on AI. At the same time, I think we rate AI very highly: we see studies claiming that only a small percentage of those who say they do AI actually do it. Many say they work on AI, but actually it is not so much. So why, on AI, do we nevertheless have this high degree of activity by companies, governments and academia? Because on one hand there is the promise, I would say a huge promise, that this technology will solve many problems, and on the other hand there are people describing apocalyptic risks coming from this technology. In the middle of all this are the policymakers (we have already heard today about the French vision, and I think ours is exactly the same), who have the task of maximizing the potential for society, growth, employment and the public interest, and also of minimizing the risks. I will focus a little on what can go wrong and on how ethics can contribute to preventing it. Let me say, my friends, just a word about the strategy. The European Commission has a strategy for AI and says every member state should have a strategy for AI, and I think we usually inspire each other: there is a very iterative process in Europe between the member states and the European level. The Commission presented its strategy on AI in April of last year, built on three chapters: first, industrial policy and research.
Second, the preparation of the labor market: we need people to acquire skills through lifelong learning, including the older generation, so that they are able to use AI not only as customers but as citizens who understand the risks of using this technology. So this is about skills, not only for commerce. And I think that is why there is interest here: the project here on AI is exciting because it brings this technology into the future processes of governance, the public interest and democracy. Third, ethics and law for AI. Some say it is a great disadvantage of Europe that we hold ethics debates and make laws while others develop the technology. This is in fact a discourse which I have witnessed throughout my working life in public service. It was said in relation to the internet, and it has been said in relation to other technologies, such as energy. It is a recurring discourse. There was a very deep discourse of this kind in relation to environmental protection in the nineties, with Ernst Heinrich, when the precautionary principle was written into law: it was said this would be a huge catastrophe for economic development, and the same kinds of promises were made then as are now made about AI. When we worked on the GDPR, the Commission was confronted, for example, by a study from the American Chamber of Commerce which said that if you adopt this law, it will mean minus five percent of GDP. So I would say that together with the enormous promises of technology come announcements from interested parties that if you apply the precautionary principle, there will be huge negative consequences. That is interest-driven; the empirical reality is completely different if we look back at the environmental movement and its successes.
We have seen that being environmentally sustainable as an enterprise also means reducing costs, and it has become a huge driver of profitability, not only for European companies but for companies worldwide, to become ecologically sustainable and therefore resource-efficient, using as few natural resources as possible. In the end, many were only convinced when they saw the greening of GE (General Electric) go around the world. Even today, not everyone accepts that you can marry, in a very productive way, climate protection and economic growth. So it is clear that we will never convince everyone, but I will say that there is a large tradition in Europe stemming from the time of the ecological movement and the anti-nuclear movement, together with the peace movement, which began already in the 70s, and there was the great book by Hans Jonas on the principle of responsibility, written in New York. Research shows that the precautionary principle, as part of the innovation process, is the secret of success and novelty, because a discipline that is an integral part of innovation ensures long-term sustainability: not only ecological, but also economic and social sustainability of the technology for business. I cannot develop the empirical evidence on this further here, but the most striking example is the greening of industry now.

Let me turn, before I come to what this means for AI, to data protection. Of course, historically speaking, Europe has a completely different outlook on personal data and the related right of the individual to informational self-determination than America. That is because Europe has a complicated history: a big part of Europe lived through communist dictatorships, Germany through the Nazi dictatorship, and in both kinds of dictatorship the aim of government was to know everything about everybody. This historical experience raises sensitivity about the collection of data, and it is this historical background which led judges and courts in Europe to create the legal concept of informational self-determination as fundamental to liberty, at a time when it was not written in any constitution. And when I say by judges and courts in Europe, you can really go through the different layers of human rights protection: the Council of Europe’s human rights convention, the judicial system under it, and the member states of this convention, which are the EU member states plus a few more countries from outside. By contrast, in comparison, right through the Constitution, …

It took us altogether six years to get this regulation, because many lobbies tried to avoid it at all costs. I would say that historically we were in a fortunate situation: we had a Vice-President of the Commission who was a conservative, what you would call a Republican, and a rapporteur in the Parliament who was, you would say, a liberal democrat, and then of course we had the revelations which showed everybody what these technologies allow today. Altogether this was a historic moment to get these instruments, I would say, and this is not speculation. But I can tell you, before the whole legislative process, it really was … And so at this moment, when we look at the American contribution and the pathways that need to branch out from it: we have all learned from America, and we are learning in the course of the discourse on AI. Because the discourse says that America develops AI mainly to make money, and on the other hand that China also develops the technology, but not only for money: as a successful communist dictatorship, to control the people. The dream of the Communist Party since 1917, so the story goes, is fulfilled through AI technology. I think this discourse has a few defects, and here in Boston one can see which. It is of course untrue to describe America as just the companies at the top of the stock market, numbers one, two, three, four, five and six: Apple, Google, Facebook, Amazon and so on. There is a lot of very, very good work and very, very good thinking in America, from which we have profited, and much of the thinking in the world on AI, and of today’s discussion, has been produced here, with the focus on making AI useful for democracy and for the public interest, not only for targeted advertising, where the great money is. That is just one example.
I would like to quote another example, which is very, very important: the work of the FTC right now. I arrived in Boston and learned that Facebook has set aside many billions of dollars for a possible fine from the FTC, because Facebook did not follow through on its promises relating to privacy. I have to say I have great respect for the work of the FTC over the years. I have worked a lot together with colleagues from there, especially under President Obama, when they had … and when I was the negotiator for the Commission on the Privacy Shield; they were very professional. And of course, the great advantage of the FTC in comparison to our European data protection authorities is that it has experience of rigorous enforcement in competition law, against infringements of the rules protecting against illegal agreements between firms, and hopefully this experience will help. Now there is also movement in California, and I would love to see this kind of rigorous enforcement of privacy taking place in Europe too. So I think we are all looking forward to the FTC doing its job under the rule of law in America, by American law, maybe also making reference to America’s commitments to Europe: Facebook undersigned these commitments relating to respecting the privacy rules of Europe, which in essence say that privacy rules providing equivalent protection will apply when European data are processed in America. And so this is potentially a chance to show that this agreement actually means something, by having the FTC sanction this non-respect of the Agreement, but it is also a chance to bring America back into the lead on privacy, as it once was, by demonstrating that it not only has rules on the books but also enforces them.
So therefore I would say there is not necessarily an opposition between Europe and America on these issues: we are struggling on both sides of the Atlantic with the same challenges for the public interest and the same problems, we can learn a lot from each other, and this learning can also take place in relation to the future of AI and to greater protection of privacy. I think, first of all, interest today is so high because AI is probably, after the Internet, the second big wave of technology where everything which is done we have to see in terms of society. Internet policy already is actually society policy, economic policy and technology policy. The Internet reaches us today through our mobile devices everywhere; it is a forum for public debate in democracy; it gives people access to information without centralized control; it is much more than something that should be left to technologists alone. If it is true, as we hear, that AI will become as pervasive as electricity, we have to look at AI in the same way as we look at the rules which we make in legislation, because AI will contain the rules according to which decisions are made: this technology, these programs, will largely take decisions. And if they do not take decisions, they will guide people, or, as some would say, nudge or manipulate them in a certain direction. So certainly it will have huge effects both on our individual freedoms and actions and on us collectively, and that is the first reason why there is so much debate about AI, alongside efficiency, the economy and the innovation processes which may arise. The second reason why the debate is so intense everywhere is that we are struggling with the new challenge of the autonomy of technology. Who will be responsible for new damages which may be caused by a program which learns and therefore goes in a direction that was not intended by its creator?
Imagine a program which creates economic, physical or environmental damage, but also immaterial damage. This question of liability has already led the European Commission to set up a special working group of experts on civil liability law, and the question which obviously arises is: should AI be subject to strict liability? In Europe, like the United States, we have very strict rules on product liability, which we apply here as well. And on all the other issues we are in a very broad thinking process, a process of interactive learning involving debates among philosophers, lawyers, scientists, sociologists, anthropologists and the technological world, mostly in an academic capacity and not as companies, but also an iterative learning process in three or four directions. One of those processes is within the Union, between the European member states, their governments and civil society, and on the other hand there is of course the global process which was so aptly described by colleagues, including the Consul General. So in this learning process the Commission has focused on ethics. We assembled a group of 52 independent experts, including business leaders (we consciously also included business representatives in this group), and they have produced two papers which have been made public, the second paper being an iteration of the first. The Commission has just adopted this paper, on the 8th of April, in a communication which endorsed the content of these ethics principles. So there is now a communication of the European Commission on the table, titled Building Trust in Human-Centric Artificial Intelligence. And the principles which we would like to see integrated from the outset in this trustworthy AI revolve around three components. First, we want AI to be trustworthy because it acts lawfully. So one could say these programs should never take an action which, if a human committed it, would break the law.
And that is not a small point in the business climate of the world we are in, where future business managers are taught about disruptive innovation, and about innovation that disrupts the law. That is probably not a concept which we can afford with AI. We have seen a lot of disrupting of the law in the Internet age: think of the billions of euros in fines imposed on Google, or the decision that Apple must pay billions of euros in taxes in Ireland. On copyright, the new rules affecting Google are just coming in, but they are doing whatever they want. So there is an issue in the digital world, in the Internet business, of disrupting the law; I would say some take it to an extreme, and that is something where we have to be careful about applying this thinking to AI as well. Yes, we want to be innovative, as innovators describe it, but not beyond the law: the development of the technology should be based on the law, which is good and necessary for society. The law is the strongest word and needs to be enforceable, but there are other things which ethics will answer. We want this technology to be robust: it needs to perform as intended and as we have said. That is not trivial either: the more complex the technology, the more issues of robustness there will be. One of the greatest scientists in AI says, and many here at Harvard and MIT would agree, that the most important thing in AI will be continuous control of the systems, because we must follow them to see how they learn, how they mutate and how they behave, to be able to learn what we have done wrong and how to correct it. This is the principle of responsibility, and one could say the principle of precaution.
Putting into the world a technology which is extremely powerful, and which learns and mutates in one way or another, means we need to follow it. That is the whole efficiency game, but we also need to monitor it, and if the risks become too great, it must be taken off the market.

So there are three basic principles: lawfulness, robustness and ethical orientation. The group, and now the European Commission, has further developed these principles into seven more operational requirements. The first is that AI should stand under human agency and human oversight, so that humans remain in control. This is not only a term of the expert group: if I can come back to the GDPR, where we have an article on automated decision making, human dignity as a human right requires that people have the possibility to say that they want a human to make a decision relating to them, and automated decision making touches everyone. Why? Because we follow a conception of the human being, out of the Bible and out of the Enlightenment, and the identity of our institutions cannot imagine human subjects becoming objects of technology, objects of a technology which controls them and decides over them. It may be someone’s vision to control people with technology, but it is not the nature of the human being, who is responsible, who acts, who has freedom and who participates in democracy. So we have decided that where the state or private entities take decisions over individuals in this automated way, individuals have the right to a human decision and the right to appeal it. Second, robustness, which of course includes safety: where you can do great harm, great efforts and investments must be made. Third, of course, privacy and data governance. And I would say that as regards data which identify human beings, the GDPR already contains a high percentage of the rules which we need for AI to work well. I would go as far as saying that it is very, very difficult to find five additional rules which one would need for AI, when it processes personal data, beyond the GDPR. Why?
Because the GDPR contains the rules on automated decision-making; it contains the right to human decision-making and the right of access to information; it contains very hard-hitting rights to information about the program which decides: which data are processed, what the logic of the algorithm is, and what the purpose and significance of the decision making are. All this you can ask to see under the GDPR, at least in Europe, and the data protection authorities have the job of making sure that this happens. The GDPR also contains very broad rights to delete or rectify data: you can ask that your data be deleted unless there is a specific legal basis, which must be a parliamentary law, saying that the data must be kept. If you say, “I don’t like Facebook anymore, I’m not going to use this social network”, then Facebook must delete your data. Before the Court of Justice there is a great case about exactly this duty. The French Data Protection Authority said at the time: you have to delete information about people worldwide, and that’s it. Google replied that it did not think it had to; it makes the information invisible in Europe, because anything more would be extraterritorial, and it wants to be able to continue showing the information to American addresses. But if you have to delete data under the law, you can’t show it anywhere. This case went up through the French courts and is now before the Court of Justice, and let’s see what the Court of Justice decides. I would say it is a dramatic case, and the case which is not yet before the courts, but which certainly will come there, may be about predictions. Yann LeCun, the French head of AI at Facebook, famously said on Twitter that Facebook is making 300 trillion predictions a day.
And all these predictions are personal data on individuals, and everybody should have the right to see the predictions about themselves and also the right to have these predictions deleted, and I am sure this will also become part of litigation. But one thing is clear: in the world of AI, predictions about us will be much more important than data collection, and people will be influenced on the basis of what is predicted about them. If this information and these tools concerning people fall under the rights of the GDPR, then people have the right to see them and know all of them, but also to ask for deletion; the right to data protection in the world of AI will be very active. So I am very convinced that the courts will take the right decisions here. And when I talk to Americans, many of them are extremely worried about these potentials, about what can be done to people by those who own data, make predictions and influence them, whether it is the government or big business doing it. And by the way, again learning from America, the best book on the market comes from a Harvard Business School professor, Shoshana Zuboff, on all this in the private sector rather than in government, and it is called The Age of Surveillance Capitalism. It is a fantastic book, which I suggest everyone read to understand the power transformations in which all of us as individuals are already living, and how we will live tomorrow if we do not build up a system serving democracy, the rule of law and fundamental rights: not only a technological system, but also a system which protects us as individuals. So the GDPR helps on this path into a world of rule by technology, but the question after the ethics principles, which I will present and then discuss especially with you, is: can we leave it at the GDPR, and what new law do we need?
But first, what are the other ethics principles which were laid down for AI? So far we have discussed human agency and oversight, robustness and safety, and privacy and data governance. Fourth, transparency. And here, very important: we all need to know, are we dealing with a human or with a machine? This is something which I would say cannot be left to voluntary ethics, where one company does it and another does not. No, this is something where we need a law which makes it mandatory. Those who build this technology frequently make machines sound like human beings. But humans must be made aware: “hello, this is a machine speaking”. This becomes extremely important when we come into the democratic environment and closer and closer to elections. Just imagine you start your telephone in the morning, you look at Facebook and Twitter, and there are masses of messages promoting one candidate, produced by machines. So for that reason, but also for reasons of transparency in contracting and for reasons of human dignity, we need to make people aware when a message comes from a machine. Fifth, diversity and non-discrimination, which was mentioned, is a huge debate in America, with AI Now in New York and across America, in Silicon Valley and so on. The scientists at New York University do great technology scrutiny. I think that America has a huge advantage over Europe because American technologists are closer to your social scientists and your lawyers, and all of them are also closer to the daily reality of technology, to the risks of technology and also to its abuse. The sixth principle, and this is where the paper fits very nicely, is societal and environmental well-being. Seventh, accountability, just briefly. And here I think of a great friend, Julia Angwin, the great journalist.
She had just started a new newsroom, The Markup, for which she received 20 million dollars from Craig Newmark, again something we can only dream of in Europe. She developed the concept of tech accountability journalism, which is absolutely necessary these days, because, let’s be honest, if we don’t have strong tech accountability journalism, many of us, including myself, will not be able to do our job. There is a problem in the technological world which goes beyond commercial interests or abuse of technology, which is simply that the technology becomes complicated: you need mediators to explain it and to help policymakers and other parts of science understand it and take a view on it. So Julia Angwin has achieved very, very great things. You remember when, at the beginning of the GDPR some six or eight years ago, the Wall Street Journal started a series of investigative articles under the title “What They Know”, about Facebook, Google and so on, and Julia Angwin in each of these series brought up stunning revelations of what they know and what they do with it. If I remember correctly, she was the one who revealed to the world that there are many websites which automatically show higher prices to visitors using an Apple computer rather than a Windows computer, on the assumption that these visitors are better paid and have more money. This is fantastic journalism, very difficult to document, and it was documented.
You know, I have seen these things, and that is great learning. Take the father who starts to get mailings for baby diapers and baby food, commercial mailings which revealed his daughter’s pregnancy, because the daughter had been looking at these things in the shops and the mail came home: the company’s automated profiling knew of the pregnancy before he did. So this is another stunning example of what Americans have given to the world … I wish her well in the present difficulties she encounters in her company. So, I wanted to discuss with you, as the last contribution to today’s conference, the question: do we need new law, or can we just manage it all with ethics? I would say we need to search for the parameters for this decision. There is a parameter which exists in American law and also in many countries in Europe, which I will call the essentiality principle. If something is essential, it needs to be dealt with by the democratic legislature, because that is what democracy is about. Democracy is not policy set by the executive or by business, with the members of Parliament, Congress and the Senate left aside. Democracy means rule by the people through their representatives, and the essentiality principle says that everything which is important in society needs to be decided there. And what is the criterion for deciding what is important?

Those criteria are, first, whenever there is an intrusion into civil liberties or economic rights. Think of the American sentence “No taxation without representation”: such an intrusion immediately raises the concern of people. Second, if there are important considerations of the good functioning of the state and of democracy.

So if these are the parameters, what new law may we need on AI, and, of course, ideally agreed globally, around the world? I also say heavy beam in public law issues and primatologist in shipping in creepiest, strongest American coordinators. Of course, nobody in Europe would accept, and nobody in America either, that we will only give ourselves rules in our democratic process if they have already been agreed internationally, because that would mean we have to wait for 20 years.

As you can see, shipping is probably the best example of a sector where the principle that rules must come only from the international level has led to huge structural underregulation of the sector. So yes, we will of course go that way; the Commission is already starting this on AI. You know, there are many multilateral diplomacy processes being put in place; this starts to take a long time, and maybe one day we will arrive at some international law rules on AI. I don’t think that this is a precondition for doing what is necessary in democracy; from time to time, things need to be settled. We decided on GDPR, and of course, in parallel, we had worked on Convention 108 of the Council of Europe, which goes beyond the European Union. For example, Canada has also signed up, and we have a number of South American countries looking at the convention on data protection. Just as the US has signed up to the Council of Europe convention on cybercrime, the US could also sign up to Convention 108 on data protection of the Council of Europe.

So the Council of Europe is a classic instrument to go beyond the European Union and create international consensus, which in the past has sometimes failed and sometimes succeeded. So yes, of course, we will seek broad consensus, and AI is at the moment in a fact-finding and discussion phase. But I would say, in the same way, we need a healthy criticism of the idea that the internet must remain undivided, because if you take that as indispensable, it means no democracy can make any rule autonomously, since that leads to fragmentation. So we have a conflict between those two principles, and GDPR clearly was the step where we decided the rules ourselves, the same way we have done this before. I don’t think that we, or others, would be ready to make lawmaking, when it is necessary in a democratic process, conditional on prior international law.

So, when we think about applying the principle of essentiality, it’s not so clear and not so easy to find new areas where it is necessary, because, as I said already, whenever AI treats personal data, we already have the General Data Protection Regulation. What about if AI doesn’t need personal data: should we extend the principles of transparency of data protection to AI working with non-personal data? I think that is a legitimate question which needs to be discussed, and I could imagine there are many who say yes, some of the principles should be extended. And you need the law not only because of the principle of essentiality; we need it also to create a level playing field, and that is of course very, very important for economic development. You want a level playing field where everybody plays by the same rules, and without regulation that’s not the case: you don’t have a level playing field. So that would be my final remark. Mark Zuckerberg, in his article of March in the Washington Post, said we need laws. We need laws on privacy, we need laws on content regulation, we need laws on portability, by the way, where you have a right to the portability of your data from one service to the other. I would say, first, there is some learning in people, in organizations, and it’s good that Silicon Valley moves on from the very irresponsible, that’s just steward that’s great sense and that’s excuse beta-2, to “we take responsibility, we are corporate citizens, we are working with democracy, not against it,” if you read the book by Shoshana Zuboff about attitudes to democracy in Silicon Valley. Remember, our business school professor has been very, very boring. So, I would say this is hopefully an element of learning, and that’s good. This may be also hazardous energy which pertains to let me change here because in the stock economy where technology moves very fast.
If you realize that when you are under constant public scrutiny to be ethical, to be good, to do the right thing, you start thinking: why always us, why not everybody? And maybe this is the argument that the rules should apply to everybody. This is also important the argument before the increasingly for those who previously I continue that from my own experience. Bobby tells me that against… So where does this leave us at the end? You want the rules to be enforced also against those who don’t want to play ball, and there we learn from America all the time, because when it comes to the enforcement of laws, America is certainly a great example, pretty tough. And if we want democracy to live, that also means Parliaments have to do the job. We want to see them acting, we want to see them taking responsibility and putting good rules in place. We don’t want Parliaments and shouldn’t ask in the public interest. I think we can be happy if Parliaments come up with laws and take responsibility and discourse which are the one ancestor. That’s an issue in democracy: we do not have enough people engaging. In democracy we have no people on the street, the issue alone, we have all bind in the hold an autocratic tendencies and so on.

Yes, we have problems with democracy, but if we have a problem with democracy, one way to revive democracy is also to say: let democracy do it. So my personal view here is very clear: the neoliberal discourse of “we don’t want laws, it can all be done through self-regulation, ethics codes and so on” is part of how the economy-driven discourse has undermined democracy.

So if you want democracy, I think you have to work with democracy, make it useful, and come up with good laws which embody the principles of ethics: where the principle of essentiality requires it, where we are convinced that we want a level playing field, and where we are therefore convinced that we want rules which are enforceable. And I think the task before us on AI is to identify those fields where this is necessary. In Europe, I can say, I don’t find much. I gave you one example where I am convinced that we need a law, and that is the transparency principle: maybe the platforms have to take responsibility so that one sees that the bot is a machine, and also on the Internet, for those who employ bots outside the platforms, we must make this transparent, just as, by the way, any newspaper which takes paid advertisement must make clear: is this a journalistic contribution, or is this PR? So we impose this burden on our press, which by the way competes with the platforms and the technology companies, often on very unfair terms, and we don’t have such a principle yet relating to the internet generally and to AI in particular. So I think in America probably a little bit more work is necessary, because you don’t have rules like GDPR, but for Europe I would say that to give a frame to AI it is possible to define a few areas, and the laws help innovation, as GDPR does.

Thank you very much for your attention!”

by Admin | May 6, 2019 | News

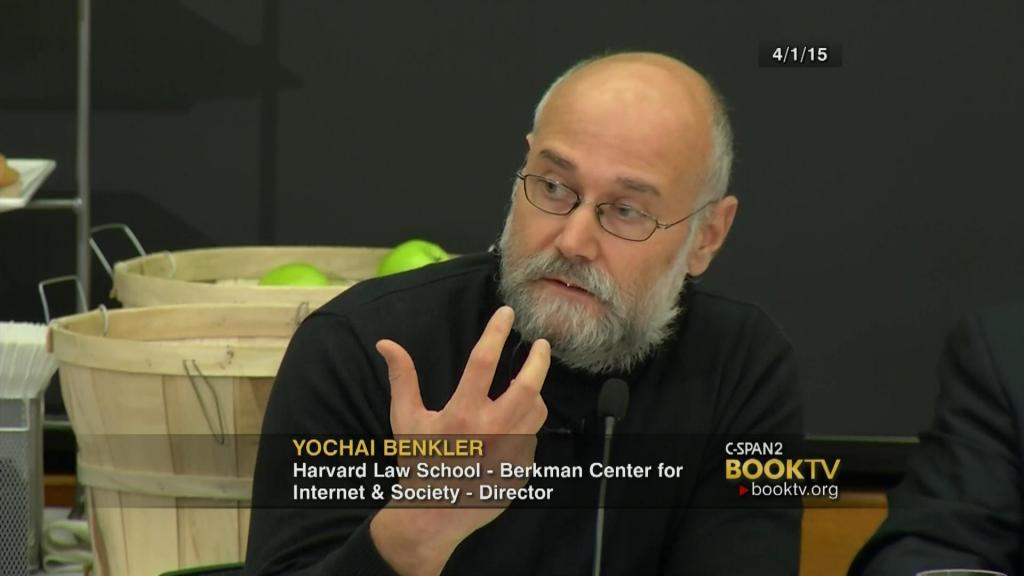

Industry has mobilized to shape the science, morality and laws of artificial intelligence. On 10 May, letters of intent are due to the US National Science Foundation (NSF) for a new funding programme for projects on Fairness in Artificial Intelligence, in collaboration with Amazon. In April, after the European Commission released the Ethics Guidelines for Trustworthy AI, an academic member of the expert group that produced them described their creation as industry-dominated “ethics washing”. In March, Google formed an AI ethics board, which was dissolved a week later amid controversy. In January, Facebook invested US$7.5 million in a centre on ethics and AI at the Technical University of Munich, Germany.

Companies’ input in shaping the future of AI is essential, but they cannot retain the power they have gained to frame research on how their systems impact society or on how we evaluate the effect morally. Governments and publicly accountable entities must support independent research, and insist that industry shares enough data for it to be kept accountable.

Algorithmic-decision systems touch every corner of our lives: medical treatments and insurance; mortgages and transportation; policing, bail and parole; newsfeeds and political and commercial advertising. Because algorithms are trained on existing data that reflect social inequalities, they risk perpetuating systemic injustice unless people consciously design countervailing measures. For example, AI systems to predict recidivism might incorporate differential policing of black and white communities, or those to rate the likely success of job candidates might build on a history of gender-biased promotions.
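The risk that a system trained on skewed history will reproduce that skew can be checked directly. A simple first test is to compare how often a system's decisions favour each group. Below is a minimal sketch that assumes invented hiring data and uses one simple metric: the lowest group selection rate divided by the highest. This is an illustrative check, not a method the author proposes. A ratio near 1.0 on its own does not show that a system is fair.

```python
from collections import Counter


def selection_rates(decisions):
    """decisions: iterable of (group, selected: bool) pairs -> {group: share selected}."""
    totals, selected = Counter(), Counter()
    for group, chosen in decisions:
        totals[group] += 1
        selected[group] += int(chosen)
    return {g: selected[g] / totals[g] for g in totals}


def selection_rate_ratio(decisions):
    """Lowest group rate divided by highest; 1.0 means equal rates across groups."""
    rates = selection_rates(decisions)
    return min(rates.values()) / max(rates.values())


# Hypothetical outcomes from a model trained on a history of biased promotions.
decisions = (
    [("men", True)] * 6 + [("men", False)] * 4
    + [("women", True)] * 3 + [("women", False)] * 7
)
print(selection_rates(decisions))       # {'men': 0.6, 'women': 0.3}
print(selection_rate_ratio(decisions))  # 0.5
```

The metric only describes outcomes. It cannot say why the rates differ. Explaining that gap requires access to the system and its training data, which is the kind of independent research access the article argues for.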

Inside an algorithmic black box, societal biases are rendered invisible and unaccountable. When designed for profit-making alone, algorithms necessarily diverge from the public interest — information asymmetries, bargaining power and externalities pervade these markets. For example, Facebook and YouTube profit from people staying on their sites and by offering advertisers technology to deliver precisely targeted messages. That could turn out to be illegal or dangerous. The US Department of Housing and Urban Development has charged Facebook with enabling discrimination in housing adverts (correlates of race and religion could be used to affect who sees a listing). YouTube’s recommendation algorithm has been implicated in stoking anti-vaccine conspiracies. I see these sorts of service as the emissions of high-tech industry: they bring profits, but the costs are borne by society. (The companies have stated that they work to ensure their products are socially responsible.)

From mobile phones to medical care, governments, academics and civil-society organizations endeavour to study how technologies affect society and to provide a check on market-driven organizations. Industry players intervene strategically in those efforts.

When the NSF lends Amazon the legitimacy of its process for a $7.6-million programme (0.03% of Amazon’s 2018 research and development spending), it undermines the role of public research as a counterweight to industry-funded research. A university abdicates its central role when it accepts funding from a firm to study the moral, political and legal implications of practices that are core to the business model of that firm. So too do governments that delegate policy frameworks to industry-dominated panels. Yes, institutions have erected some safeguards. NSF will award research grants through its normal peer-review process, without Amazon’s input, but Amazon retains the contractual, technical and organizational means to promote the projects that suit its goals. The Technical University of Munich reports that the funds from Facebook come without obligations or conditions, and that the company will not have a place on the centre’s advisory board. In my opinion, the risk and perception of undue influence is still too great, given the magnitude of this sole-source gift and how it bears directly on the donor’s interests.

Today’s leading technology companies were born at a time of high faith in market-based mechanisms. In the 1990s, regulation was scaled back, and public facilities such as railways and utilities were privatized. Initially hailed for bringing democracy and growth, pre-eminent tech companies came under suspicion after the Great Recession of the late 2000s. Germany, Australia and the United Kingdom have all passed or are planning laws to impose large fines on firms or personal liability on executives for the ills for which the companies are now blamed.

This new-found regulatory zeal might be an overreaction. (Tech anxiety without reliable research will be no better as a guide to policy than was tech utopianism.) Still, it creates incentives for industry to cooperate.

Governments should use that leverage to demand that companies share data in properly protected databases with access granted to appropriately insulated, publicly funded researchers. Industry participation in policy panels should be strictly limited.

Industry has the data and expertise necessary to design fairness into AI systems. It cannot be excluded from the processes by which we investigate which worries are real and which safeguards work, but it must not be allowed to direct them. Organizations working to ensure that AI is fair and beneficial must be publicly funded, subject to peer review and transparent to civil society. And society must demand increased public investment in independent research rather than hoping that industry funding will fill the gap without corrupting the process.

by BGF | Sep 2, 2018 | News

While critics argued that Google was stepping closer to the “business of war” because of a contract with the US Defense Department, the company has responded by banning the development of AI that could be used for weapons.

As AI becomes more and more powerful, Google’s leaders have signaled their concern by ruling out the creation of AI software that can be used for weapons. The move is seen as setting a new ethical guideline for technology companies around the world competing for leadership in self-driving cars, automated assistants, robotics and military AI.

According to the Independent, Google asserted that it will not pursue AI applications that could cause harm to international law and human rights. The Independent notes, however, that Google will continue to cooperate with governments in areas such as cybersecurity, training, veterans’ health care, search and rescue, and military recruitment.

It is unclear how the company will apply these principles in practice. Chief executive Sundar Pichai set out seven core tenets for AI applications, including being socially beneficial, being built and tested for safety, and avoiding creating or reinforcing unfair bias. The company will evaluate projects by examining how readily the technology being developed could be adapted to harmful use.

Many of Google’s web tools, such as image search and automatic translation, are built on AI, and these tools could themselves be used in ways that violate the ethical principles. For example, Google Duplex can mimic a human voice over the phone to make dinner reservations.

However, the Pentagon’s technological researchers and engineers say other contractors will still compete to help develop technology for the military and national defense. According to John Everett, Deputy Director of Information Innovation Office of the Defense Advanced Research Projects Agency, organizations are free to choose to participate in the AI exploration.

AI should be used for good purposes. To this end, MDI has built the AIWS Initiative to help establish a society that applies AI in the best and most effective ways, for the benefit of humanity.

by dickpirozzolo | Dec 17, 2017 | Global Cyber Security Day

By Dick Pirozzolo, Boston Global Forum Editorial Board

Public policy rather than gee-whiz technology enhances cybersecurity

Toomas Hendrik Ilves, the former president of Estonia, was named World Leader in Artificial Intelligence and International Cybersecurity by the Boston Global Forum and the Michael Dukakis Institute for Leadership and Innovation. The award was presented to him at the third annual Global Cybersecurity Day conference held at Harvard University on December 12th 2017.

Pres. Ilves was recognized for fostering his nation’s achievements in developing cyber-defense strategies, and for establishing Estonia’s pre-eminence as a world leader in cyberspace technology, defense and safe Internet access. Indeed, Estonia’s cybersecurity and access principles that focus on assured identity in every transaction have become a model for other nations around the world.

Pres. Ilves, who is currently affiliated with Stanford University, was also recognized for his leadership before the United Nations, calling for greater urgency in combating climate change, the need for Internet safety, and the plight of migrants and refugees, especially children.

A leader in cybersecurity

Recognizing Pres. Ilves for his contributions, Michael Dukakis, chairman of the Boston Global Forum and a former Massachusetts governor, stated, “We believe we are kindred spirits in our pursuit of a world in which we share in the concern for our fellow citizens worldwide. I also believe the Boston Global Forum and the Michael Dukakis Institute for Leadership and Innovation can play a vital role in helping you communicate your message and inspire others by participating with leading thinkers and scholars from Harvard and MIT who share your vision for a clean, safe and transparent Internet.”

During Pres. Ilves’s term in office, Estonia became a world leader in cybersecurity-related knowledge. The country now ranks highest in Europe and fifth in the world in cybersecurity, according to the 2017 Global Cybersecurity Index compiled by the International Telecommunication Union. The country also hosts the headquarters of the NATO Cooperative Cyber Defence Centre of Excellence.

Also honored for contributing to the advancement of Artificial Intelligence and Cybersecurity was Prof. John Savage who was awarded Distinguished Global Educator for Computer Science and Security on the 50th Anniversary of Brown University’s Computer Science Department.

During his keynote address, Pres. Ilves pointed out that national defense was once based on distance and time, but today, “We are passing the limits of physics in all things digital,” while laws and governmental policies have failed to keep up. He reminded the delegates that 145 million adults recently had all their financial information stolen without intervention by the US government.

“Today 4.2 billion people are online using computers that are 3.5 billion times more powerful than when online communication started out 25 years ago with 3,500 academics who were using BITNET, the 1981 precursor to the modern Internet.”

Protecting its citizens has always been the responsibility of the state and is part of our social contract. “We give up certain rights for protection, but we have been slow to get there in the digital world. When it comes to the cyber world, we are too focused on technology,” he said, rather than on policies that will enhance our safety on the Internet.

“Estonia’s cybersecurity technology is not advanced, but we are ahead on implementation,” he said, adding, “There is a huge difference between what we do and other countries; our focus was not on the gee-whiz technology,” but on implementing a system that relies on positive identity, which is the foundation of the country’s cybersecurity program. Additionally, all bureaucratic dealings are online and, with assured identity, Estonia has eliminated the need to request personal information repeatedly. Once personal information is on file, Estonian law prohibits any agency from requesting that information again. An Estonian can register a birth, obtain a driving license, apply for a building permit and register for school without having to fill out the same information repeatedly.
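The “once-only” rule described above can be sketched as a registry that stores each item of personal data once and pre-fills later forms from it. This is a hypothetical illustration. The class, method and field names, and the citizen ID format, are assumptions and do not come from Estonia's actual software or legal text.

```python
from dataclasses import dataclass, field


class AlreadyOnFileError(Exception):
    """Raised when an agency asks again for data that is already on file."""


@dataclass
class OnceOnlyRegistry:
    # Hypothetical store: citizen_id -> {field_name: value}
    records: dict = field(default_factory=dict)

    def submit(self, citizen_id: str, field_name: str, value: str) -> None:
        """Record a data item the first time it is provided."""
        person = self.records.setdefault(citizen_id, {})
        if field_name in person:
            # Mirrors the rule described above: an item on file is not requested again.
            raise AlreadyOnFileError(f"{field_name!r} already on file for {citizen_id}")
        person[field_name] = value

    def prefill(self, citizen_id: str, required_fields: list) -> tuple:
        """Split a form's fields into those pre-filled from the registry and those still missing."""
        person = self.records.get(citizen_id, {})
        prefilled = {name: person[name] for name in required_fields if name in person}
        missing = [name for name in required_fields if name not in person]
        return prefilled, missing


registry = OnceOnlyRegistry()
registry.submit("EE-0001", "name", "Mari")
registry.submit("EE-0001", "address", "Tallinn")
# A school-registration form only needs to ask for what is not already on file.
print(registry.prefill("EE-0001", ["name", "address", "school"]))
# → ({'name': 'Mari', 'address': 'Tallinn'}, ['school'])
```

In this sketch the rule is enforced in code by refusing a second submission of the same field. In Estonia, according to the article, the rule is a legal prohibition on agencies.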

This is in sharp contrast to the US. Pres. Ilves joked that even though he lives in Silicon Valley, the center of advanced technology where Facebook, Google and Tesla are within a one-mile radius, “When I went to register my daughter for school I had to bring an electric bill to prove I lived there. It struck me that everything I experienced was identical to the 1950s save for the photocopy.”

He continued, “When Estonia emerged out of the fall of the Soviet Union in 1991, we were operating with virtually no infrastructure; even the roads built during the Soviet era were for military purposes. By 1995 to 96 [however] all schools were online with computer labs so that all students could have access to computers even though they could not afford to buy them.”

By the late 1990s Estonia determined, “The fundamental problem with cyber security is not knowing who you are talking to. So we started off with a strong identity policy; everyone living in Estonia has a unique chip-based identity card using two-factor authentication with end-to-end encryption.” This is more secure than using passwords, which can be hacked.
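The two-factor idea in this paragraph combines something you have (the card) with something you know (a PIN). It can be sketched as challenge-response authentication. The article gives no technical details, so several assumptions apply. A real chip card signs with a private key that never leaves the chip. This standard-library sketch substitutes an HMAC shared secret, so read it as an analogy rather than a description of the Estonian ID card. All class and method names are hypothetical.

```python
import hashlib
import hmac
import secrets


class IdCard:
    """Hypothetical card. Factor 1, something you have: the secret stays on the card."""

    MAX_PIN_TRIES = 3

    def __init__(self, secret: bytes, pin: str):
        self._secret = secret
        self._pin = pin
        self._tries_left = self.MAX_PIN_TRIES

    def respond(self, challenge: bytes, pin: str) -> bytes:
        """Factor 2, something you know: the key is only used after a correct PIN."""
        if self._tries_left == 0:
            raise PermissionError("card blocked after too many wrong PINs")
        if not hmac.compare_digest(pin.encode(), self._pin.encode()):
            self._tries_left -= 1
            raise PermissionError("wrong PIN")
        self._tries_left = self.MAX_PIN_TRIES
        # Stand-in for a public-key signature over the server's challenge.
        return hmac.new(self._secret, challenge, hashlib.sha256).digest()


class Server:
    """Hypothetical relying party that enrolled the card's secret in advance."""

    def __init__(self, enrolled_secret: bytes):
        self._secret = enrolled_secret

    def new_challenge(self) -> bytes:
        # Fresh random nonce per login: a response only matches the challenge it answers.
        return secrets.token_bytes(32)

    def verify(self, challenge: bytes, response: bytes) -> bool:
        expected = hmac.new(self._secret, challenge, hashlib.sha256).digest()
        return hmac.compare_digest(expected, response)


secret = secrets.token_bytes(32)
card, server = IdCard(secret, pin="1234"), Server(secret)
challenge = server.new_challenge()
print(server.verify(challenge, card.respond(challenge, "1234")))  # True
```

Neither factor is enough on its own. A stolen card is useless without the PIN, and a leaked PIN is useless without the card. No reusable password ever crosses the network.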

“A state-guaranteed identity program seems to be the main stumbling block for security elsewhere. My argument is that a democratic society, responsible for the safety of the citizens, must make it mandatory to protect them.” Moreover, Estonia’s mandatory digital identity offers numerous benefits, for example, “We don’t use checks in Estonia.”

Decentralized Data Centers

“In Estonia, we could not have a centralized database for economic reasons. Every ministry has its own servers, but everything is connected to everything else including your identity.” Even if someone breaks into the system, the person “is stuck in one room and cannot get into the rest of the system.”

Known as X-Road, this decentralized system is the backbone of e-Estonia. Claim the developers, “It’s the invisible yet crucial environment that allows the nation’s various e-services databases, both in the public and private sector, to link up and operate in harmony. It allows databases to interact, making integrated e-services possible.”
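The “stuck in one room” property of this decentralized design can be sketched as separate ministry nodes joined by an exchange layer. The layer only forwards queries that have been explicitly permitted. This is a simplified, hypothetical model. The ministry and service names and the permission table are assumptions. The real X-Road's signed, encrypted and logged message exchange is not modelled here.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class MinistryNode:
    """Hypothetical ministry server: it holds its own data and exposes named services."""
    name: str
    services: Dict[str, Callable[[str], object]] = field(default_factory=dict)


class Exchange:
    """Hypothetical exchange layer linking otherwise separate ministry nodes."""

    def __init__(self):
        self.nodes: Dict[str, MinistryNode] = {}
        self.allowed = set()  # (consumer, provider, service) triples

    def register(self, node: MinistryNode) -> None:
        self.nodes[node.name] = node

    def grant(self, consumer: str, provider: str, service: str) -> None:
        self.allowed.add((consumer, provider, service))

    def query(self, consumer: str, provider: str, service: str, person_id: str):
        # Each call is checked against an explicit grant; there is no shared
        # central database, so a caller reaches only the services it was granted.
        if (consumer, provider, service) not in self.allowed:
            raise PermissionError(f"{consumer} may not call {provider}.{service}")
        return self.nodes[provider].services[service](person_id)


# Hypothetical data held separately by each ministry.
health = MinistryNode("health", {"blood_type": lambda pid: {"EE-0001": "A+"}[pid]})
education = MinistryNode("education", {"enrolled": lambda pid: pid in {"EE-0001"}})

exchange = Exchange()
exchange.register(health)
exchange.register(education)
exchange.grant("health", "education", "enrolled")

print(exchange.query("health", "education", "enrolled", "EE-0001"))  # True
```

In this model, an intruder who controls the education node can still call only the services granted to it. It gains no route into the health ministry's data.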

The system is so well integrated that Pres. Ilves claims it streamlines submitting paperwork for various needs to a point where it saves every Estonian 240 working hours a year by not having to fill out tedious forms.

Nearby Finland has joined in implementing such a system along with Panama, Mexico, and Oman.

Pres. Ilves added that blockchain technology is used to store personal information to assure the integrity of the data. “I might not like it if someone sees my bank account or blood type, but if they do, it is not as bad as changing my financial records or blood type, which cannot be done.”
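The article does not explain how blockchain protects the records. As a generic sketch only, a hash chain shows why tampering becomes detectable. Each entry's hash also covers the previous entry's hash, so changing any stored record breaks verification. The record contents and function names below are hypothetical and do not model Estonia's production system.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, record: dict) -> str:
    # Canonical JSON (sorted keys) so an unchanged record always hashes the same.
    payload = json.dumps(record, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(records: list) -> list:
    """Link records so that each entry's hash also covers the previous entry's hash."""
    chain, prev = [], GENESIS
    for record in records:
        h = entry_hash(prev, record)
        chain.append({"record": dict(record), "prev": prev, "hash": h})
        prev = h
    return chain


def verify_chain(chain: list) -> bool:
    """Recompute every hash; any edited record or broken link makes this False."""
    prev = GENESIS
    for entry in chain:
        if entry["prev"] != prev or entry_hash(prev, entry["record"]) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True


chain = build_chain([
    {"person": "EE-0001", "blood_type": "A+"},
    {"person": "EE-0002", "blood_type": "O-"},
])
print(verify_chain(chain))               # True
chain[0]["record"]["blood_type"] = "B+"  # simulated tampering
print(verify_chain(chain))               # False
```

One design caveat applies. An attacker who can also rewrite every later hash could rebuild a consistent chain. For a hash chain like this to catch tampering, the latest hash must be kept somewhere the attacker cannot alter.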

Estonia further assures the safety of its data by having an extraterritorial server in Luxembourg where the information is duplicated outside its borders. As a result of its legal and policy approach to security, “Estonia is the most cyber secure country in Europe, Russia the most secure in Eurasia and China the most in Asia. Estonia is also the most democratic.”

International Cyber Agreements

Joseph Nye, Harvard University Distinguished Service Professor, Emeritus and former Dean of Harvard’s Kennedy School of Government, explored ways nations can develop cybersecurity and cyber-attack norms, drawing parallels between cyber and nuclear technology norms, threats and international agreements. “It took two decades to develop norms for nuclear war. We’re now about two decades into cyber depending on how you count.”

Nye recalled that cybersecurity problems emerged in the mid-1990s, about two decades ago, when web browsers became widely available, sparking the “huge benefits and huge vulnerabilities” of cyberspace.

He noted that, in establishing norms to harness the destructive power of nuclear technology, “The first efforts centered around UN treaties,” though “Russia defeated UN-centered efforts after the Cuban missile crisis.”

Nye told some 40 delegates at the Global Cybersecurity Day event that the beginning of real efforts to set norms around nuclear technology came with test ban treaties, which were essentially focused on environmental concerns over detonating nuclear bombs in the atmosphere. That came in the 1960s. “It wasn’t until the 1970s that SALT (Strategic Arms Limitation Talks) produced something that began to set constraints.”

Turning to cybersecurity, global efforts to limit cyberattacks by states, “especially against critical infrastructure,” began in 2015 with a report by the UN Group of Governmental Experts that was taken to the Group of 20 (G20), the world’s most powerful economies, made up of 19 nations and the European Union. In 2017, however, the UN group failed to reach consensus, due largely to difficulties between the US and Russia. China backed off as well.

Setting cyberspace norms

Nye explained that “a norm is a collective expectation of a group of actors. It is not legally binding, and differs from international law. Norms can also be common practices that develop from collective expected behavior and rules of conduct.”

While large groups of nations have tended to achieve little in terms of establishing norms in cyberspace, bilateral agreements offer promise. “The US and China have very different views on Internet rules regarding [say] freedom of speech. For years the US corporations complained about cyber espionage being undertaken to steal American companies’ intellectual property and giving it to Chinese businesses,” Nye said, recalling that, at first there were denials but the issue became a top priority when the Edward Snowden affair let China off the hook. At that time China totally blocked IP theft.

The US further stated that it would sanction Chinese companies unless their government took a position against stolen IP. Then, with a US-China summit coming up in 2015, the US made it clear that if the meeting was to succeed, intellectual property theft had to stop because of its corrosive impact on fair trade. “Espionage is one thing, but corrupting the trade system is different than stealing other secrets.” What’s more, Internet espionage is “quick, cheap and you don’t have to worry about your spy getting caught.”

Finally, when Xi Jinping and President Obama met in September 2015, China agreed to stop cyber-enabled theft of intellectual property for commercial advantage. “While some IP spying continues on the margins, there has been a discernable reduction since the meeting,” said Nye.

The benefit of bilateral agreements, Nye emphasized, is that “they don’t stay in a box but become the kernel of the broader game of establishing wider norms,” noting that while broad multi-nation global agreements may have failed, bilateral agreements between states with very different views have succeeded. “Progress may not be made by a large global agreement such as convening 40 states. Finding ways states can negotiate concrete decisions between themselves and broadening them to encompass more nations is a much more plausible approach.”

International Law for Cyberspace

Nazli Choucri, Professor of Political Science, MIT, and Director, Global System for Sustainable Development, noted that, while it is a long way from norms to international law, it is especially important to recognize the contributions of the Tallinn [Estonia] Manual 2.0 on the International Law Applicable to Cyber Operations to current thinking about order in a world of disorder.

When reading the four-part Manual, it should come as no surprise that the state and the state system serve as anchor and entry point for the entire initiative.

Part I is on general international law and cyberspace, and begins with Chapter 1 on sovereignty.

Part II focuses on specialized regimes of international law and cyberspace.

Part III is on international peace and security and cyber activities, and

Part IV on the law of armed conflict.

Each Part is divided into Chapters (some of which are further divided into Sections), and each Chapter consists of specific Rules. It is at the level of Rules that the substantive materials are framed as explicit directives, that is, points of law.

This approach, presented in the best tradition of linear text, records the meaning of each Rule, Rule by Rule, and its connections to other Rules. A document of nearly 600 pages, the Manual is daunting for anyone who wishes to understand it in its entirety, or even in its parts. Further, the text-as-conduit may not do justice to what is clearly a major effort. It is difficult to track salient relationships, mutual dependencies, or reciprocal linkages among directives presented as Rules. For these reasons, researchers at MIT found ways of representing the content of the 600 pages of the Manual in several different visual representations derived from the text.

The purpose is to understand the architecture underlying the legal frame of the Tallinn Manual. One type of representation consists of network views of the Rules, all 154 of them, in one visual form on one page. And there are many more.

Source: Nazli Choucri and Gaurav Agarwal, “Analytics for Smart Grid Cybersecurity,” 2017 IEEE International Symposium on Technologies for Homeland Security, Waltham, MA. http://ieee-hst.org

No longer are we dealing with rather dry text form of equally dry legal narrative. Rather we are looking at colorful networked representations of how the various Rules connect to each other – and to some extent why. This brief summary does little justice to process or product. At the same time, however, it points to new ways of understanding the value of 600 pages of text.
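The basic idea behind a network view of the Rules can be sketched in a few lines: each Rule becomes a node and each cross-reference becomes an edge. The rule numbers and links below are invented placeholders, not actual cross-references from the Tallinn Manual. The MIT researchers' own method is not described in this article, so this shows only the general technique of turning textual references into a graph and ranking Rules by how connected they are.

```python
from collections import defaultdict


def build_graph(references):
    """references: iterable of (rule, referenced_rule) pairs -> undirected adjacency sets."""
    graph = defaultdict(set)
    for a, b in references:
        graph[a].add(b)
        graph[b].add(a)
    return graph


def most_connected(graph, k=3):
    """Return the k Rules with the most links, ties broken by rule number."""
    return sorted(graph, key=lambda rule: (-len(graph[rule]), rule))[:k]


# Hypothetical cross-references between numbered Rules.
refs = [(1, 2), (1, 3), (1, 4), (2, 4), (5, 1), (6, 5)]
graph = build_graph(refs)
print(most_connected(graph))  # → [1, 2, 4]
```

Counting links is the simplest way to surface the “salient relationships” the text mentions. With the full set of 154 Rules, the same structure could be passed to a graph-drawing tool to produce a one-page view.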

Cybersecurity and Executive Order

By definition, text undermines attention to feedback, delays, interconnections, cascading effects, indirect impacts and the like, all embedded deep in the text. This is true for Tallinn Manual 2.0 as it is for responses to the Presidential Executive Order (EXORD 2017).

The text form may be necessary, but it is not sufficient. In fact, it may create barriers to understanding, obscure the full nature of directives, and generate less than optimal results. If there is a summary to be made, it is this Table.

Other avenues to cyber defense

Prof. Derek Reveron of the Naval War College said, “Cybersecurity challenges the way we think about domestic and foreign boundaries. The military looks outward but with cyber threats boundaries have less meaning.”

He added that effectively combating cyber threats can be hampered by “tension between intelligence agencies and Cyber Command, which is charged with responding. Cyber Command might be able to attack ISIS in cyberspace, but then the intelligence community will lose assets. Attacks also need clearance from Congress,” thus delaying action.

“Cybersecurity measures also challenge our idea of what’s public and what’s private,” said Reveron, noting that cyberspace is monitored and run by corporate entities that are global, not national. “Companies are more important than governments” in defending cyberspace, he said.

Additionally, it is difficult to isolate malicious cyberattacks to determine their source, and privacy and freedom also come into play when deploying cyber defensive measures outside the US. “In China and Russia, for example, internet freedom is a threat to authoritarianism,” he observed, adding, “Google had to give up some of its values in China that it has in the US.”

Reveron underscored several practical cyber-defense rules of the road to consider:

- characterize the threshold for action and understand the adversaries’ thresholds for reactions

- to avoid escalation, governments, not companies, should maintain the monopoly on cyber-attacks

- critical infrastructure attacks will have a local impact, so if the power goes out in Cambridge, we need a connection between local and national responders

- within a country there must be collaboration across all entities—banks, telecom, retailers and the like

- practice comprehensive resilience to prepare municipalities and individual states for cyber attacks

- enhance the cybersecurity of developing countries by making their systems more resilient and their citizens more digitally savvy.

A recent paper on the subject Principles for a Cyber Defense Strategy by Derek S. Reveron, Jacquelyn Schneider, Michael Miner, John Savage, Allan Cytryn, and Tuan Anh Nguyen is available on the Boston Global Forum Website.

During the meeting, Tuan Nguyen introduced the launch of the Artificial Intelligence World Society, an offshoot of the Michael Dukakis Institute for Leadership and Innovation.

Global Cybersecurity Day was created to inspire the shared responsibility of the world’s citizens to protect the Internet’s safety and transparency. As part of this initiative, BGF and the Michael Dukakis Institute for Leadership and Innovation also call upon citizens of goodwill to follow BGF’s Ethics Code of Conduct for Cyber Peace and Security (ECCC).

The Boston Global Forum, a think tank with ties to Harvard University faculty, includes scholars, business leaders and journalists. BGF is chaired by former Massachusetts Gov. Michael Dukakis, a national and international civic leader and BGF’s cofounder. As an offshoot of the Boston Global Forum, the Michael Dukakis Institute for Leadership and Innovation (MDI) was born in 2015 with the mission of generating ideas, creating solutions, and deploying initiatives to solve global issues, especially focused on Cybersecurity and Artificial Intelligence.

by dickpirozzolo | Dec 15, 2017 | News

Former Estonian president, Toomas Hendrik Ilves, was named World Leader in Artificial Intelligence and International Cybersecurity by the Boston Global Forum and the Michael Dukakis Institute for Leadership and Innovation, at the third annual Global Cybersecurity Day conference held at Harvard University on December 12th 2017.

Pres. Ilves was recognized for fostering his nation’s achievements in developing cyber-defense strategies for all nations, and for establishing Estonia’s pre-eminence as a world leader in cyberspace technology, defense and safe Internet access. Indeed, Estonia’s cybersecurity and access principles, which focus on assured identity in every transaction, have become a model for other nations around the world.

Pres. Ilves, who is currently affiliated with Stanford University, was also recognized for his leadership before the United Nations, calling for greater urgency in combating climate change, the need for safety of the Internet, and the plight of migrants and refugees, especially children.

A leader in cybersecurity

Recognizing Pres. Ilves for his contributions, Michael Dukakis, chairman of the Boston Global Forum and a former Massachusetts governor, stated, “We believe we are kindred spirits in our pursuit of a world in which we share in the concern for our fellow citizens worldwide. I also believe the Boston Global Forum and the Michael Dukakis Institute for Leadership and Innovation can play a vital role in helping you to communicate your message and inspire others by participating with leading thinkers and scholars from Harvard and MIT who share your vision for a clean, safe and transparent Internet.”

During Pres. Ilves’s term as the Estonian president, his country became a world leader in cybersecurity-related knowledge. Estonia now ranks highest in Europe and fifth in the world in cybersecurity, according to the 2017 cybersecurity index, compiled by the International Telecommunication Union. The country also hosts the headquarters of the NATO Cooperative Cyber Defense Centre of Excellence.

Also honored for contributing to the advancement of Artificial Intelligence and Cybersecurity was Prof. John Savage, who was awarded Distinguished Global Educator for Computer Science and Security on the 50th Anniversary of the Brown University Computer Science Department.

During his keynote address, Pres. Ilves pointed out that national defense was once based on distance and time, but today, “We are passing the limits of physics in all things digital,” while laws and governmental policies have failed to keep up. He reminded the delegates that 145 million adults recently had all their financial information stolen without intervention by the US government.

“Today 4.2 billion people are online using computers that are 3.5 billion times more powerful than when online communication started out 25 years ago with 3,500 academics who were using BITNET, the 1981 precursor to the modern Internet.”

Protecting its citizens has always been the responsibility of the state and is part of our social contract. “We give up certain rights for protection, but we have been slow to get there in the digital world. When it comes to the cyber world, we are too focused on technology,” instead of policies that will enhance our safety on the Internet.

“Estonia’s cybersecurity technology is not advanced, but we are ahead on implementation,” he said, adding, “There is a huge difference between what we do and other countries: our focus was not on the gee-whiz technology,” but rather on implementing a system that relies on positive identity, which is the foundation of the country’s cybersecurity program. Additionally, all bureaucratic dealings are online and, with assured identity, Estonia has eliminated the need to request personal information repeatedly. Once personal information is on file, Estonian law prohibits any agency from requesting that information ever again. An Estonian can get a driving license or a building permit and register for school without having to fill out the same information repeatedly.

This is in sharp contrast to the US. Pres. Ilves joked that even though he lives in Silicon Valley, the center of advanced technology, where Facebook, Google and Tesla are within a one-mile radius, “When I went to register my daughter for school I had to bring an electric bill to prove I lived there. It struck me that everything I experienced was identical to the 1950s save for the photocopy.”
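The "ask only once" rule Pres. Ilves describes can be illustrated with a toy registry. This is a minimal sketch, assuming a single lookup keyed by a personal identifier; the class name `OnceOnlyRegistry`, the ID value and the field names are hypothetical and do not reflect how Estonian agencies actually store or exchange data.

```python
class OnceOnlyRegistry:
    """Toy model of a once-only rule: data already on file is reused, never re-requested."""

    def __init__(self):
        # personal_id -> {field_name: value}
        self._records = {}
        # How many times a citizen actually had to supply information.
        self.prompts = 0

    def get_or_collect(self, personal_id, field, ask_citizen):
        record = self._records.setdefault(personal_id, {})
        if field in record:
            # Already on file: reuse it instead of asking the citizen again.
            return record[field]
        value = ask_citizen(field)
        self.prompts += 1
        record[field] = value
        return value


registry = OnceOnlyRegistry()
answers = {"address": "Tallinn"}  # what the citizen would type in, if asked

# Three different services all need the same address.
for service in ("driving_license", "building_permit", "school_registration"):
    registry.get_or_collect("hypothetical-id-001", "address", answers.__getitem__)

print(registry.prompts)  # 1
```

Three services need the address, but the citizen is asked only once; the electric-bill anecdote corresponds to every service calling `ask_citizen` again.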

He continued: when Estonia emerged from the fall of the Soviet Union in 1991, “we were operating with virtually no infrastructure; even the roads built during the Soviet era were for military purposes. By 1995 to 96 [however] all schools were online with labs so that all students could have access to computers even though they could not afford to buy them.”

By the late 1990s Estonia had determined, “The fundamental problem with cyber security is not knowing who you are talking to. So, we started off with a strong identity policy; everyone living in Estonia has a unique chip-based identity card using two-factor authentication with end-to-end encryption.” This is more secure than using passwords, which can be hacked.

“A state-guaranteed identity program seems to be the main stumbling block for security elsewhere. My argument is that a democratic society, responsible for the safety of the citizens, must make it mandatory to protect them.” Moreover, Estonia’s mandatory digital identity offers numerous benefits, for example, “We don’t use checks in Estonia.”

Decentralized Data Centers

“In Estonia, we could not have a centralized database for economic reasons. Every ministry had its own servers, but everything is connected to everything else including your identity.” Even if someone breaks into the system, the person “is stuck in one room and cannot get into the rest of the system.”

Known as X-Road, this decentralized system is the backbone of e-Estonia. Claim the developers, “It’s the invisible yet crucial environment that allows the nation’s various e-services databases, both in the public and private sector, to link up and operate in harmony. It allows databases to interact, making integrated e-services possible.”

The system is so well integrated that Pres. Ilves claims it streamlines submitting paperwork for various needs to a point where it saves every Estonian 240 working hours a year by not having to fill out tedious forms.

Nearby Finland has joined in implementing such a system, along with Panama, Mexico, and Oman.

Pres. Ilves added that blockchain technology is used to store personal information to assure the integrity of the data. “I might not like it if someone sees my bank account or blood type, but if they do it is not as bad as changing my financial records or blood type, which cannot be done.”
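The distinction Pres. Ilves draws, that data can be seen but not silently changed, can be illustrated with a hash chain, the basic building block of blockchain-style integrity checks. This is a minimal sketch, not Estonia's actual system; the record fields and function names are hypothetical. Note that a hash chain makes tampering detectable rather than physically impossible, and it does nothing to keep the data confidential.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def record_hash(record, prev_hash):
    """Hash a record together with the previous entry's hash (SHA-256)."""
    payload = json.dumps(record, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(records):
    """Link records so each entry commits to everything before it."""
    chain = []
    prev = GENESIS
    for rec in records:
        h = record_hash(rec, prev)
        chain.append({"record": rec, "prev": prev, "hash": h})
        prev = h
    return chain


def verify_chain(chain):
    """Recompute every hash; any edited record breaks the chain."""
    prev = GENESIS
    for entry in chain:
        if entry["prev"] != prev or record_hash(entry["record"], prev) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True


chain = build_chain([
    {"person": "A", "blood_type": "O+"},
    {"person": "A", "allergy": "none"},
])
print(verify_chain(chain))  # True

chain[0]["record"]["blood_type"] = "AB-"  # someone quietly edits a stored value
print(verify_chain(chain))  # False
```

Reading `chain[0]["record"]` is unrestricted, which matches the quote's point: disclosure is bad, but undetected alteration of a record is what the integrity layer is meant to rule out.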

Estonia further assures the safety of its data by having an extraterritorial server in Luxembourg where the information is duplicated outside its borders. As a result of its legal and policy approach to security, “Estonia is the most cyber secure country in Europe, Russia the most secure in Eurasia and China the most in Asia. Estonia is also the most democratic.”

International Cyber Agreements

Joseph Nye, Harvard University Distinguished Service Professor, Emeritus, and former Dean of Harvard’s Kennedy School of Government, explored ways nations can develop cybersecurity and cyber-attack norms, often drawing parallels between cyber and nuclear technology, norms, threats and international agreements. “It took two decades to develop norms for nuclear war. We are now about two decades on cyber depending on how you count.”

Nye recalled that cybersecurity problems emerged in the mid-1990s when web browsers became widely available sparking the “huge benefits and huge vulnerabilities” of cyberspace about two decades ago.

He noted that, in establishing norms to harness the destructive power of nuclear technology, “The first efforts centered around UN treaties,” though “Russia defeated UN-centered efforts after the Cuban missile crisis.”

Nye told some 40 delegates at the World Cybersecurity Day event that the beginning of real efforts to set norms around nuclear technology came in the 1960s with test ban treaties, which were essentially focused on environmental concerns over detonating nuclear bombs in the atmosphere. “It wasn’t until the 70s that SALT (Strategic Arms Limitation Talks) produced something that began to set constraints.”

Turning to cybersecurity, he said global efforts to limit cyberattacks by states, “especially against critical infrastructure,” began in 2015 with a report taken to the UN and to the Group of 20, made up of 19 of the world’s most powerful economies and the European Union. In 2017, however, the effort failed to reach consensus, due largely to difficulties between the US and Russia, and China backed off as well.

Setting cyberspace norms

Nye explained that “a norm is a collective expectation of a group of actors. It is not legally binding, and differs from international law. Norms can also be common practices that develop from collective expected behavior and rules of conduct.”

While large groups of nations have tended to achieve little in terms of establishing norms in cyberspace, bilateral agreements offer promise. “The US and China have very different views on internet rules regarding [say] freedom of speech. For years US corporations complained about cyber espionage being undertaken to steal American companies’ intellectual property and give it to Chinese businesses,” Nye said, recalling that at first there were denials, but the issue became a top priority when the Edward Snowden affair let China off the hook. At that time China totally blocked IP theft.