by Editor | May 31, 2020 | Event Updates

On May 27, at 8:45 am EDT, the Prosperity Collaborative was officially launched at a World Bank event. Dr. Marcello Estevão, Global Director, Macroeconomics, Trade & Investment, the World Bank, gave the keynote speech about this coalition.

The World Bank’s website stated: “The Prosperity Collaborative’s inaugural online event shared strategies that can help tax bureaus manage the crisis and serve their constituents. Our first online panel will focus on how tax administrations can remain resilient and responsive to new demands during the pandemic. Our second online panel explores how innovative technology can help leaders in public finance build stronger, more effective institutions.

This event was hosted by the Prosperity Collaborative, a coalition of the World Bank, MIT, EY, the Digital Impact and Governance Initiative at New America, and the Boston Global Forum Michael Dukakis Institute for Leadership and Innovation. We bring together a diverse set of leading organizations from the public sector, private sector, academia, and civil society to develop new open source technologies and digital public goods that will transform tax systems and enhance local capacity with an initial focus on emerging economies.”

At the event, panelists discussed “Technology and Tax During and Beyond the Coronavirus Pandemic.”

Here are some quotes from this event:

“The Prosperity Collaborative will fill a gap by developing digital public goods for the use by the many.”

“This is an agenda about great people enabled by great technologies.”

“We need the private sector, civil society, and government to come together to develop technology solutions.”

Mr. Jeffrey Saviano, Global Innovation Lead – Tax, EY

“Many developed markets have a lot to learn from the IT platforms used by developing countries.”

Ms. Kate Barton, Global Vice-Chair – Tax, EY

“The Corona-crisis has shifted transfers to mobile payment systems and consumption to low taxable goods.”

“The Corona-crisis is forcing us to rethink our work, for example the role of onsite vs. offsite audits.”

“We are developing a system that will transfer transaction data to the tax authority in real time.”

Dr. Terra Saidimu, Commissioner Intelligence and Strategic Operations, Kenya Revenue Authority

“We need to find a way to tax digital services to pay for the infrastructure that the services rely on.”

“The only way to address the many challenges in modern taxation is through experimentation.”

Mr. Alex Pentland, Professor, MIT

“Tax administrations need to embrace technologies that serve everyone in their ecosystem.”

Ms. Kate Barton, Global Vice-Chair – Tax, EY

“We are seeing a convergence of technology and citizen services.”

“We can use the global crisis to show what goes wrong, if we don’t cooperate to develop the global public goods and infrastructure.”

“Tax data can provide insights into our economic levers. Why are we not using this information?”

Ms. Jacky Wright, Chief Digital Officer, Microsoft

“We need to build rewards into our tax systems. We need to make it more exciting to pay taxes.”

Mr. Tomicah Tillemann, New America

“Next time in Davos, I would like to hold leaders accountable for digital for public good.”

Ms. Jacky Wright, Chief Digital Officer, Microsoft

“I wish there was a way that I as a taxpayer could know what has been done with my money.”

Her Excellency Serey Chea, Director General, National Bank of Cambodia

by Editor | May 31, 2020 | News

The future of artificial intelligence can be either exciting or worrisome depending on who you talk to. Some believe that AI may take over humanity, while others believe that we’re far away from AI achieving anything close to human intelligence. One author recently wrote a book addressing the potentially apocalyptic perspective of AI. James Barrat, author of Our Final Invention: Artificial Intelligence and the End of the Human Era, shared insights into his book on a recent AI Today podcast. Despite the book’s title, he is surprisingly more on the fence about the issue than the title would imply.

AI is becoming an increasingly large part of our daily lives. From intelligent assistants to facial recognition, AI technology is starting to permeate all sorts of our personal interactions. Though we might not have achieved the grand vision of a singular intelligence system capable of learning any task, so-called Artificial General Intelligence (AGI), we are increasingly living in a world where the everyday person uses AI on a daily basis. With this sudden boost in AI usage, people are beginning to question just how safe this technology actually is. In many instances, AI and machine learning have the potential to provide significant benefit. However, we have reason to be concerned about AI systems that go wrong.

Barrat, however, sees a problem with managing AI. He points out that it is more than possible for the “good guys” to accidentally be “bad guys” and that AI may have unintended consequences from inappropriate application of the technology. Barrat points out that even companies with great technology will use their market strength to suppress competitive technology and perspectives. The notion of “good” in a corporate or government setting can be complex. He also points out that the unique nature of AI is that it learns from experience, and that experience might include human bias. Barrat details how the data you feed a system can alter the outcome. His example is that if you feed a system photos of doctors who all look alike, the system might infer that all doctors are white men. This can lead to problems down the line and imperfect system logic.

The original article can be found here.

Regarding AI ethics, the Michael Dukakis Institute for Leadership and Innovation (MDI) and the Artificial Intelligence World Society (AIWS.net) have developed the AIWS Ethics and Practice Index to measure the extent to which a government’s AI activities respect human values and contribute to the constructive use of AI. The Index assesses government performance on four components: transparency, regulation, promotion, and implementation.

by Editor | May 31, 2020 | News

Artificial General Intelligence (AGI) – a hypothetical machine capable of any and all of the intellectual tasks performed by humans – is considered by many to be a pipe dream. A long-standing feature of science fiction, AGI has achieved a cultural reputation of both reverence and fear, but above all an appreciation for the possibilities it presents. However, despite what the movies might suggest, there is still considerable debate around what constitutes general intelligence in humans, let alone machines.

Before diving into AGI, it’s worth establishing what has become the accepted meaning of ‘general intelligence’. The term is an evolving one. When the first electronic computers were created, many leaders in the field took their ability to do complicated sums as evidence of a higher intelligence than was previously known. What followed was the ability to best humans at strategy games like chess, and eventually speech and image recognition. It seems likely that this evolution will also apply to Artificial General Intelligence, particularly as the concept becomes increasingly abstract.

However, as it stands, there are some generally accepted factors that determine whether a human or machine is capable of general intelligence. First, it must be able to learn from a limited amount of data or experience, often referred to as few-shot learning. Second, it must be able to learn, and improve its ability to learn, across a wide variety of contexts, known as meta-learning. This feeds directly into the final factor: causal inference. This is the capability for scenario generation: to be able to plan for future events, or non-events, through an understanding of cause and effect.

The original article can be found here.

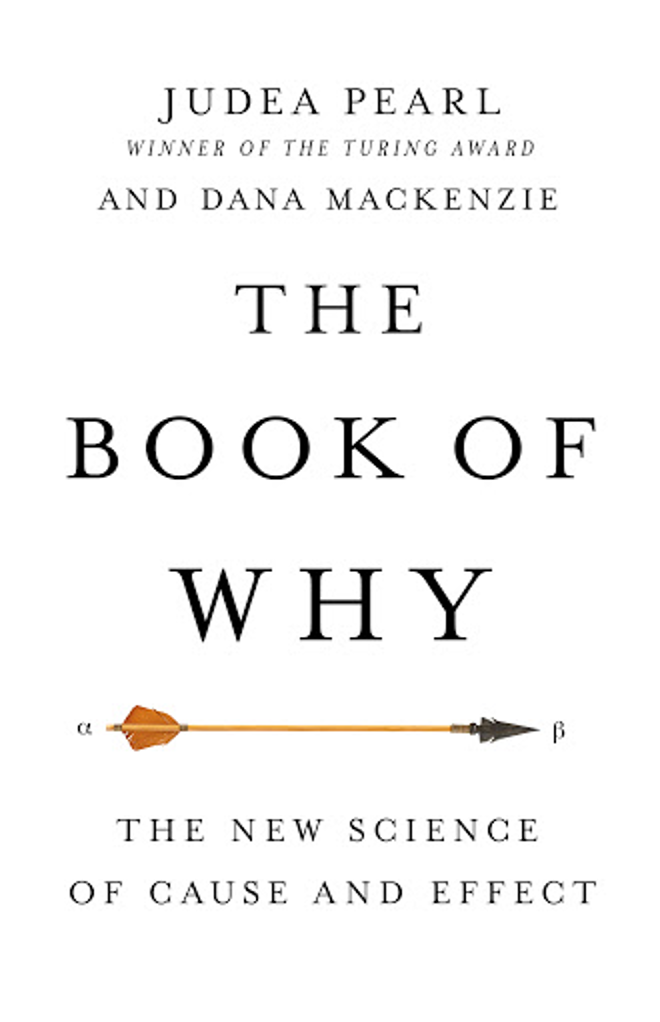

Regarding causal inference and AI, Professor Judea Pearl is a pioneer in this field and was recognized with the Turing Award in 2011. In 2020, Professor Pearl was also honored as World Leader in AI World Society (AIWS.net) by the Michael Dukakis Institute for Leadership and Innovation (MDI) and the Boston Global Forum (BGF). Professor Pearl currently also contributes to work on causal inference for AI transparency, one of the important AI World Society (AIWS.net) topics on AI ethics at MDI and BGF.

by Editor | May 25, 2020 | News

On May 20, 2018, “The Book of Why” by Professor Judea Pearl was published.

Governor Michael Dukakis said on March 20, 2020:

“‘The Book of Why: The New Science of Cause and Effect’ provides us with the new tools needed to navigate the uncharted waters of causality for students of statistics, economics, social sciences, mathematics, and, most urgently today, epidemiology. The Michael Dukakis Institute, under the umbrella of its Artificial Intelligence World Society Innovation Network (AIWS-IN), AIWS.net, is calling for artificial intelligence to be developed and deployed in ways that benefit all mankind. Professor Pearl will serve as Mentor to AIWS Innovation Network programs in support of these goals. To be sure, the AIWS.net is eager to explore and apply your Causal Models to the decision-making process by national governments as well as individual citizens.”

The Boston Global Forum and the Michael Dukakis Institute honored Professor Judea Pearl with the 2020 World Leader in AIWS Award and named him a Mentor of the AIWS Innovation Network (AIWS.net).

On May 24, 2020, Professor Judea Pearl discussed the book on Twitter:

Judea Pearl:

“Embroiled in choreographing Causal Science Centers I’ve almost forgotten that May 20 marks the 2-year anniversary of the publication of “the Book of WHY” #Bookofwhy. Dana and I are grateful to all readers who contributed to our understanding that the book has made a dent on scientific methodology.

As a token appreciation to readers of “the Book of WHY”, I am sharing the slides I used in a seminar titled: “The Silent History of Cause and Effect” given at UCLA History of Science Reading Group. Feel free to use any of these historical-philosophical snippets: http://web.cs.ucla.edu/~kaoru/hpass-ucla-dec2019-bw.pdf.”

Eoghan Flanagan:

“Prof Pearl I would suggest that the private sector would be more interested in funding causality research than “data science”. Businesses care about the do-calculus!”

Judea Pearl:

“I thought so too. So where are the Bill Gates, Jeff Bezos and Mark Zuckerbergs of today? Are visionary business leaders a thing of the past? I am not talking “causality research”; we have it. I am talking Causal Science Centers, to educate next generation Phd’s for decision making.”

Elias Bareinboim:

“I recently tweeted about Ray Dalio & Causal Inference (CI), Bill Gates appears to be aware of the “Book of Why”. I talked briefly w/@finkdI earlier this year about it. Elon Musk seems aware of the limits of AI. They may be interested in being serious about CI.”

“Insightful note by investor Ray Dalio @RayDalio on the necessity of understanding cause-effect relations to make robust decision-making & the insufficiency of machine learning:

https://www.youtube.com/watch?v=yrxYhv2O3wU&t=21m40s

We do have a language and tools to encode this understanding today.”

Judea Pearl:

“These wise words of Ray Dalio seem to be taken straight from the manifesto of the first “Causal Science Center”. Hey, Ray Dalio, would you be the Center’s first spokesman? Honorary President? Beneficiary? The algorithms you spoke about are ready to serve humanity.”

Nguyen Anh Tuan:

“Dear Judea, Michael Dukakis Institute and AIWS.net put Modern Causal Inference as course of AI World Society Leadership Master Degree.

Congratulations. We will introduce in AIWS Weekly Newsletter on Monday morning May 26, 2020. AIWS.net with Modern Causal Inference, promotes “the Book of WHY”, encourages research, invests in Causal Inference, applies Causal Inference to develop Decision Making Systems.”

by Editor | May 25, 2020 | Uncategorized

Hosted by the Prosperity Collaborative, a newly formed coalition of the World Bank, MIT, EY, New America, and the Michael Dukakis Institute

Public finance is not a typical frontline crisis management function. Yet in recent months, revenue management has been filling a critical role in managing the public sector response to the coronavirus pandemic. As governments provide their citizens with immediate economic relief from the pandemic, public finance officials have been taking on greatly expanded responsibilities such as administering payments and other benefits programs.

The Prosperity Collaborative’s inaugural online event shares strategies that can help tax bureaus manage the crisis and serve their constituents. The first online panel will focus on how tax administrations can remain resilient by transforming their missions and operationalizing relief programs. The second online panel will explore how innovative technology can help leaders in public finance build stronger, more effective institutions.

This event is hosted by the Prosperity Collaborative, a coalition of the World Bank, MIT, EY, the Digital Impact and Governance Initiative at New America, and the Boston Global Forum Michael Dukakis Institute for Leadership and Innovation. They bring together a diverse set of leading partners from the public sector, private sector, academia, and civil society to develop new open source technologies and digital public goods that will transform tax systems and enhance local capacity with an initial focus on emerging economies.

Time: May 27th at 8:45am ET.

Speakers:

Marcello Estevão, Global Director, Macroeconomics, Trade & Investment, World Bank

Chiara Bronchi, Global Practice Manager, Fiscal Policy & Sustainable Growth, World Bank

Kate Barton, Global Vice Chair – Tax, EY

Sandy Pentland, Professor, Connection Science, Massachusetts Institute of Technology

Tomicah Tillemann, Director, Digital Impact and Governance Initiative, New America

Jacky Wright, Chief Digital Officer, Microsoft

Jeff Saviano, Global Tax Innovation Leader, EY

Raul Felix Junquera-Varela, Global Lead on Domestic Resource Mobilization, World Bank

Jeffrey Cooper, Executive Industry Consultant, SAS Institute

by Editor | May 25, 2020 | News

COVID-19 doesn’t create cookie-cutter infections. Some people have extremely mild cases while others find themselves fighting for their lives.

Clinicians are working with limited resources against a disease that is very hard to predict. Knowing which patients are most likely to develop severe cases could help guide clinicians during this pandemic.

We are two researchers at New York University who study predictive analytics and infectious diseases. In early January, we realized that it was very possible the new coronavirus in China was going to make its way to New York, and we wanted to develop a tool to help clinicians deal with the incoming surge of cases. We thought predictive analytics—a form of artificial intelligence—would be a good technology for this job.

In a general sense, this type of AI looks at existing data to find patterns and then uses those patterns to make predictions about the future. Using data from 53 COVID-19 cases in January and February, we developed a group of algorithms to determine which mildly ill patients were likely to become severely ill.

Our experimental tool helped predict which people were going to get the most sick. In doing so, it also found some unexpected early clinical signs that predict severe cases of COVID-19.

The algorithms we designed were trained on a small data set and at this point are only a proof-of-concept tool, but with more data, we believe later versions could be extremely helpful to medical professionals.

The original article can be found here.

According to the Artificial Intelligence World Society Innovation Network (AIWS.net), AI can be an important technology and a potential tool for COVID-19 prediction. In this effort, the Michael Dukakis Institute for Leadership and Innovation (MDI) invites participation and collaboration with think tanks, universities, non-profits, firms, and other entities that share its commitment to the constructive development of full-scale AI for world society.

by Editor | May 25, 2020 | News

Artificial intelligence won’t be very smart if computers don’t grasp cause and effect. That’s something even humans have trouble with.

In less than a decade, computers have become extremely good at diagnosing diseases, translating languages, and transcribing speech. They can outplay humans at complicated strategy games, create photorealistic images, and suggest useful replies to your emails.

Yet despite these impressive achievements, artificial intelligence has glaring weaknesses.

Machine-learning systems can be duped or confounded by situations they haven’t seen before. A self-driving car gets flummoxed by a scenario that a human driver could handle easily. An AI system laboriously trained to carry out one task (identifying cats, say) has to be taught all over again to do something else (identifying dogs). In the process, it’s liable to lose some of the expertise it had in the original task.

Computer scientists call this problem “catastrophic forgetting.”

These shortcomings have something in common: they exist because AI systems don’t understand causation. They see that some events are associated with other events, but they don’t ascertain which things directly make other things happen. It’s as if you knew that the presence of clouds made rain likelier, but you didn’t know clouds caused rain.

The dream of endowing computers with causal reasoning drew Elias Bareinboim from Brazil to the United States in 2008, after he completed a master’s in computer science at the Federal University of Rio de Janeiro. He jumped at an opportunity to study under Judea Pearl, a computer scientist and statistician at UCLA. Pearl, 83, is a giant—the giant—of causal inference, and his career helps illustrate why it’s hard to create AI that understands causality.

Pearl says AI can’t be truly intelligent until it has a rich understanding of cause and effect. Although causal reasoning wouldn’t be sufficient for an artificial general intelligence, it’s necessary, he says, because it would enable the introspection that is at the core of cognition. “What if” questions “are the building blocks of science, of moral attitudes, of free will, of consciousness,” Pearl told me.

You can’t draw Pearl into predicting how long it will take for computers to get powerful causal reasoning abilities. “I am not a futurist,” he says. But in any case, he thinks the first move should be to develop machine-learning tools that combine data with available scientific knowledge: “We have a lot of knowledge that resides in the human skull which is not utilized.”

The original article can be found here.

Professor Judea Pearl is a pioneer in causal inference and AI, and his work was recognized with the Turing Award in 2011. Professor Pearl currently also contributes to work on causal inference for AI transparency, one of the important AI World Society (AIWS.net) topics on AI ethics at the Michael Dukakis Institute for Leadership and Innovation (MDI) and the Boston Global Forum (BGF).

by Editor | May 17, 2020 | News

The WHO has been accused by the U.S. and its allies of turning a blind eye while China withheld information

Japan will call for an investigation into the World Health Organization’s initial response to the coronavirus pandemic, Prime Minister Shinzo Abe has said.

“With the European Union, (Japan) will propose that a fair, independent and comprehensive verification be conducted,” Abe said on an internet program on Friday, May 15, 2020.

He said the proposal will be made at the WHO’s general assembly to begin Monday.

Foreign Minister Toshimitsu Motegi also said Friday that Japan is joining a chorus of calls for such an investigation, which should be conducted by an independent body.

“This disease has had a devastating impact on the entire world, and information must be shared between countries in a free, transparent and timely manner, lest we risk it spreading even more quickly,” Motegi said in a parliamentary session, in reference to COVID-19, the respiratory disease caused by the novel coronavirus.

“There’s a lot of discussion in the international community about precisely where the virus came from and the initial response,” he said. “There needs to be a thorough investigation, and it’s crucial that this be carried out by an independent body.”

The original article can be found here.

Japanese Prime Minister Shinzo Abe was honored with the World Leader for Peace and Security Award by the Boston Global Forum at the Harvard University Faculty Club on Global Cybersecurity Day, December 12, 2015.

by Editor | May 17, 2020 | Event Updates

Professor Cheryl Misak will speak about the life of Frank Ramsey at 10:00 am EST on June 6, 2020, at AIWS House, a part of the History of AI, at AIWS.net.

Frank Ramsey, a philosopher, economist, and mathematician, was one of the greatest minds of the last century.

Professor Judea Pearl, Mentor of AIWS.net, said: “Ramsey was definitely one of the clearest forerunners of subjective probabilities and the revival of Bayes statistics in the 20th century, which influenced the 1970-90 debate on how to represent uncertainty in AI systems.”

Cheryl Misak is a Professor of Philosophy and former Vice President and Provost at the University of Toronto.

She is the author of numerous papers and five books: Cambridge Pragmatism: From Peirce and James to Ramsey and Wittgenstein (OUP 2016); The American Pragmatists (OUP 2013); Truth, Politics, Morality: Pragmatism and Deliberation (Routledge 2000); Verificationism: Its History and Prospects (Routledge 1995); and Truth and the End of Inquiry: A Peircean Account of Truth (OUP 1991).

She has also published a biography of the great Cambridge philosopher, mathematician, and economist Frank Ramsey, who died in 1930 at the age of 26. It was published in April 2020 by Oxford University Press under the title Frank Ramsey: A Sheer Excess of Powers.