by Editor | Sep 12, 2020 | News

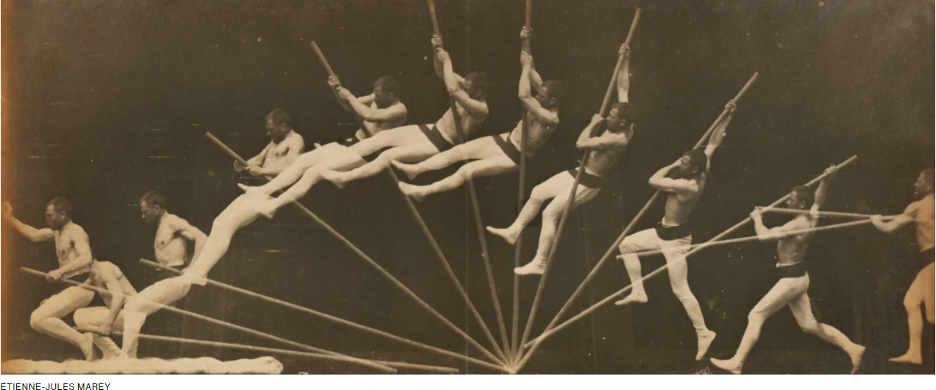

Nobody knows what will happen in the future, but some guesses are a lot better than others. A kicked football will not reverse in midair and return to the kicker’s foot. A half-eaten cheeseburger will not become whole again. A broken arm will not heal overnight.

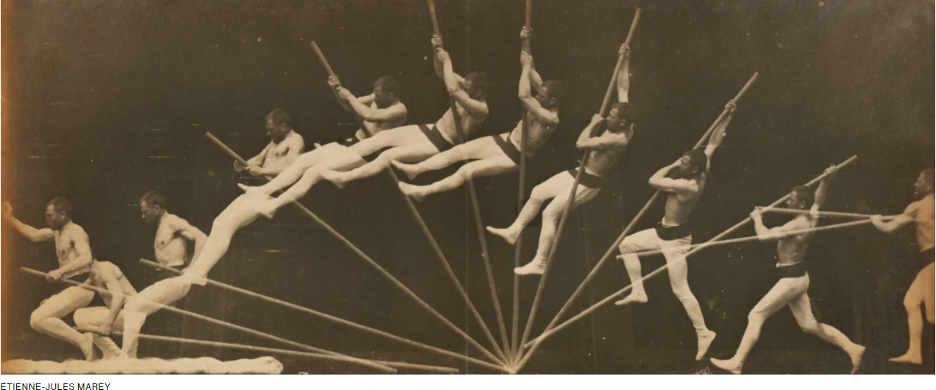

By drawing on a fundamental description of cause and effect found in Einstein’s theory of special relativity, researchers from Imperial College London have come up with a way to help AIs make better guesses too.

The world progresses step by step, every instant emerging from those that precede it. We can make good guesses about what happens next because we have strong intuitions about cause and effect, honed by observing how the world works from the moment we are born and processing those observations with brains hardwired by millions of years of evolution.

Computers, however, find causal reasoning hard. Machine-learning models excel at spotting correlations but are hard pressed to explain why one event should follow another. That’s a problem, because without a sense of cause and effect, predictions can be wildly off. Why shouldn’t a football reverse in flight?

This is a particular concern with AI-powered diagnosis. Diseases are often correlated with multiple symptoms. For example, people with type 2 diabetes are often overweight and have shortness of breath. But the shortness of breath is not caused by the diabetes, and treating a patient with insulin will not help with that symptom.

The AI community is realizing how important causal reasoning could be for machine learning and is scrambling to find ways to bolt it on.

“It’s very cool to see ideas from fundamental physics being borrowed to do this,” says Ciaran Lee, a researcher who works on causal inference at Spotify and University College London. “A grasp of causality is really important if you want to take actions or decisions in the real world,” he says. It goes to the heart of how things come to be the way they are: “If you ever want to ask the question ‘Why?’ then you need to understand cause and effect.”

The original article can be found here.

In the field of causal inference, Professor Judea Pearl is a pioneer who developed a theory of causal and counterfactual inference based on structural models. In 2011, Professor Pearl won the Turing Award, computer science’s highest honor, for “fundamental contributions to artificial intelligence through the development of a calculus of probabilistic and causal reasoning.” In 2020, Professor Pearl was also honored as World Leader in AI World Society (AIWS.net) by the Michael Dukakis Institute for Leadership and Innovation (MDI) and the Boston Global Forum (BGF). Professor Pearl currently also contributes to Causal Inference for AI transparency, one of the important AIWS.net topics on AI Ethics.

by Editor | Sep 6, 2020 | News

The Social Contract 2020, A New Social Contract in the Age of AI, version 2.0, will be officially completed on September 9, and will be launched at the Policy Lab “Transatlantic Approaches on Digital Governance – A New Social Contract on Artificial Intelligence”, co-organized by World Leadership Alliance-Club de Madrid and the Boston Global Forum, on 16-18 September 2020.

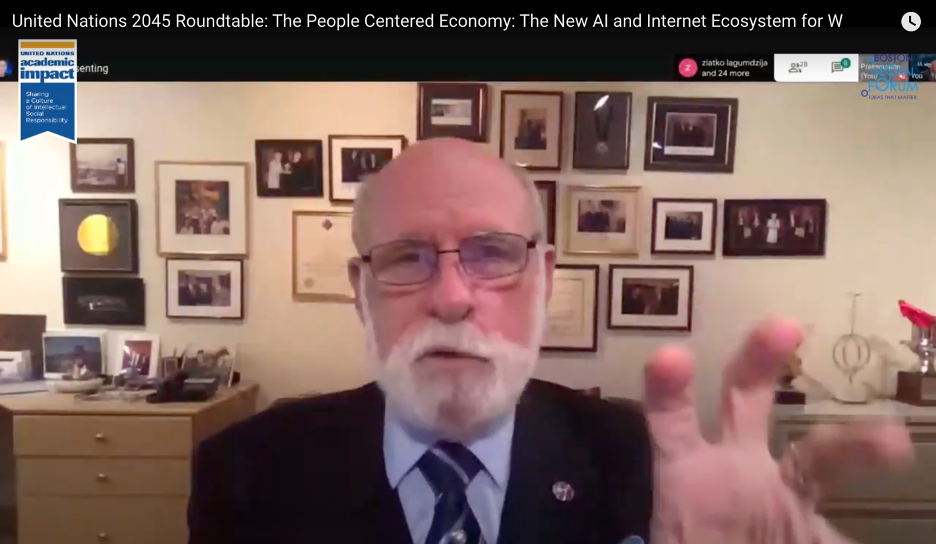

In Session I: The AIWS Social Contract 2020 and AIWS Innovation Network: A Platform for Transatlantic Cooperation, Presidents, Prime Ministers, distinguished thinkers, and innovators together with Nguyen Anh Tuan, CEO of the Boston Global Forum, and Vint Cerf, Father of the Internet, Chief Internet Evangelist of Google, will discuss the Social Contract 2020, AIWS.net, and AIWS City as a practice of the Social Contract 2020 and a platform for Transatlantic Cooperation.

Former Prime Minister of Bosnia and Herzegovina Zlatko Lagumdžija joins as a co-author of the Social Contract 2020, a Mentor of the AIWS Innovation Network (AIWS.net), and a member of the AIWS City Board of Leaders and the History of AI Board.

An experienced political leader from a special country, a professor of computer science and economics, and a member of World Leadership Alliance-Club de Madrid, Prime Minister Lagumdžija plays a significant role in, and has made great contributions to, the Social Contract 2020, AIWS.net, and AIWS City.

“We are thrilled that Zlatko Lagumdžija is joining us,” said Governor Michael Dukakis. “We are all very impressed by his breadth of knowledge, achievements and his passionate commitment to fundamental rights and democratic governance.”

Prime Minister Lagumdžija said: “I am very honored to join the Board of AIWS Innovation Network (AIWS.net), AIWS City, and the History of AI, and to be a co-author of the Social Contract 2020. We must ensure that new technologies, and AI in particular, promote a better world.”

Lagumdžija is a speaker at the Policy Lab “Transatlantic Approaches on Digital Governance – A New Social Contract on Artificial Intelligence”.

by Editor | Sep 6, 2020 | News

Club de Madrid, in partnership with the Boston Global Forum, and with the generous support of Tram Huong Khanh Hoa Company (ATC), presents a Policy Lab that will analyse challenges to digital and AI governance from a transatlantic perspective and offer actionable policy solutions as we consider the need to build a new social contract that will adequately tackle these challenges.

Leaders of the Boston Global Forum and AIWS.net, such as Governor Michael Dukakis, Father of the Internet Vint Cerf, Prime Minister Zlatko Lagumdžija, Nguyen Anh Tuan, Marc Rotenberg, and professors Thomas Patterson, Nazli Choucri, David Silbersweig, and Alex Pentland, will attend and present the Social Contract 2020 at this special event on 16-18 September 2020.

By contrasting North American and European best practices and perspectives, Club de Madrid and the Boston Global Forum aim to explore innovative ideas, formulate actionable policy recommendations, and consider the need to build a new social contract that will adequately tackle the challenges raised by artificial intelligence and digital governance. Both organizations will look to identify ways of engaging technology companies in public policy making while protecting the democratic mandate, which guarantees policies that serve the general interest rather than that of a few actors.

https://boston.dialoguescdm.org/

http://www.clubmadrid.org/policy-lab-transatlantic-approaches-on-digital-governance-a-new-social-contract-on-artificial-intelligence/

by Editor | Sep 6, 2020 | Event Updates

Club de Madrid, in partnership with the Boston Global Forum, presents a Policy Lab to analyze current global challenges from a transatlantic perspective and offer ensuing policy solutions on digital technologies and artificial intelligence. This is a special 3-day event from September 16-18, 2020, attended by political leaders, distinguished thinkers, and innovators. The Boston Global Forum will launch and present “the Social Contract 2020, A New Social Contract in the Age of AI” and the AIWS City at this event.

World Chess Champion Garry Kasparov, author of “Deep Thinking”, is a speaker at the “Transatlantic Approaches on Digital Governance – A New Social Contract on Artificial Intelligence” conference.

World Leadership Alliance-Club de Madrid (WLA-CdM) is the largest worldwide assembly of political leaders working to strengthen democratic values, good governance and the well-being of citizens across the globe.

As a non-profit, non-partisan, international organization, its network is composed of more than 100 democratic former Presidents and Prime Ministers from over 70 countries, together with a global body of advisors and expert practitioners, who offer their voice and agency on a pro bono basis, to today’s political, civil society leaders and policymakers. WLA-CdM responds to a growing demand for trusted advice in addressing the challenges involved in achieving democracy that delivers, building bridges, bringing down silos and promoting dialogue for the design of better policies for all.

by Editor | Sep 6, 2020 | News

Researchers train a model to reach human-level performance at recognizing abstract concepts in video.

The ability to reason abstractly about events as they unfold is a defining feature of human intelligence. We know instinctively that crying and writing are means of communicating, and that a panda falling from a tree and a plane landing are variations on descending.

Organizing the world into abstract categories does not come easily to computers, but in recent years researchers have inched closer by training machine learning models on words and images infused with structural information about the world, and how objects, animals, and actions relate. In a new study at the European Conference on Computer Vision this month, researchers unveiled a hybrid language-vision model that can compare and contrast a set of dynamic events captured on video to tease out the high-level concepts connecting them.

Their model did as well as or better than humans at two types of visual reasoning tasks — picking the video that conceptually best completes the set, and picking the video that doesn’t fit. Shown videos of a dog barking and a man howling beside his dog, for example, the model completed the set by picking the crying baby from a set of five videos. Researchers replicated their results on two datasets for training AI systems in action recognition: MIT’s Multi-Moments in Time and DeepMind’s Kinetics.

The original article can be found here.

In addition to AI for reasoning, AI and causal inference is also an important topic. Professor Judea Pearl is a pioneer who developed a theory of causal and counterfactual inference based on structural models. In 2020, Professor Pearl was also honored as World Leader in AI World Society (AIWS.net) by the Michael Dukakis Institute for Leadership and Innovation (MDI) and the Boston Global Forum (BGF). In the future, Professor Pearl will also contribute to Causal Inference for AI transparency, one of the important AIWS topics on AI Ethics.

by Editor | Aug 30, 2020 | News

Paul Nemitz, a member of the AIWS Standards Committee and co-author of the AIWS-G7 Summit Initiative 2019, has launched his new book “The Human Imperative – Power, Freedom and Democracy in the Age of Artificial Intelligence”.

The book is about power in the age of Artificial Intelligence (AI). It looks at what the new technical powers that have accrued over the last decades mean for the freedom of people and for our democracies. Our starting point is that AI must not be considered in isolation, but rather in a very specific context: the concentration of economic and digital technological power that we see today. Analysis of the effects of AI requires that we take a holistic view of the business models of digital technologies and of the power they exercise today. The rise of technology and the power of control and manipulation associated with it leads, in our firm conviction, to the need to reconsider the principle of the human being, to ensure humans collectively benefit from this technology, that they control it, and that a humane future determined by man remains possible.

by Editor | Aug 30, 2020 | Event Updates

The Policy Lab on Transatlantic Approaches on Digital Governance: A New Social Contract in the Age of Artificial Intelligence, will be held in a virtual format over a three-day period between the 16th and the 18th of September, hosted by The World Leadership Alliance-Club de Madrid (WLA-CdM) and the Boston Global Forum (BGF).

The COVID-19 outbreak and ensuing global health crisis have significantly accelerated the deployment and decentralization of digital technologies and Artificial Intelligence (AI). Their role in almost every facet of today’s life merits in-depth analysis, particularly timely in the current context, when their use will present us with both challenges and opportunities, concerns and solutions.

Our Policy Lab will bring the governance experience of World Leadership Alliance-Club de Madrid Members, democratic former Presidents and Prime Ministers from over seventy countries, together with the knowledge of experts and scholars in a multi-stakeholder, multidisciplinary platform aimed at generating action-oriented analysis and policy recommendations for the development of a new social contract on digital governance. All this, from a Transatlantic perspective and with the experience of a ravaging, global pandemic that has underlined the need to strengthen international cooperation and the multilateral system as we build a digital future for all. Through the voice and agency of WLA-CdM Members, WLA-CdM and BGF will bring the results of this discussion to the global conversation steered by the UN as part of its 75th anniversary and other major action-oriented discussions taking place on this most pressing topic.

Vint Cerf, Father of the Internet; Nguyen Anh Tuan, CEO of BGF; the former Prime Minister of Finland; the former President of Latvia; the former Prime Minister of Bosnia and Herzegovina; and professors from Harvard and MIT will speak at Session I: The AIWS Social Contract 2020 and AIWS Innovation Network: A Platform for Transatlantic Cooperation, on September 17th at 9:30 EDT.

by Editor | Aug 30, 2020 | News

A PDF of this letter can be found here.

Boston, 28 August 2020

Prime Minister Shinzo Abe

Cabinet Secretariat,

1-6-1 Nagata-cho, Chiyoda-ku,

Tokyo 100 – 8968, Japan

Dear Prime Minister Abe,

We write to offer our respect and to thank you for your work to promote peace and cyber security. You have worked tirelessly to make cybersecurity a priority in Japan and in the international community.

You supported the Ethics Code of Conduct for Cyber Peace and Security conceived by the Boston Global Forum in December 2015. You also supported the BGF-G7 Summit Initiative – Ise-Shima Norms 2016, when Japan hosted the G-7 Summit. Among the Summit Initiatives was the joint Boston Global Forum-G7 effort to prevent cyberwar and fake news.

You also promoted a human-centric view of Artificial Intelligence that led to the OECD AI Principles which were adopted by the G-20 nations at the Ministerial Summit in Osaka. You also put forward the concept of Data Free Flow with Trust at the World Economic Forum in 2019, a new model for data governance that OECD Secretary General Angel Gurria described as “ambitious and timely.”

And through Abenomics, the “three arrows” of monetary easing, fiscal stimulus, and structural reforms, unemployment has fallen and the ratio of public debt to GDP has leveled off. You brought women into the workforce, grew the economy, and kept deflation under control.

You have long been a great friend of the Boston Global Forum. We were honored to give you the 2015 World Leader in Cybersecurity award for your exemplary leadership and your contributions in promoting cybersecurity in Japan and Asia.

The Boston Global Forum looks forward to future collaboration with the government of Japan as we promote the New Social Contract in the Age of AI, as well as the AI World Society City as a practical model.

Wishing you good health and continued success.

Yours sincerely,

Governor Michael Dukakis

Chair, the Boston Global Forum

Nguyen Anh Tuan

CEO, the Boston Global Forum

Marc Rotenberg

Director, Center for AI and Digital Policy at Michael Dukakis Institute

by Editor | Aug 30, 2020 | News

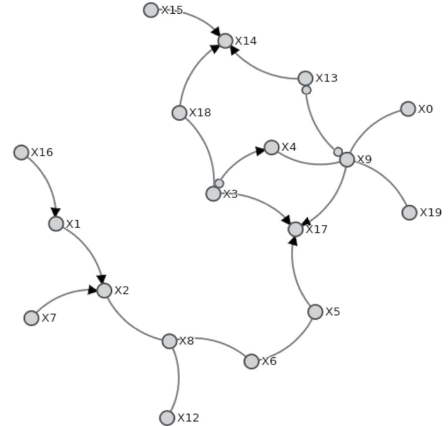

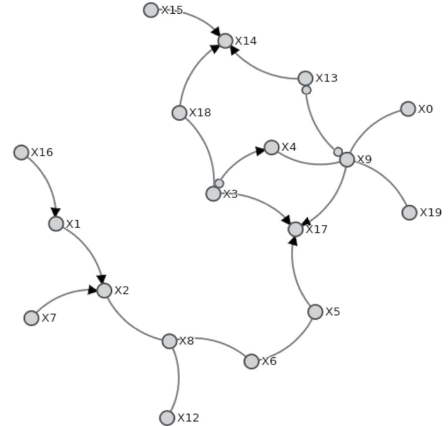

The Tetrad Automated Causal Discovery Platform was awarded the Super Artificial Intelligence Leader (SAIL) award at the World Artificial Intelligence Conference held in Shanghai in July. The award recognizes fundamental advances in the basic theory, methods, models and platforms of artificial intelligence (AI). Tetrad, developed by Peter Spirtes, Clark Glymour, Richard Scheines and Joe Ramsey of Carnegie Mellon University’s Philosophy Department, was one of four projects chosen for a SAIL award from over 800 nominees, including nominations from Amazon, IBM, Microsoft and Google.

“The Tetrad project, including the open-source Tetrad software package and the now standard reference book, ‘Causation, Prediction, and Search’ (1993), are the basis for the modern theory of causal discovery,” said Chris Meek, principal researcher at Microsoft Research. “The ideas and software that grew from this project have fundamentally shifted how researchers explore and interpret observational data.” The book has almost 8,000 citations.

The Tetrad project was started nearly 40 years ago by Glymour, then a professor of history and philosophy of science at the University of Pittsburgh and now Alumni University Professor Emeritus of Philosophy at CMU, and his doctoral students, Richard Scheines, now Bess Family Dean of the Dietrich College of Humanities and Social Sciences and a professor of philosophy at CMU, and Kevin Kelly, now professor of philosophy at CMU.

Fundamental to the work was providing a set of general principles, or axioms, for deriving testable predictions from any causal structure. For example, consider the coronavirus. Exposure to the virus causes infection, which in turn causes symptoms (Exposure → Infection → Symptoms). Since not all exposures result in infections, and not all infections result in symptoms, these relations are probabilistic. But if we assume that exposure can only cause symptoms through infection, the testable prediction from the axiom is that Exposure and Symptoms are independent given Infection. That is, although knowing whether someone was exposed is informative about whether they will develop symptoms, once we already know whether someone is infected, knowing whether they were exposed adds no extra information. This is a claim that can be tested statistically with data.
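The prediction in the coronavirus example can be illustrated with a small simulation. The sketch below is not the Tetrad software or its algorithms; it simply samples from a hypothetical Exposure, Infection, Symptoms chain and compares symptom rates by counting. All probabilities, the names `simulate` and `p_symptoms`, and the small infection rate among the unexposed (standing in for unrecorded exposure, so that the comparison group is not empty) are assumptions made for illustration.

```python
import random

# Hypothetical probabilities, chosen only for illustration.
P_EXPOSED = 0.5
P_INFECTED = {True: 0.40, False: 0.05}  # P(infected | exposed?)
P_SYMPTOMS = {True: 0.70, False: 0.10}  # P(symptoms | infected?)


def simulate(n=200_000, seed=0):
    """Sample (exposed, infected, symptoms) triples from the causal chain."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        exposed = rng.random() < P_EXPOSED
        infected = rng.random() < P_INFECTED[exposed]
        # Symptoms depend on infection only, never directly on exposure.
        symptoms = rng.random() < P_SYMPTOMS[infected]
        rows.append((exposed, infected, symptoms))
    return rows


def p_symptoms(rows, exposed, infected=None):
    """Estimate P(symptoms | exposed[, infected]) by simple counting."""
    selected = [s for e, i, s in rows
                if e == exposed and (infected is None or i == infected)]
    return sum(selected) / len(selected)


rows = simulate()

# Without conditioning, exposure is informative about symptoms.
gap_marginal = p_symptoms(rows, True) - p_symptoms(rows, False)

# Once infection status is fixed, exposure should add nothing.
gap_infected = p_symptoms(rows, True, True) - p_symptoms(rows, False, True)
gap_healthy = p_symptoms(rows, True, False) - p_symptoms(rows, False, False)

print(f"gap ignoring infection:   {gap_marginal:+.3f}")
print(f"gap among the infected:   {gap_infected:+.3f}")
print(f"gap among the uninfected: {gap_healthy:+.3f}")
```

Under these assumed probabilities the first gap should be clearly nonzero (roughly 0.2), while the two conditional gaps should be close to zero up to sampling noise. A real analysis would replace the eyeballed comparison with a formal conditional independence test.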

“Causality, with its focus on modeling and reasoning about interventions, can … take the field [of AI] to the next level … the CMU group including Peter Spirtes, Clark Glymour, Richard Scheines and Joseph Ramsey was at the center of the development, not just in terms of algorithm development, but crucially also by providing Tetrad, the de facto standard in causal discovery software,” said Bernhard Schölkopf, Amazon Distinguished Scholar and chief machine learning scientist and director, Max Planck Institute for Intelligent Systems in Germany.

The original article can be found here.

It is useful to note that their book and Judea Pearl’s Causality are the two most important texts on causality using Bayesian networks and AI. In 2011, Professor Judea Pearl was awarded the Turing Award. In 2020, Professor Pearl was also honored as World Leader in AI World Society (AIWS.net) by the Michael Dukakis Institute for Leadership and Innovation (MDI) and the Boston Global Forum (BGF). Professor Pearl currently also contributes to Causal Inference for AI transparency, one of the important AIWS.net topics on AI Ethics.