by Editor | Dec 16, 2019 | News

Evans Data Corporation’s latest study of AI and machine learning development provides insights into the challenges developers face when building enterprise-level, high-quality AI apps. The study, Artificial Intelligence and Machine Learning 2019, Volume 2, is based on interviews with 500 AI and machine learning developers globally. Focusing on the attitudes, adoption patterns and intentions of AI and machine learning developers worldwide, the 187-page study is one of the most comprehensive of its kind. What makes the study noteworthy is the depth of its research into the challenges AI and machine learning developers face today.

- AI and machine learning developers most often rely on language and speech-based Application Programming Interfaces (APIs).

- 50% say gathering or generating data is the most challenging aspect of continually training and fine-tuning AI models.

- AI and machine learning developers say that the complexity of managing operations is the top challenge they face when developing AI applications.

To support AI and machine learning development for enterprise applications, the Michael Dukakis Institute for Leadership and Innovation (MDI) has established the AI World Society (AIWS), which invites participation and collaboration from think tanks, universities, non-profits, firms, and enterprises that share its commitment to the constructive development of AI.

The original article can be found here.

by Editor | Dec 8, 2019 | News

Co-founder of the Boston Global Forum and AI World Society Innovation Network, Professor Thomas Patterson, spoke on CNN about his new book “How America Lost Its Mind”.

Harvard professor Thomas Patterson, author of the new book “How America Lost Its Mind,” says “motivated reasoning” is at work in the impeachment inquiry. People “start with a bias and then we try to find facts that kind of align with that,” he says. “We have an enormous capacity for selective reception.”

The original video from CNN can be found here.

by Editor | Dec 8, 2019 | News

Professor Nazli Choucri of MIT, co-founder of the AIWS Social Contract 2020, will give the presentation “On the Drafting for the Cyber safety of the AI World Society Social Contract 2020”. She is one of the key leaders of the Michael Dukakis Institute and the Social Contract 2020.

Nazli Choucri is Professor of Political Science. Her work is in the area of international relations, most notably on sources and consequences of international conflict and violence. Professor Choucri is the architect and Director of the Global System for Sustainable Development (GSSD), a multi-lingual web-based knowledge networking system focusing on the multi-dimensionality of sustainability. As Principal Investigator of an MIT-Harvard multi-year project on Explorations in Cyber International Relations (ECIR), she directed a multi-disciplinary and multi-method research initiative, constructing a cyber-inclusive view of international relations (Cyber-IR System) – with theory, data, analyses, simulations – to anticipate and respond to cyber threats and challenges to national security and international stability. She is Editor of the MIT Press Series on Global Environmental Accord and, formerly, General Editor of the International Political Science Review. She also previously served as the Associate Director of MIT’s Technology and Development Program.

The author of eleven books and over 120 articles, Dr. Choucri is a member of the European Academy of Sciences. She has been involved in research or advisory work for national and international agencies, and for a number of countries, notably Algeria, Canada, Colombia, Egypt, France, Germany, Greece, Honduras, Japan, Kuwait, Mexico, Pakistan, Qatar, Sudan, Switzerland, Syria, Tunisia, Turkey, United Arab Emirates and Yemen. She served two terms as President of the Scientific Advisory Committee of UNESCO’s Management of Social Transformation (MOST) Program.

by Editor | Dec 8, 2019 | News

8:30 am:

Introduction, by Professor Thomas Patterson, Harvard University

Opening Remarks by Governor Michael Dukakis, Co-founder and Chair of the Boston Global Forum, Co-founder of AIWS Innovation Network

8:45 am:

Keynote speech by Mr. Taro Kono, current Japanese Minister of Defense and former Minister for Foreign Affairs: On a Vision for Ensuring Trusted Data for Use by the Japanese Government.

Presentation by Mr. Yasuhide Nakayama, former Vice Minister for Foreign Affairs: How AIWS Young Leaders Can Convince Governments and Corporations to Respect AIWS Norms.

Presentation by Professor Alex Sandy Pentland, Professor and Director of MIT’s Human Dynamics Laboratory and the MIT Media Lab Entrepreneurship Program, Co-founder of AIWS Innovation Network: On Building an AI World Society of Trusted Data

Presentation by Professor Nazli Choucri, Professor of Political Science at MIT, Co-founder of AIWS Innovation Network: On the Drafting for the Cyber safety of the AI World Society Social Contract 2020.

Roundtable Discussion, Moderated by Governor Michael Dukakis

Presentation by Marc Rotenberg, President of EPIC: To Ensure Transparency of AI Policymaking in the US

11:35 am:

Announcement of the AIWS Innovation Network. Co-founders of the AIWS Innovation Network: Governor Michael Dukakis, Mr. Nguyen Anh Tuan, Prof. Nazli Choucri, Prof. Thomas Patterson, Prof. Alex Pentland, Prof. David Silbersweig, Prof. Christo Wilson.

Congratulatory Remarks by Mr. Nam Pham, Assistant Secretary of Business Development and International Trade, Massachusetts Government.

Presentation by Ms. Rebecca Leeper, Computer Ethics and Data Policy Advocate, researcher into emerging technologies and their international security implications at the United Nations in Geneva, Switzerland: On the AI World Society Innovation Network and Monitoring AI Developments and Uses by Governments, Corporations, and Non-profit Organizations.

11:55 am:

Recognition of President Vaira Vike-Freiberga, President of Latvia (1999-2007) and current President of the World Leadership Alliance – Club de Madrid. President Vike-Freiberga is the recipient of this year’s BGF World Leader for Peace and Security Award.

by Editor | Dec 8, 2019 | News

At the AIWS Conference at the Harvard University Faculty Club, Professor Kanter spoke with Professor Alex “Sandy” Pentland about rules and regulations governing data for the AIWS Social Contract 2020. She called on non-governmental organizations to build international solutions and tools that protect individuals’ rights to their information and data and that address fake news, and she expressed hope that the AIWS Social Contract could help solve these issues.

Rosabeth Moss Kanter holds the Ernest L. Arbuckle Professorship at Harvard Business School, specializing in strategy, innovation, and leadership for change. Her strategic and practical insights guide leaders worldwide through teaching, writing, and direct consultation to major corporations, governments, and start-up ventures. She co-founded the Harvard University-wide Advanced Leadership Initiative, guiding its planning from 2005 to its launch in 2008 and serving as Founding Chair and Director from 2008 to 2018 as it became a growing international model for a new stage of higher education preparing successful top leaders to apply their skills to national and global challenges. She is the author or co-author of 20 books; her latest, to be published in January 2020, is Think Outside the Building: How Advanced Leaders Can Change the World One Smart Innovation at a Time.

by Editor | Dec 8, 2019 | News

The popularity of AI and ML has wide-reaching effects on your enterprise. Here are three important trends driven by AI to look out for next year.

The Rise of AutoML 2.0 Platforms

As the need for additional AI applications grows, businesses will need to invest in technologies that help them accelerate the data science process. However, implementing and optimizing machine learning models is only part of the data science challenge. In fact, the vast majority of the work that data scientists must perform is associated with the tasks that precede the selection and optimization of ML models, such as feature engineering, the heart of data science.

The Shift to Automation Will Intensify Focus on Privacy and Regulations

As AI and ML models become easier to create using advanced “AutoML 2.0” platforms, data scientists and citizen data scientists will begin to scale ML and AI model production in record numbers. This means organizations will need to pay special attention to data collection, maintenance, and privacy oversight to ensure that the creation of new, sophisticated models does not violate privacy laws or cause privacy concerns for consumers.

More Citizen Data Scientists Doing Data Science

Big data will continue to be on the upsurge in 2020 with a growing demand for skilled data scientists and a continued shortage of data science talent — creating ongoing challenges for businesses implementing AI and ML initiatives. Although AutoML platforms have alleviated some of the pressure on data science teams, they have not resulted in the productivity gains organizations are seeking from their AI and ML initiatives. As such, companies need better solutions to help them leverage their data for business insights.

To support AI technology and development for social impact, the Michael Dukakis Institute for Leadership and Innovation (MDI) has established the AI World Society (AIWS), which invites participation and collaboration from think tanks, universities, non-profits, firms, and start-up companies that share its commitment to the constructive development of AI.

The original article can be found here.

by Editor | Dec 8, 2019 | News

The news: Customers in China who buy SIM cards or register new mobile-phone services must have their faces scanned under a new law that came into effect yesterday. China’s government says the new rule, which was passed into law back in September, will “protect the legitimate rights and interest of citizens in cyberspace.”

A controversial step: It can be seen as part of an ongoing push by China’s government to make sure that people use services on the internet under their real names, thus helping to reduce fraud and boost cybersecurity. On the other hand, it also looks like part of a drive to make sure every member of the population can be surveilled.

How do Chinese people feel about it? It’s hard to say for sure, given how strictly the press and social media are regulated, but there are hints of growing unease over the use of facial recognition technology within the country. From the outside, there has been a lot of concern over the role the technology will play in the controversial social credit system, and how it’s been used to suppress Uighur Muslims in the western region of Xinjiang.

Knock-on effect: How facial recognition plays out in China might have an impact on its use in other countries, too. Chinese tech firms are helping to create influential United Nations standards for the technology, The Financial Times reported yesterday. These standards will help shape rules on how facial recognition is used around the world, particularly in developing countries.

In contrast, the Michael Dukakis Institute for Leadership and Innovation (MDI) and the Artificial Intelligence World Society (AIWS) have developed the AIWS Ethics and Practice Index to measure ethical values and help people achieve well-being and happiness, as well as to address important issues such as the SDGs. Accordingly, they do not recommend using AI technology, including facial recognition, for citizen surveillance or for regulating the press and social media.

The original article can be found here.

by Editor | Dec 2, 2019 | News

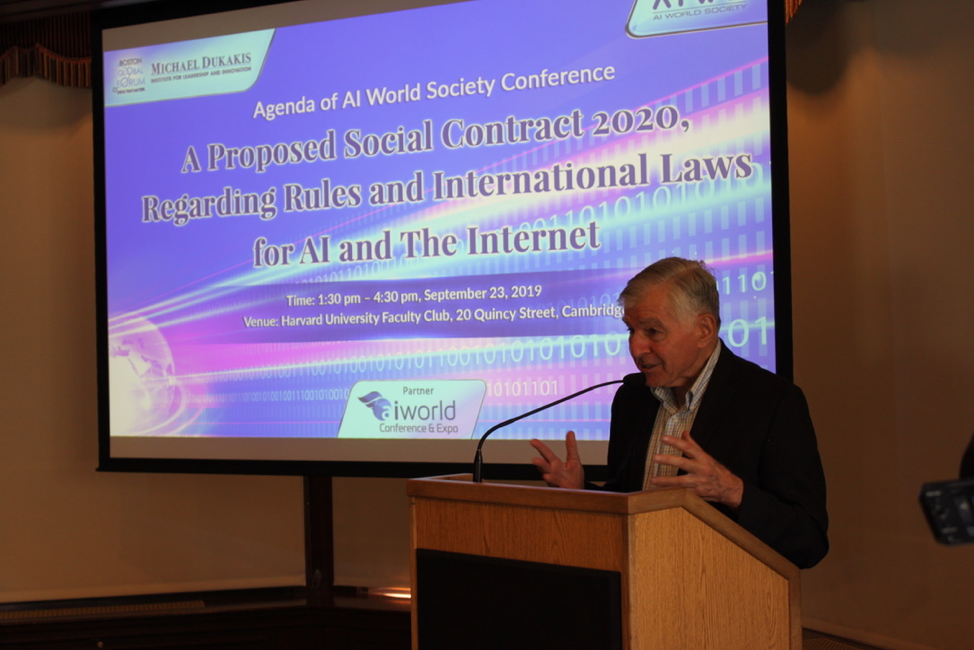

On September 23, 2019, at the AIWS Conference at the Harvard University Faculty Club, former President of Ecuador Jamil Mahuad discussed the AIWS Social Contract 2020.

President Jamil Mahuad is a candidate for the Nobel Peace Prize and is now a visiting professor at Harvard University. He is very interested in the AIWS Social Contract 2020 and enthusiastically contributed ideas from the point of view of governments.

Some important questions to resolve for the AIWS Social Contract 2020 are:

- How can citizens, civic society, and the AI Assistant enforce laws and aid in governmental decision-making?

- Civic societies: how can civic society, citizens, and the AI Assistant influence decisions by governments and businesses to enforce common values and standards?

Discussion to build the AIWS Social Contract 2020 continues among contributors including world leaders, distinguished thinkers, and AIWS Young Leaders. The official version of the AIWS Social Contract 2020 will be launched on April 28, 2020, at Harvard University with the participation of world leaders, distinguished thinkers, and AIWS Young Leaders.

by Editor | Dec 2, 2019 | News

A three-term governor of Massachusetts known for his success in public transportation, Governor Michael Dukakis, Co-founder and Chair of the Boston Global Forum, recently told Boston.com: “Let’s just get the damn public transportation system working well” and “The answer is clear in my judgment: Don’t spend any more money on highways.”

Governor Dukakis has been fighting highway development since the 1960s. He said, “This city would have been paved over if we let these guys do what they wanted to do. And by the time I left office, we had the best public transportation system in the country.”

Governor Dukakis touted improvements to the MBTA during his time in office, including new cars and the Red Line extension to Alewife. But he said the transit system was neglected by subsequent administrations, amid projects like the Big Dig. Now, amid the region’s congestion crisis, Dukakis says a simple, renewed focus on the MBTA could go a long way toward increasing ridership and freeing up the roads.

The original article can be found here.