by Editor | Dec 30, 2019 | News

Techiexperts' highlights of the trends and predictions for AI in 2020 include:

Integration of Blockchain, IoT, and Artificial Intelligence

Integrating AI with other technologies was one of the main concerns in the past. Today, experts have found ways to fuse Artificial Intelligence with other systems more effectively.

One of these advancements is the activation and regulation of certain devices, which enables you to gather real-time data. In 2020, you can run more AI features in vehicles. The same goes for blockchains, which can use AI to generate certain routines that address security, scalability, and other pressing concerns.

The AIWS Innovation Network will connect key AI actors and provide services that can assist in the development of AI.

By key actors, we refer to influential AI end users, including governments, corporations and non-profit organizations, and to AI experts, including thought leaders, scholars, creators, and innovators.

By services, we refer to such activities as providing advice on AI projects, assisting in developing solutions to AI problems, offering training in AI subjects, and connecting AI event organizers with speakers.

Services will also include periodic conferences, forums, and roundtables on AI topics, as well as the development of AI apps.

Improved AI System Assistance

This is one of the biggest improvements you should expect in 2020. Experts have predicted that AI system assistance will become more streamlined, including the automation of customer service and other sales tasks.

While we now have popular assistants powered by AI such as Siri, Alexa, Waze, and Cortana, more and more investors are still working with some of the best AI software developers.

By next year, you can expect more apps and programs with Artificial Intelligence systems, which enable you to perform various tasks. In fact, ComScore has said that more than half of all searches could be performed using voice commands by 2020.

The AIWS Social Contract 2020 introduced seven centers of power, one of which is AI Assistants.

The original article can be found here.

by Editor | Dec 30, 2019 | News

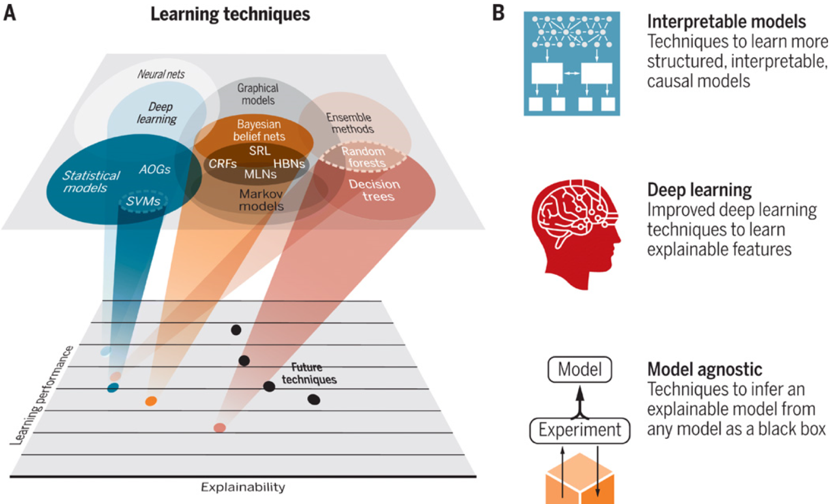

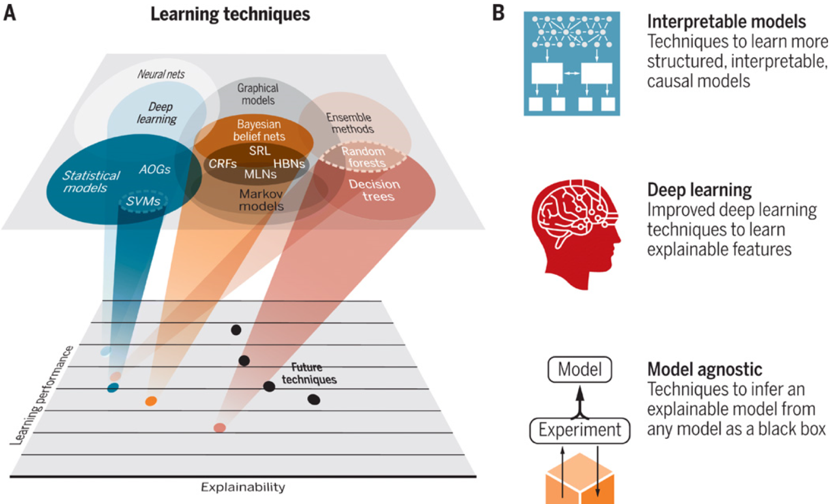

Recent successes in machine learning (ML) have led to a new wave of artificial intelligence (AI) applications that offer extensive benefits to a diverse range of fields. However, many of these systems are not able to explain their autonomous decisions and actions to human users. Explanations may not be essential for certain AI applications, and some AI researchers argue that the emphasis on explanation is misplaced, too difficult to achieve, and perhaps unnecessary. However, for many critical applications in defense, medicine, finance, and law, explanations are essential for users to understand, trust, and effectively manage these new, artificially intelligent partners.

Recent AI successes are largely attributed to new ML techniques that construct models in their internal representations. These include support vector machines (SVMs), random forests, probabilistic graphical models, reinforcement learning (RL), and deep learning (DL) neural networks. Although these models exhibit high performance, they are opaque in terms of explainability. There may be inherent conflict between ML performance (e.g., predictive accuracy) and explainability. Often, the highest performing methods (e.g., DL) are the least explainable, and the most explainable (e.g., decision trees) are the least accurate.

The purpose of an explainable AI (XAI) system is to make its behavior more intelligible to humans by providing explanations. There are some general principles to help create effective, more human-understandable AI systems: The XAI system should be able to explain its capabilities and understandings; explain what it has done, what it is doing now, and what will happen next; and disclose the salient information that it is acting on.
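As a minimal sketch of the last principle, disclosing the salient information a system is acting on, the toy scorer below returns its decision together with each input's contribution, ranked by impact. The feature names, weights, bias, and threshold are hypothetical values chosen for illustration; they do not come from the article or from any real system, and a simple linear score stands in for whatever model a real application would use.

```python
from dataclasses import dataclass

# Hypothetical weights for a toy lending score (illustrative only).
WEIGHTS = {"income": 0.5, "debt": -0.8, "years_employed": 0.3}
BIAS = -0.2
THRESHOLD = 0.0


@dataclass
class Explanation:
    decision: str
    score: float
    contributions: list  # (feature, contribution), largest absolute impact first


def explain(features):
    """Score the input and report how much each feature moved the score."""
    contributions = [(name, WEIGHTS[name] * features[name]) for name in WEIGHTS]
    score = BIAS + sum(value for _, value in contributions)
    decision = "approve" if score >= THRESHOLD else "decline"
    # Rank features so the most influential one is disclosed first.
    contributions.sort(key=lambda item: abs(item[1]), reverse=True)
    return Explanation(decision, score, contributions)


result = explain({"income": 1.0, "debt": 1.5, "years_employed": 2.0})
print(result.decision)
for name, value in result.contributions:
    print(f"{name}: {value:+.2f}")
```

Because the score is a plain weighted sum, every contribution can be read off directly. This is the kind of transparency that more accurate but opaque models, such as deep networks, generally do not offer without additional explanation techniques.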

With regard to explainable AI applications, the Artificial Intelligence World Society (AIWS) has also designed the AIWS Ethics and Practices Index to track AI activities in terms of transparency, regulation, promotion, and implementation for the constructive use of AI.

The original article can be found here.

by Editor | Dec 30, 2019 | News

By now, almost everyone knows a little bit about artificial intelligence, but most people aren’t tech experts, and many may not be aware of just how big an impact AI has. The truth is most consumers interact with technology incorporating AI every day. From the searches we perform in Google to the advertisements we see on social media, AI is an ever-present feature of our lives.

To help nonspecialists grasp the degree to which AI has been woven into the fabric of modern society, 12 experts from Forbes Technology Council detail some applications of AI that many may not be aware of.

- Offering Better Customer Service

- Personalizing The Shopping Experience

- Making Recruiting More Efficient

- Keeping Internet Services Running Smoothly

- Protecting Your Finances

- Enhancing Vehicle Safety

- Converting Handwritten Text To Machine-Readable Code

- Improving Agriculture Worldwide

- Helping Humanitarian Efforts

- Keeping Security Companies Safe From Cyberattacks

- Improving Video Surveillance Capabilities

- Altering Our Trust In Information

According to the Michael Dukakis Institute for Leadership and Innovation (MDI), AI applications matter in daily life: they help people achieve well-being and happiness, relieve them of resource constraints and arbitrary or inflexible rules and processes, and solve important issues, such as the SDGs.

The original article can be found here.

by Editor | Dec 23, 2019 | News

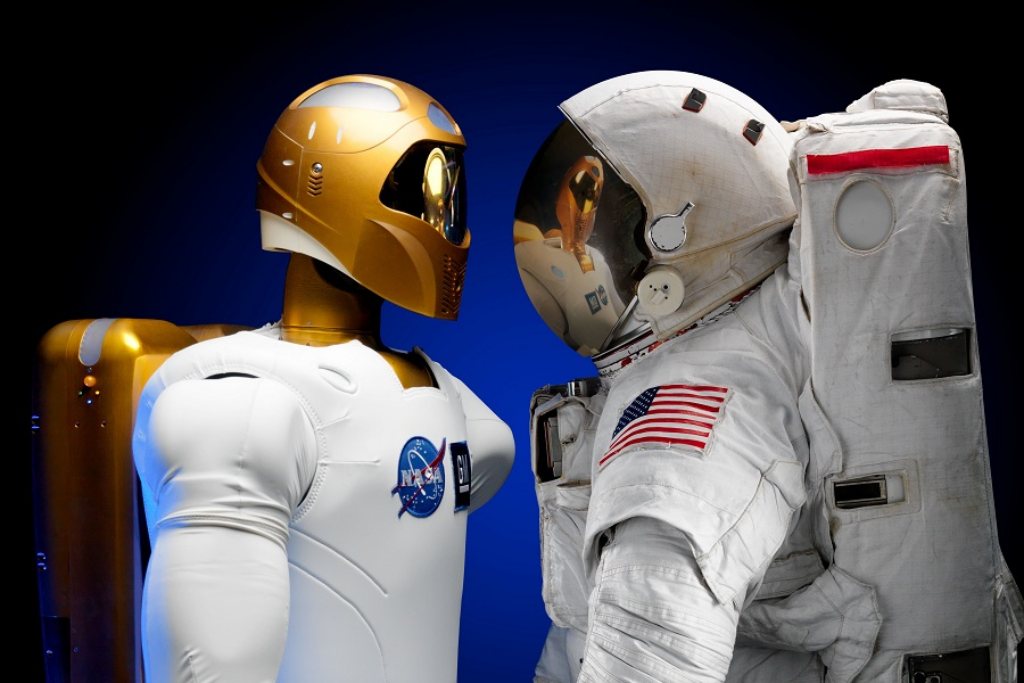

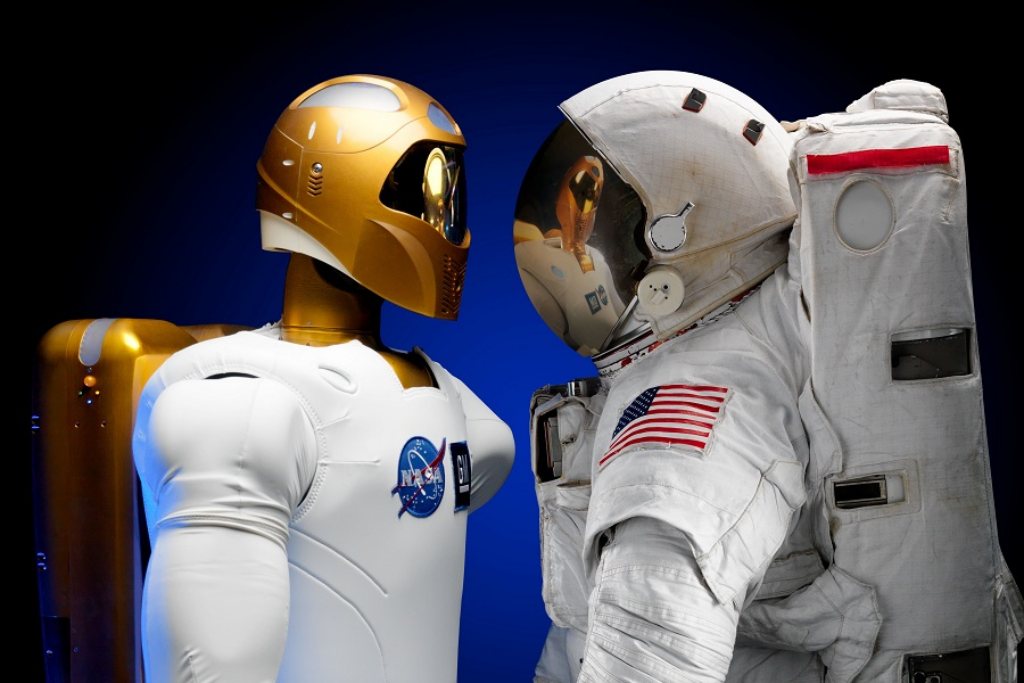

We already see algorithms at work and hear the headlines about artificial intelligence (AI), yet many senior managers remain skeptical and are ignoring the issue. Whilst the robot or cyborg may immediately come to mind, neither will emerge as a single change that immediately replaces millions of jobs; rather, this is going to occur in waves over an extended timeframe. AI will likely be adopted only once it proves to be functional and safe, with favourable business economics and customer acceptance. It is likely that current algorithms and basic AI functions will advance with the upcoming 5G rollout and faster technology, and the first functional robot may soon emerge.

As with humans, AI and robots will have bias that arises from their programmes and functions, and my conference audiences regularly raise AI bias and error as an issue to consider now. Some may argue bias exists in humans as well, yet AI bias may be far more serious unless it is recognised and controlled. Sure, every single human has some bias based on their education, family, experiences and socio-economic background, but those are unique to the individual. AI bias will likely be systematic, derived from the system and its originating programmer, so that everyone purchasing it inherits the same base bias, which can permeate through organisations, industries and even the world. It is possible that bias could remain hidden, potentially locking out millions of workers without managers even knowing about it.

In a competitive, fast-moving age it may be tempting for management to offer up the efficiencies and savings to their boards, customers and staff. However, AI adoption without due diligence could lead to value losses, shareholder revolt and customer loss if bias and error are discovered some time after its adoption. If AI is revealed to have significant error and bias, it may also lead to government enquiries and tighter laws, limiting its development and functionality in the future. Managers should be aware that AI rollout comes with significant risk if there is insufficient due diligence, or if it lacks an assessment against broader societal and ethical frameworks. The past business assessment tools of financial paybacks and net present values should no longer be the sole determinants of investment in this new age.

With regard to AI ethics, the Michael Dukakis Institute for Leadership and Innovation (MDI) has established the Artificial Intelligence World Society (AIWS) to promote ethical norms and practices by collaborating with think tanks, universities, non-profits, firms, and other entities that share its commitment to the development and use of positive AI for society.

The original article can be found here.

by Editor | Dec 23, 2019 | News

GE Healthcare launched more than 30 new AI applications at RSNA, as well as a new CT solution and the first contrast-enhanced mammography solution for biopsy.

The healthcare tech giant showcased algorithms designed for modalities across its entire equipment portfolio, including the new Revolution Maxima, a CT scanner that can automatically align patients to the isocenter of the bore and determine the location of landmarks for different CT exams.

“Before, the technologist would have to use the foot pedals to raise the patient and move them into the bore. They would have to set the laser, and make sure they had set the right landmarks and the right center position,” Jamie McCoy, chief marketing officer for molecular imaging and computed tomography at GE Healthcare, told HCB News. “Basically we’ve eliminated all those steps, and with the press of one button you can automatically position the patient in the bore in the exact right spot for every exam.”

According to the Michael Dukakis Institute for Leadership and Innovation (MDI), the AI World Society (AIWS) also promotes AI applications in healthcare to help people achieve well-being and happiness, relieve them of resource constraints and arbitrary or inflexible rules and processes, and solve important issues, such as the SDGs.

The original article can be found here.

by Editor | Dec 16, 2019 | Event Updates

On December 12, at Loeb House, Harvard University, Japanese Minister of Defense Taro Kono presented the AIWS Distinguished Lecture, moderated by Governor Michael Dukakis, Chair of the Boston Global Forum (BGF). The audience included distinguished thinkers from Harvard, MIT, and the Naval War College, along with Massachusetts leaders and business leaders. Minister Kono spoke about the Japan Self-Defense Forces’ efforts in cyberspace. This was the keynote speech of the Global Cybersecurity Day Symposium 2019.

The Boston Global Forum was founded on December 12, 2012, and since December 12, 2015, the date has been observed as Global Cybersecurity Day, with a statement from United Nations Secretary-General Ban Ki-moon.

Prime ministers and presidents have given speeches on Global Cybersecurity Day and expressed support for this BGF initiative.

by Editor | Dec 16, 2019 | Event Updates

At the Global Cybersecurity Day Symposium on December 12, 2019, Marc Rotenberg, a mentor of the AIWS Innovation Network (AIWS-IN) and a contributor to the AIWS Social Contract 2020, presented on the topic “To Ensure Transparency of AI Policymaking in the US and China”.

Marc's presentation prompted debate among the discussants, with special focus on “AI Policy and Public Participation: EPIC v. Natl. Sec. Comm. on AI”, “AI Techniques and Pre-trial Risk Assessment”, and “The Next Campaign: AI and Face Surveillance”.

Marc and EPIC will collaborate with the Boston Global Forum to build the AIWS Social Contract 2020 and present it at the AIWS Summit 2020 on April 28-29 at Harvard University.

by Editor | Dec 16, 2019 | News

This past year, revelations about the plight of Muslim Uighurs in China have come to light, with massive-scale detentions and human rights violations of this ethnic minority of the Chinese population. Last month, additional classified Chinese government cables revealed that this policy of oppression was powered by artificial intelligence (AI): that algorithms fueled by massive data collection of the Chinese population were used to make decisions regarding detention and treatment of individuals. China failing to uphold the fundamental and inalienable human rights of its population is not new, and indeed, tyranny is as old as history. But the Chinese government is harnessing new technology to do wrong more efficiently.

Concerns about how governments can leverage AI also extend to the waging of war. Two major concerns about the application of AI to warfare are ethics (is it the right thing to do?) and safety (will civilians or friendly forces be harmed, or will the use of AI lead to accidental escalation leading to conflict?). With the United States, Russia, and China all signaling that AI is a transformative technology central to their national security strategy, with their militaries planning to move ahead with military applications of AI quickly, should this development raise the same kinds of concerns as China’s use of AI against its own population? In an era where Russia targets hospitals in Syria with airstrikes in blatant violation of international law, and indeed of basic humanity, could AI be used unethically to conduct war crimes more efficiently? Will AI in war endanger innocent civilians as well as protected entities such as hospitals?

With regard to AI ethics and safety, the Michael Dukakis Institute for Leadership and Innovation (MDI) and the Artificial Intelligence World Society (AIWS) have developed the AIWS Ethics and Practice Index to measure the extent to which a government’s AI activities respect human values and contribute to the constructive use of AI. The Index has four components by which government performance is assessed: transparency, regulation, promotion, and implementation.

The original article can be found here.

by Editor | Dec 16, 2019 | News

Evans Data Corporation’s latest study of AI and machine learning development provides insights into the challenges developers face when building enterprise-level, high-quality AI apps. The study, Artificial Intelligence and Machine Learning 2019, Volume 2, is based on interviews with 500 AI and machine learning developers globally. Focusing on the attitudes, adoption patterns and intentions of AI and machine learning developers worldwide, the 187-page study is one of the most comprehensive of its kind. What makes the study noteworthy is the depth of its research into the challenges AI and machine learning developers face today.

- AI and machine learning developers most often rely on language and speech-based Application Programmer Interfaces (APIs).

- 50% say gathering or generating data is the most challenging aspect of continually training and fine-tuning AI models.

- AI and machine learning developers say that the complexity of managing operations is the top challenge they face when developing AI applications.

In addition to supporting AI and machine learning development for enterprise applications, the Michael Dukakis Institute for Leadership and Innovation (MDI) has established the AI World Society (AIWS) to invite participation and collaboration from think tanks, universities, non-profits, firms, and enterprises that share its commitment to the constructive development of AI.

The original article can be found here.