by BGF | Dec 31, 2018 | News

On December 12, 2018, Shivam Parikh of the University at Albany’s College of Engineering and Applied Sciences presented the results of his work with Associate Professor Pradeep Atrey on detecting fake news at Global Cybersecurity Day 2018, an event organized by the Boston Global Forum (BGF) and the Michael Dukakis Institute (MDI) at Loeb House, Harvard.

The authenticity of information has become a longstanding issue affecting businesses and society, and many researchers around the globe have been trying to understand the basic characteristics of the problem. Parikh and Atrey’s study examines the challenges of detecting fake news and reviews existing detection approaches.

“Fake news can be anything that is designed to change people’s opinion, convince its readers to believe in something that is not true,” said Parikh, who works as a systems developer analyst for ITS at the University at Albany. He showed specific examples of fake news and emphasized the serious impact of this growing problem: “With social media on the rise, fake news stories are very reachable and have a very high impact factor.”

Parikh also presented a categorization of existing approaches, along with their key characteristics, advantages, and limitations. The methods covered were:

- Linguistic Features based Methods: These approaches extract key linguistic features from fake news;

- Deception Modeling based Methods: These rely on theoretical approaches to cluster deceptive stories apart from truthful ones;

- Clustering based Methods: Clustering is a well-known method for comparing and contrasting large amounts of data;

- Predictive Modeling based Methods: These follow a logistic regression model trained on a data set of 100 out of 132 news reports;

- Non-Text Cues based Methods: These focus mainly on the non-text content of the news.
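To make the predictive-modeling idea concrete, here is a minimal sketch of a logistic regression classifier over linguistic cues. It is not Parikh and Atrey’s method: the two features (exclamation-mark density and share of all-caps words), the function names, and the six toy headlines are all hypothetical, chosen only to show how a model fitted to labeled reports assigns a probability to new text. A real evaluation would also hold out part of the data for testing, much as the approach described above trained on 100 of 132 news reports.

```python
import math

def extract_features(text):
    """Hypothetical linguistic cues: exclamation-mark density and share of all-caps words."""
    words = text.split()
    n = max(len(words), 1)
    exclaim = text.count("!") / n
    caps = sum(1 for w in words if len(w) > 1 and w.isupper()) / n
    return [exclaim, caps]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(X, y, lr=0.5, epochs=2000):
    """Fit weights and bias by batch gradient descent on the log-loss."""
    w = [0.0] * len(X[0])
    b = 0.0
    m = len(X)
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for xi, yi in zip(X, y):
            # Prediction error for one example: p(fake) minus the true label.
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j, xj in enumerate(xi):
                grad_w[j] += err * xj
            grad_b += err
        w = [wj - lr * gj / m for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / m
    return w, b

def predict_proba(w, b, text):
    """Estimated probability that a headline is fake (label 1)."""
    x = extract_features(text)
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)

# Toy training data (hypothetical): 1 = fake, 0 = genuine.
headlines = [
    ("SHOCKING!!! You WON'T believe this!", 1),
    ("BREAKING: Aliens CONFIRMED!!!", 1),
    ("MIRACLE cure DOCTORS hate!!", 1),
    ("The council approved the budget on Tuesday.", 0),
    ("Researchers published the results in a journal.", 0),
    ("The company reported quarterly earnings.", 0),
]
X = [extract_features(t) for t, _ in headlines]
y = [label for _, label in headlines]
w, b = train_logistic(X, y)

print(round(predict_proba(w, b, "FAKE!!! NEWS!!!"), 2))
print(round(predict_proba(w, b, "The minister gave a speech."), 2))
```

Plain gradient descent keeps the sketch dependency-free; in practice a library implementation such as scikit-learn’s `LogisticRegression` would be the usual choice, and a richer feature set would be needed for anything beyond illustration.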

In conclusion, Parikh highlighted four key open research challenges that can guide future research on fake news detection:

- Multi-modal Data Set: Researchers have an opportunity to create a multi-modal data set that covers all fake news data types.

- Multi-modal Verification Method: This calls for verifying not just language, but also images, audio, embedded content (e.g., embedded videos, tweets, Facebook posts), and hyperlinks (e.g., links to different URLs).

- Source Verification: Existing proposed methods do not verify the source of a news story. This calls for a new fake news detection method that performs source verification and considers the source when evaluating fake news stories.

- Author Credibility Check: One existing method detects fake news from the tone of a news story. A further research challenge is an author credibility check, which would allow a system to detect a chain of news stories written by the same author or group of authors.

Parikh continues to work on these issues and believes that a better understanding of the field will help improve the accuracy of fake news and misinformation detection in the future.

by BGF | Dec 31, 2018 | News

A recently published report, Off the Leash: The Development of Autonomous Military Drones in the UK, reveals that the UK’s Defence and Security Accelerator (DASA) is funding research into autonomous lethal drones.

The report indicates that the Ministry of Defence (MoD) has funded many autonomous weapon programs. Among these projects, it highlights the Taranis drone, the culmination of more than ten years of work by BAE Systems, a British multinational defense, security, and aerospace company. The Taranis drone is valued at £200 million and is capable of flying, planning its route, and locating and killing targets without direct human input.

Despite concrete evidence, the MoD denied developing such weapons: “There is no intent within the MoD to develop weapon systems that operate entirely without human input. Our weapons will always be under human control as an absolute guarantee of oversight, authority, and accountability,” said an MoD representative.

The study’s findings raise fears that such robots will be deployed in the future and that an arms race will follow. “Without a ban, there will be an arms race to develop increasingly capable autonomous weapons. These will be weapons of mass destruction. One programmer will be able to control a whole army,” said Toby Walsh, Scientia Professor of AI at UNSW Sydney.

In response to this threat, a global consensus on AI development is needed to prevent the development of weapons or technologies that are likely to harm humanity or whose purpose is to cause or facilitate injury to people. Together with the AIWS Standards and Practice Committee, the Michael Dukakis Institute is working to draw the attention of the UN and OECD countries to the call for an AI Peace Treaty that prohibits the creation, stockpiling, and use of such weapons.

by BGF | Dec 31, 2018 | News

IBM has created the first prototype of a fingernail sensor that can monitor a person’s health condition.

Skin-based sensors are commonly used to monitor motion, muscle activity, and nerve activity; they can also indicate emotional state.

IBM’s new prototype has a unique feature: it analyzes how the fingernail bends when we use our fingers for tactile sensing of pressure, temperature, and surface texture, and for gripping, grasping, flexing, and extending our fingers. “This deformation is on the order of single digit microns and not visible to the naked eye,” said Katsuyuki Sakuma, from IBM’s Thomas J. Watson Research Center in New York.

With strain gauges attached to the fingernail, the device collects data, including accelerometer readings, and sends it to a smartwatch running machine-learning algorithms. These algorithms evaluate bradykinesia, tremor, and dyskinesia to detect Parkinson’s disease. The work could also contribute to the development of “a new device modeled on the structure of the fingertip that could one day help quadriplegics communicate,” noted Sakuma.

However, wearing an AI device on our hands to detect symptoms might have unwanted consequences if it is not deployed carefully. To ensure user safety, data governance, accountability, development standards, and the responsibility of all practitioners involved directly or indirectly in creating AI must be carefully considered, according to Layer 3 of the AIWS 7-Layer Model developed by the Michael Dukakis Institute.

by BGF | Dec 31, 2018 | News

Sunspring debuted at the Sci-Fi London Film Festival in 2016. Set in a bleak world with extremely high unemployment, the film attracted a number of fans. Its most surprising feature, however, was its screenwriter: an artificial intelligence bot.

A common verdict on the film was “amusing but strange”: viewers noticed that the characters’ lines sounded unrelated and random. Nevertheless, the AI’s work on the background music and visual images was excellent. This spurred the idea of human-machine collaboration in storytelling.

The Massachusetts Institute of Technology (MIT) Media Lab recently looked into the potential of this combination for video storytelling. The research started from the finding that a story’s emotional arc greatly affects audience engagement: the most compelling stories usually provoke a strong emotional response.

Working with McKinsey’s Consumer Tech and Media team, the researchers programmed a machine-learning algorithm to act as a moviegoer. Using deep neural networks, it estimates the positive or negative emotional content of each second of footage drawn from short videos, movies, TV, and so on. After “watching” the opening of Up, a popular animated film, the computer drew a graph of its emotional arc based on the visuals and sound.

After the model had watched thousands of videos and constructed an emotional arc for each one, the researchers classified the stories into families of arcs. Using one data set of more than 500 Hollywood movies and another of almost 1,500 short films found on Vimeo, they divided the arcs into five families. The next step was to analyze and predict audience engagement on social media. When several families were tested, the two story families that attracted the most engagement both culminated in a positive emotional bang at the climax, suggesting that positive emotions generate greater engagement. These stories drew not only more comments, but also longer and more passionate ones.
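The step of grouping arcs into families can be illustrated with a small clustering sketch. This is not the MIT Media Lab pipeline: it assumes the per-second positive/negative scores have already been produced (the part the deep neural networks handle), resamples each arc to a common length, and groups the arcs with plain k-means. The function names, the ten-point resolution, and the four toy arcs are hypothetical, and the sketch uses two families rather than the study’s five.

```python
import math

def resample(arc, n=10):
    """Linearly interpolate a per-second valence series (-1 negative .. +1 positive) to n points."""
    if len(arc) == 1:
        return [float(arc[0])] * n
    out = []
    for i in range(n):
        pos = i * (len(arc) - 1) / (n - 1)
        lo = int(math.floor(pos))
        hi = min(lo + 1, len(arc) - 1)
        frac = pos - lo
        out.append(arc[lo] * (1 - frac) + arc[hi] * frac)
    return out

def dist2(a, b):
    """Squared Euclidean distance between two equal-length arcs."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def kmeans(points, k, iters=50):
    """Plain k-means; the first k arcs serve as initial centroids (a simplification)."""
    centroids = [list(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iters):
        # Assign each arc to its nearest centroid.
        labels = [min(range(k), key=lambda c: dist2(p, centroids[c])) for p in points]
        # Move each centroid to the mean of the arcs assigned to it.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids

# Hypothetical valence scores of different lengths: two rising arcs, two falling arcs.
raw_arcs = [
    [-1.0, 0.0, 1.0],
    [1.0, 0.0, -1.0],
    [-0.8, -0.2, 0.5, 0.9],
    [0.9, 0.3, -0.5, -1.0],
]
arcs = [resample(a) for a in raw_arcs]
labels, centroids = kmeans(arcs, k=2)
print(labels)
for c, centroid in enumerate(centroids):
    print(c, "ends positive" if centroid[-1] > 0 else "ends negative")
```

Checking whether each family’s centroid ends high or low is a toy stand-in for the observation above that the most engaging arcs end on a positive emotional peak; a production system would use a more careful initialization and a distance suited to time series.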

Human-machine collaboration is no longer science fiction, but such cooperation also requires regulation. Developing rules and ethical guidelines for AI is what AIWS is working on: with the development of Layer 1, AIWS hopes to establish a responsible code of conduct for AI Citizens to ensure that AI is safely integrated into human society.

by BGF | Dec 31, 2018 | News

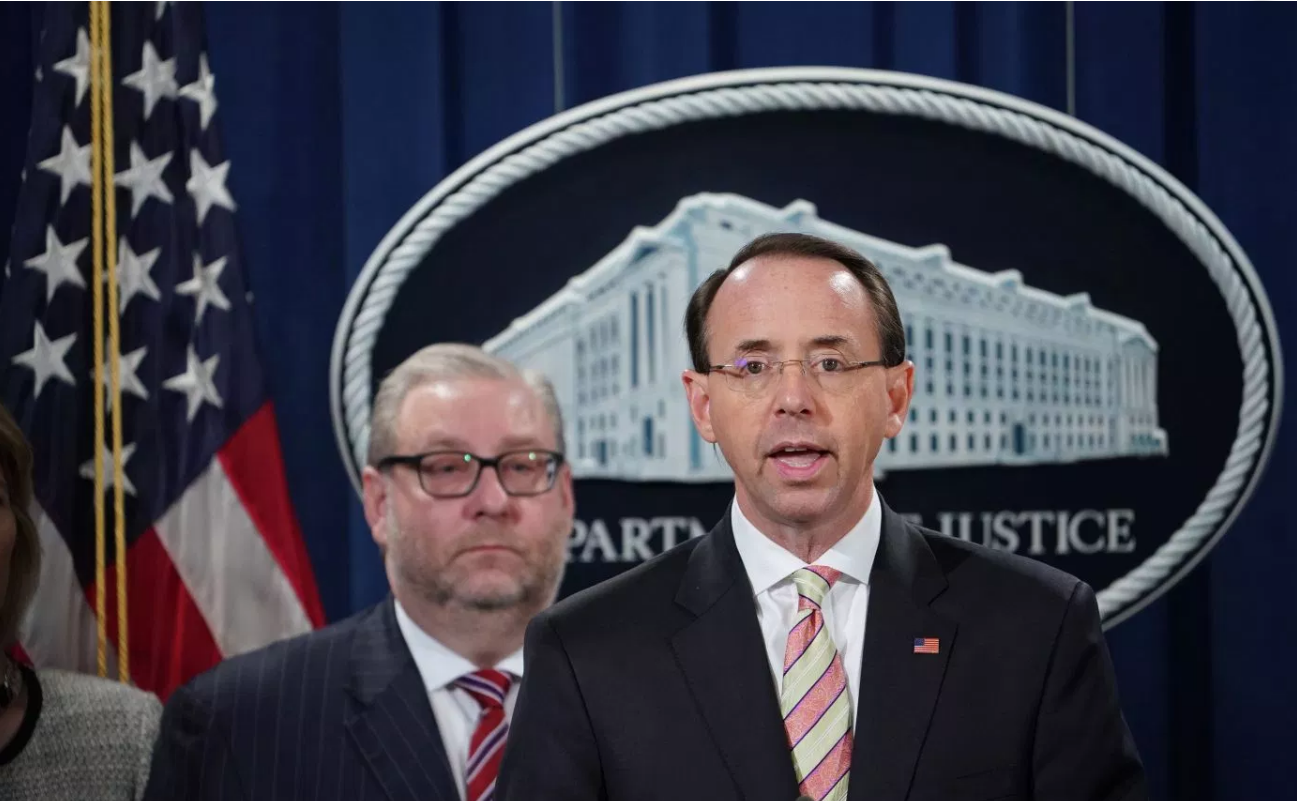

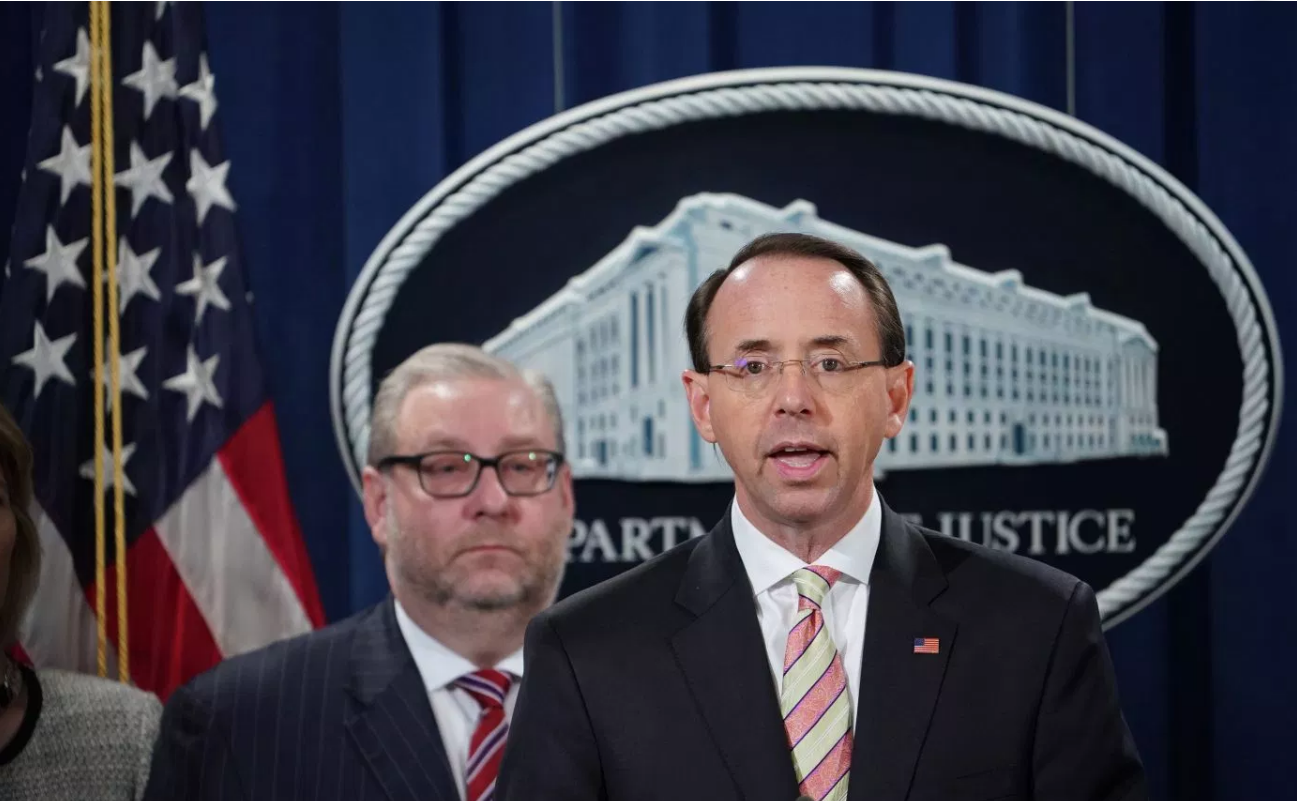

Zhu Hua and Zhang Shilong, nationals and residents of China, were charged with stealing trade secrets and other sensitive information from U.S. tech companies in the latest indictment unveiled by the Justice Department.

The two alleged hackers were charged on three counts: computer hacking, conspiracy to commit fraud, and aggravated identity theft.

Prosecutors alleged that the two were part of a Beijing-backed group dubbed APT10, which had worked with many security companies in China. The men are accused of stealing “hundreds of gigabytes of sensitive data” in aviation, space and satellite technology, manufacturing, and many other key sectors. The victims were not named, but the hackers reportedly targeted customer data at Hewlett Packard Enterprise and IBM, as well as personal information, including salaries, email addresses, birth dates, and Social Security numbers, of 100,000 U.S. Navy personnel.

Dmitri Alperovitch, Chief Technology Officer of CrowdStrike, which has tracked APT10 in recent years, called the Justice Department’s action against China “unprecedented and encouraging.” BGF honored Alperovitch as Practitioner in Cybersecurity in 2016.

“Today’s announcement of indictments against Ministry of State Security (MSS), whom we deem now to be the most active Chinese cyber threat actor, is another step in a campaign that has been waged to indicate to China that its blatant theft of IP is unacceptable and will not be tolerated,” he said. “While this action alone will not likely solve the issue and companies in US, Canada, Europe, Australia and Japan will continue to be targeted by MSS for industrial espionage, it is an important element in raising the cost and isolating them internationally.”

by BGF | Dec 31, 2018 | News

On 15-16 December, the Doha Forum 2018 took place, offering a forward-looking platform for policymakers from around the world to discuss how to enhance and optimize cooperation across different perspectives, fields of expertise, countries, and organizations. Vaira Vike-Freiberga, President of the World Leadership Alliance – Club de Madrid and a member of BGF’s Board of Thinkers, discussed the challenges of implementing the Sustainable Development Goals (SDGs) in a plenary session at the Forum.

Vaira Vike-Freiberga was a member of the Independent Team of Advisors, which made recommendations on the changing role of the UN development system in light of the 2030 Agenda, the development program meant to implement the SDGs. She took part in the discussion session with Achim Steiner, Administrator of the United Nations Development Programme (UNDP).

Key points in her recommendations for the 2030 Agenda include:

Achieving the SDGs will take commitment and belief

Beyond the financial solutions addressed by Achim Steiner, she argued that achieving the goals also requires commitment and belief. “It takes commitment and belief in the ability of the human race to sustain a planet that it will not self-destroy, but that will continue to thrive and develop,” said Vike-Freiberga.

A common Agenda for states with different priorities

With 193 UN Member States, there is no way all of them could take the same approach to adopting the 2030 Agenda. “It would take a bold soul to claim that all nations sitting at the UN General Assembly have hearts beating at the same rhythms and minds committed to the same ideals,” said the President of the World Leadership Alliance.

Technology: ally or foe?

Turning from the priorities of states to technology, she emphasized the potential consequences of unequal access to technology between states, as well as its promise: “Modern technologies will allow people in remote areas to have access to education. If you couldn’t bring teachers to the students, technology can bring them the information that they need.”

The role of the SDGs in global governance

Rising inequality, and the fact that the “GINI index is growing in both developed and undeveloped countries,” mean that a common agenda for development is even more necessary. “If this is not reversed, a significant part of the world will not have reached the SDGs by 2030,” said Vaira Vike-Freiberga.

by BGF | Dec 24, 2018 | News

On December 16, 2018, Mr. Nguyen Anh Tuan, CEO of the Boston Global Forum and Director of the Michael Dukakis Institute for Leadership and Innovation, appeared on the talk show “Leadership and Innovative Spirit in the era of Artificial Intelligence” held by Dalat University, Vietnam. The host was journalist Ta Bich Loan, Director of Vietnam Television’s VTV3 channel.

The program was organized by the leadership of Dalat University and broadcast on Lam Dong Television and VTV3. As an alumnus of Dalat University and currently CEO of the Boston Global Forum and Director of the Michael Dukakis Institute for Leadership and Innovation (US), Mr. Nguyen Anh Tuan was invited as a guest speaker to share his ideas with students and professionals at Dalat University.

Mr. Nguyen Anh Tuan not only explained to his audience the concept and importance of artificial intelligence today, but also noted that a spirit of innovation and the daring to think and act differently are the defining qualities of great leaders who capture the trends of the future and lead in the society of artificial intelligence.

The conversation was respectful and at times funny. Toward the end of the interview, journalist Ta Bich Loan and Mr. Nguyen Anh Tuan discussed the spirit of leadership and why the era of artificial intelligence needs a spirit of leadership and innovation.

The event is considered one of the university’s best-attended events in recent years.

by BGF | Dec 17, 2018 | News

On December 12, 2018, Global Cybersecurity Day 2018 was held at Loeb House, Harvard University, moderated by Governor Michael Dukakis, Chairman of the Boston Global Forum (BGF) and the Michael Dukakis Institute (MDI).

The goal of Global Cybersecurity Day is to inspire a shared responsibility among the world’s citizens to protect the safety and transparency of the Internet. This year’s conference revolved around the theme “AI solutions solve disinformation.” During the discussion, experts explored the current state of cybersecurity and the threat posed by disinformation, anonymous sources, and fake news, as well as the role AI can play as an effective defense against these threats to truth and the principles of democracy.

Highlights of the event included online speeches by prominent speakers: President of Finland Sauli Niinistö, Japanese Minister for Foreign Affairs Taro Kono, and Rt. Hon. Liam Byrne MP, Member of Parliament for Birmingham. Liam Byrne delivered the first AI World Society Distinguished Lecture, which made a strong impression on the audience. The speakers described the current situation and called for greater public awareness of staying safe on the Internet.

At this event, Dr. Thomas Creely, Associate Professor of Ethics and Director of the Ethics & Emerging Military Technology Graduate Program at the U.S. Naval War College, presented the AIWS Report on AI Ethics on behalf of its authors and published the Government AIWS Ethics Index. The proposal is expected to help build common standards for an AI society around the world, spanning technology, laws, and conventions, to ensure interoperability among the frameworks and approaches of different countries.

The presentation on fake news by Cameron Hickey of the Information Disorder Lab at the Shorenstein Center on Media, Politics and Public Policy also drew much of the audience’s attention. It raised awareness of the definition and classification of information disorder and its challenging impact on our society.

by BGF | Dec 17, 2018 | AI World Society Distinguished Lecture, Michael Dukakis Institute

Rt. Hon. Liam Byrne MP, Member of Parliament for Birmingham, Hodge Hill, Shadow Digital Minister, Chair of the All-Party Parliamentary Group on Inclusive Growth, delivered the first AI World Society Distinguished Lecture at Global Cybersecurity Day 2018.

Byrne’s lecture was held at Loeb House, Harvard University, one of the world’s most prestigious universities. Although he could not attend the symposium in person, he delivered his lecture virtually and with great spirit. It inspired the audience, most of whom were scholars from Harvard University, MIT, Tufts University, and other institutions. In the speech, he focused on the changes that have occurred in our society over the last decade, how they have affected society and markets, and the risks and challenges they bring. In conclusion, he called on lawmakers and changemakers to join forces to reshape those constraints.

After his lively lecture, Liam Byrne MP answered the scholars’ questions from the conference directly online. The discussion grew animated around challenging issues: the slowness of regulatory mechanisms in adapting to change, the mindset of tech companies toward incorporating social benefits, the definition of digital rights, and more.

The AI World Society Distinguished Lecture series was established to honor those who have made outstanding contributions related to the Artificial Intelligence World Society (AIWS) 7-Layer Model, with each contribution dedicated to one of the seven layers of the AIWS Initiative. The honor also helps introduce these outstanding honorees and raise awareness of their dedication among members of the global elite community.