by BGF | Dec 3, 2018 | News

AI has been thriving and living among us for the last decade, and it has greatly enhanced human efficiency. Even so, there is still a widespread fear of AI, rooted in common misconceptions. Here are three key misconceptions that need to be addressed.

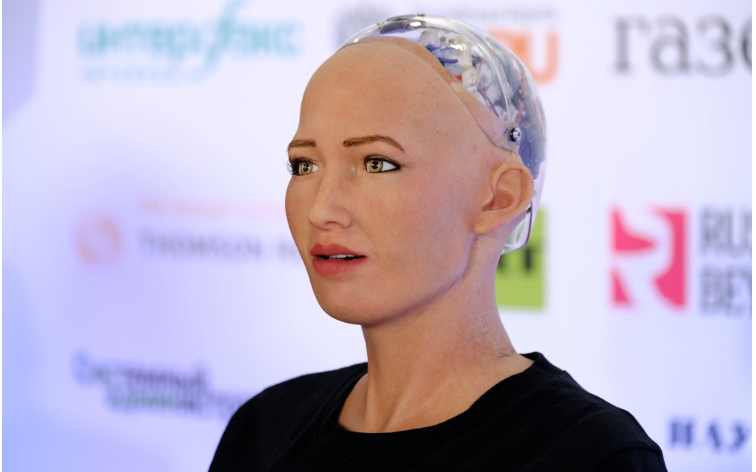

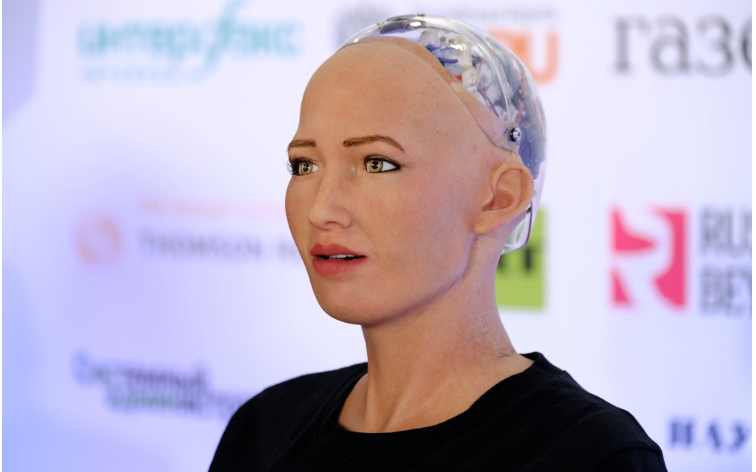

AI is a robot

The entertainment industry is to blame for the popular notion of AI. When we think of AI, we picture robots such as Bicentennial Man, Wall-E, or Sophia, the digital humanoid. However, AI is much more than that. It can anticipate natural disasters and diseases, do chores, test the efficacy of drugs, and more. It can also increase efficiency and productivity at work.

AI is going to replace humans

Because negative predictions dominate the news, people are frightened by statistics. As a result, they believe AI is going to take over the human job market. In fact, AI is developed by humans to serve human needs. It is excellent at repetitive tasks, but the same is not true for empathy, judgment, and general life experience.

Another reason machines cannot replace humans is that a rise in the number of machines also means a rise in the jobs surrounding them, since the operation of AI requires human intervention and supervision.

AI is dangerous in the wrong hands

AI can be used for good or evil, as with any other technology. However, the problem is never the technology itself; it is the people who use it. Taking the 2016 election as an example, it is the responsibility of those who run a platform to ensure its users’ safety.

To sum up, we are developing AI, and it is up to us to make sure AI makes the best decisions. Giving AI the ability to do all of these tasks requires monitoring, regulation, and ethical frameworks, which the Michael Dukakis Institute is working on.

by BGF | Dec 3, 2018 | News

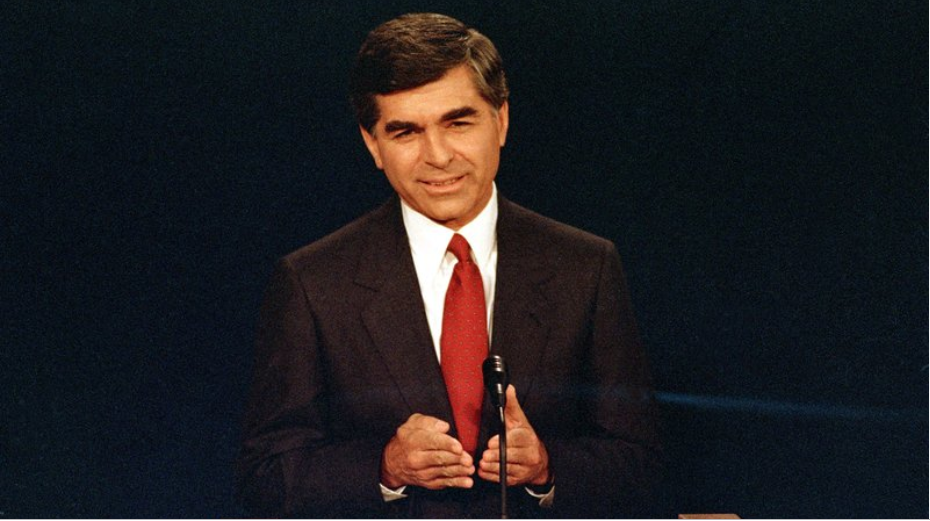

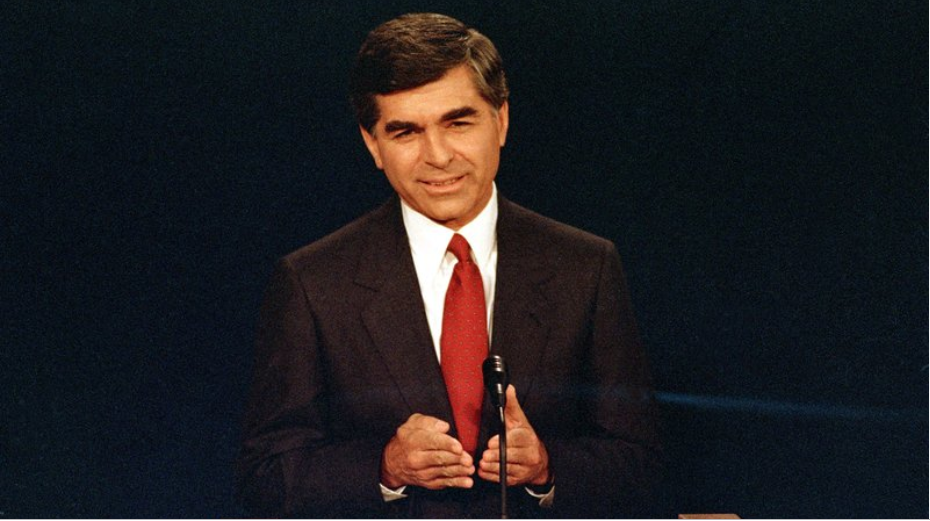

The former Governor of Massachusetts, Michael Dukakis, was George H. W. Bush’s opponent in the 1988 election. He acknowledged Bush’s contribution to ending the Cold War.

On November 30, 2018, George H. W. Bush, the 41st President of the United States (1989 – 1993), passed away at the age of 94 at his home, surrounded by his family.

In a telephone interview with the Associated Press, Gov. Michael Dukakis, Chairman of the Boston Global Forum and the Michael Dukakis Institute for Leadership and Innovation, expressed his admiration for Bush’s accomplishments. Although he never had a friendship with Bush, he considered him a wise and thoughtful man. In addition, Dukakis praised Bush for his willingness to work with Democrats and for his efforts in negotiating with Mikhail Gorbachëv to end the Cold War.

by BGF | Dec 3, 2018 | News

On December 1, the World Leadership Alliance – Club de Madrid is celebrating the 85th birthday of its President, Vaira Vike-Freiberga.

Dr. Vaira Vike-Freiberga is the former President of Latvia (1999-2007) and has been the President of the World Leadership Alliance – Club de Madrid (WLA-CdM) since 2014.

Since the end of her presidency in July 2007, Dr. Vike-Freiberga has been an active invited speaker at a wide variety of international events. She is a member, board member or patron of 29 international organizations, including the WLA-CdM, the Council of Women World Leaders, the International Criminal Court Trust Fund for Victims, and the European Council on Foreign Relations, as well as four Academies. Among her international activities, in December 2007 she was appointed by the European Council as Vice-Chair of the Reflection Group on the long-term future of Europe. From 2011 to 2012, she chaired the High-level Group on Freedom and Pluralism of the Media in the EU.

Regardless of her age, she continues to make outstanding contributions to the world. She is also a member of the Boston Global Forum’s Board of Thinkers. On May 15, 2018, the Boston Global Forum and the Michael Dukakis Institute honored Dr. Vike-Freiberga as Distinguished Innovation Leader for her distinguished work in the humanities and social sciences.

by BGF | Nov 25, 2018 | News

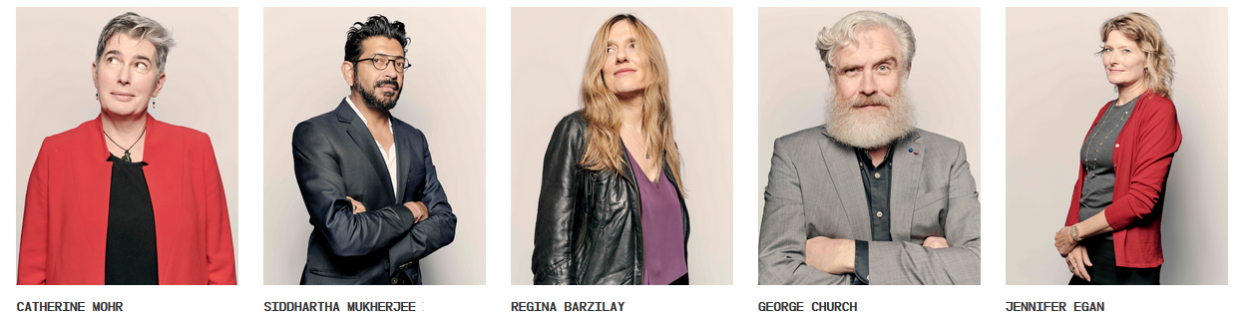

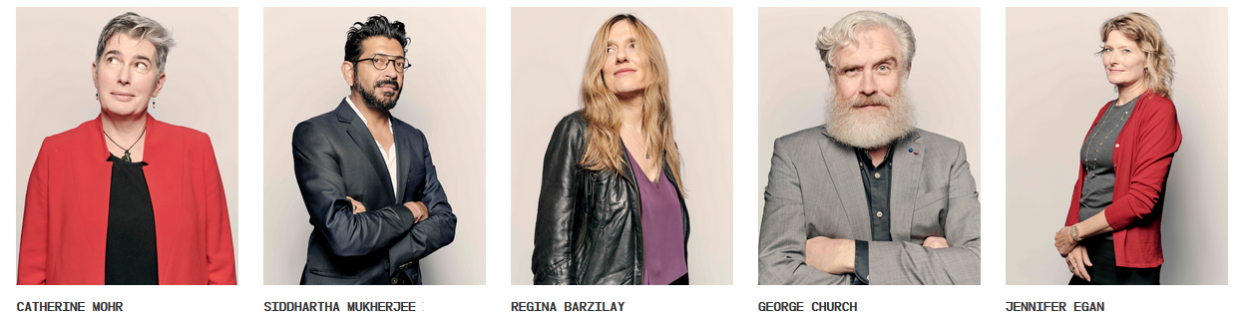

The New York Times gathered five big thinkers in a room at a bar for a three-hour dinner, moderated by Mark Jannot, to discuss the future of healthcare and humanity.

The five guests at the table were:

- Catherine Mohr is an engineer, a medical doctor, Vice President of Strategy at Intuitive Surgical (makers of the Da Vinci surgical robot), and President of the Intuitive Foundation;

- Siddhartha Mukherjee is a physician, biologist, oncologist and author of “The Gene: An Intimate History” and “The Emperor of All Maladies: A Biography of Cancer,” which won the 2011 Pulitzer Prize for general non-fiction;

- Regina Barzilay is a professor at MIT and a member of MIT Computer Science and Artificial Intelligence Laboratory;

- George Church is a professor of genetics at Harvard Medical School and director of personalgenomes.org, an open-access information resource on human genomic, environmental and trait data;

- Jennifer Egan is a writer whose most recent novel, “Manhattan Beach,” was awarded the 2018 Andrew Carnegie Medal for excellence in fiction.

The conversation ranged across many topics, from gene editing to AI.

The first topic, gene editing, started with a question: Will we engineer our children, and ourselves?

The idea of editing sperm cells, selecting the best traits, and removing all diseases fascinates people. “You could edit the sperm, change that allele so that all sperm are healthy, and your offspring will be fine. All sperm come from spermatogonia stem cells in the man’s testes. You can use editing tools and work on stem cells in Petri dishes so that you’re removing the bad allele and replacing it with DNA that has been designed and synthesized on computer-controlled machines,” said Professor George Church.

However, this has not yet been done, since scientists have not figured out how to apply it to stem cells and sperm cells. Mukherjee said that his team edited a gene in human blood stem cells to enable therapy for some forms of leukemia; they then encountered technical issues, but the potential of the system is remarkable. In addition, adults can also have their genes edited by adding and removing pieces of DNA. Nonetheless, in such a complex system, any adjustment can bring unexpected side effects that we cannot control.

What are the most interesting applications for AI in medicine right now?

In Barzilay’s opinion, the most interesting application of AI is its ability to personalize the user experience by collecting our data. She told the story of how she found out she had breast cancer. Many of the questions she had at the time could have been answered better by AI than by a human doctor, who may make you wait months for a diagnosis through an unnecessarily slow procedure.

With the help of AI, medicine can be transformed, as AI can provide early detection and save many lives. It might take time, but with thorough training on huge databases of records, AI could reach human-level diagnosis. “To achieve this, it isn’t just recognizing what is in the picture or the sounds; these algorithms need to understand the context, where you are in the procedure, what’s going to happen and what should ordinarily happen next,” said Catherine Mohr.

Will we know too much?

As the data collected is going to be enormous, we will be able to make even deeper clinical assessments, for example of life expectancy. This might create privacy problems, as the body can be known too well. Your own data could also slip beyond your control and fall into anyone’s hands.

Will we live longer and happier?

According to Mohr, as living conditions improve, healthcare gets better, and diseases are predicted earlier, longevity will certainly be extended. However, there is still a chance that we only extend the weaker part of our lives, which is not what we desire. Moreover, living longer means using more of the planet’s resources.

Regarding people’s happiness, “we already confront so much less death than people did, say, before antibiotics. But does having fewer of those losses really make us happier?” asked Egan.

Mohr also said that what brings joy to life is working toward the acquisition of new skills and the ability to make choices for yourself. However, if we live in an era when machines do most things, we will have none of this. So what will we do with those machines? We will need an ethical framework for using machines for peace and safety, which is what the Michael Dukakis Institute is doing with the AIWS Initiative.

by BGF | Nov 25, 2018 | News

The Open Data Institute (ODI) recently published a report on the UK’s geospatial data that addresses its opportunities and challenges. The report emphasizes the value of map data to autonomous technology.

The report, carried out in the UK, suggests that publishing map data owned by Apple, Google and Uber would lead to major developments in technologies like autonomous cars and drones.

So far, internet giants such as Google Maps and Uber have collected a huge amount of geospatial data, including addresses and boundaries, through the services they provide. However, the data is not accessible to the public. The ODI argues that it should be considered part of the “national infrastructure”.

Opening the data could spur a boom in transportation worth approximately £11 billion, with benefits including access to schools and hospitals for remote areas and advancements in commercial satellites, connected cars and drones.

Despite the considerable benefits, it is difficult to mandate access to the data, as these firms have no incentive to give up their competitive advantages. The ODI recommends political support from the government to make this possible in the near future.

Sharing geospatial data will help governments deliver their public services. The sixth layer of the AIWS 7-layer Model developed by the Michael Dukakis Institute studies how to apply AI effectively to public services and policy-making.

by BGF | Nov 25, 2018 | AI World Society Summit

The AI World Conference and Expo on “Accelerating Innovation in the Enterprise”, held December 3-5, 2018 in Boston, focuses on the state of the practice of AI in the enterprise. The 3-day conference and exposition is designed for business and technology executives who want to learn about innovative implementations of AI in the enterprise through case studies and peer networking.

As enterprises begin to scale AI pilot programs, which often incorporate deep learning (DL), machine learning (ML), and natural language processing (NLP), across the enterprise, the need for a high-performance compute and storage environment becomes clear. High Performance Computing (HPC) environments, with their massive processing capability and low-latency access to data, are seen by many observers as a clear complementary technology to AI. This panel workshop will address the following sub-topics:

- What are the top AI use cases that are likely to be powered by HPC systems now, and in the future?

- What technological and operational challenges exist with deploying HPC systems that can support AI pilot programs or full-scale rollouts?

- What operational models are enterprises using to deploy AI technology that is powered by HPC?

- What security and regulatory issues need to be considered when using HPC systems to power AI use cases?

The panel will be moderated by Keith Kirkpatrick, Principal Analyst at Tractica, and will feature the following panelists:

- Christopher Carothers, PhD, Technology & Academic Advisor, Research & Development, Lucd

- Gary Tyreman, CEO, Univa

- Margrit Betke, PhD, Professor of Computer Science, Boston University

The Michael Dukakis Institute for Leadership and Innovation is an international sponsor of the event and is collaborating with AI World to publish reports and programs on AI-Government, including the AIWS Index and AIWS Products.

Please join Governor Michael Dukakis, honorary advisory board member and a featured guest speaker, on Tuesday, December 4 at 8:55 am along with thousands of global 200 business executives at AI World.

For more information about the event, visit aiworld.com.

To register and receive a $200 discount on a 2-3 day conference registration, enter priority code 186800MDI.

To receive a complimentary pass to attend the expo, enter priority code 186800XMDI.

by BGF | Nov 25, 2018 | News

Although AI has benefited us greatly in many areas since its emergence, there are also doubts about it, especially about how biased AI algorithms are. This concern has opened many discussions on AI ethics that seek common ground across diverse cultures.

However, biases in AI are not likely to completely disappear, since the same biases exist without AI. These discussions should focus on how to make AI trustworthy.

In fact, all the opinions we have on AI are biased. The media tends to favor shocking stories; hence, we usually hear more about bad examples than good ones. The press cultivates fear among us, while trust is what we need. Instead of only trying to prevent AI from being unfair, we should learn how to help AI make the right decisions.

Another key aspect of building trust is transparency. For instance, firms can take part in the AI ethics challenge initiated by the AI Steering Group of Finland’s Ministry of Economic Affairs and Employment. The challenge encourages them to write down their ethical codes for AI development.

Besides, it is essential to have laws governing how and why AI is developed. Companies should be active in the discussion and keep their regulators informed about the achievements they have made.

In general, the current development of AI is not transparent enough to earn people’s trust. With rules and standards, which the AIWS is working on, and ethical frameworks, which the Michael Dukakis Institute is building with the AIWS Initiative, we can take a step closer to transparency and ethics in AI development.

by BGF | Nov 25, 2018 | News

“I think we’re better off thinking about how to both build smarter machines and make sure AI is used for the well-being of as many people as possible,” Professor Yoshua Bengio said in an interview with MIT Technology Review’s senior editor for AI, Will Knight.

Yoshua Bengio has been known as an expert in deep learning since the days when it was just an academic curiosity. He is a professor at the University of Montreal and, in 2016, co-founded Element AI, a company that helps firms explore AI applications.

In the interview with Will Knight, Professor Bengio said that AI will be a game changer that improves people’s lives everywhere. Yet he also raised a few points about how AI should be developed.

Firstly, AI research risks concentrating power, as big companies tend to grow more and more powerful. “It’s dangerous to have too much power concentrated in a few hands,” said Professor Bengio.

Secondly, laws and treaties are needed to prevent lethal uses of AI, along with a defensive system against them, as some countries could secretly develop AI weapons.

Furthermore, achieving a human-level AI that satisfies human needs in the long term requires a long-term strategy as well as investment. Machine learning will be the foundation for a major leap in AI innovation. “We need to be able to extend it to do things like reasoning, learning causality and exploring the world”, said Professor Bengio. As machines are not able to project themselves into different circumstances, they need models. Hence, we need machines that can discover these causal models and learn from their mistakes.

To avoid casualties, AI research needs to follow a certain set of rules that keep AI development under control. This is what the AIWS 7-layer Model is developing.

by BGF | Nov 25, 2018 | News, Practices in Cybersecurity

Over the years, machine learning has taken major leaps and become the basis for many security technologies. There is a risk, however, that attackers will use it in the future. Mikko Hypponen shared his views on preparing to protect users’ privacy and internet safety.

People are now trying to automate their homes by connecting them to IoT devices. However, this leaves your home exposed to attackers. To keep it safe from attack, “Put your IoT devices in a separate network segment. Do not allow any connectivity from the IoT segment to your production network. Keep their firmware up-to-date. Change the default credentials. Read the manuals,” said Mikko Hypponen.

In regard to online privacy, he suggested compartmentalizing your online life: separate your online identity from your real-world identity. For instance, give your Reddit account a name unrelated to your real name, and keep your personal information secure by not revealing it on the internet at all.

Mikko Hypponen is a cybersecurity expert and columnist and the Chief Research Officer at F-Secure. In 2015, the Boston Global Forum and the Michael Dukakis Institute named him Practitioner in Cybersecurity for his great work and active contribution to the public’s knowledge of cybersecurity. He has worked in computer security for over 20 years and has fought the biggest malware outbreaks on the net.