by Editor | Apr 19, 2020 | News

Professor John Quelch is co-founder of the Boston Global Forum, Vice Provost of the University of Miami, Dean of the Miami Herbert Business School, and Leonard M. Miller University Professor.

Professor Quelch recently contributed a video advising people on how to prevent coronavirus infection with the 7Cs.

by Editor | Apr 19, 2020 | News

Most C-suite executives know they need to integrate AI capabilities to stay competitive, but too many of them fail to move beyond the proof of concept stage. They get stuck focusing on the wrong details or building a model to prove a point rather than solve a problem. That’s concerning because, according to our research, three out of four executives believe that if they don’t scale AI in the next five years, they risk going out of business entirely. To fix this, we offer a radical solution: Kill the proof of concept. Go right to scale.

We came to this solution after surveying 1,500 C-suite executives across 16 industries in 12 countries. We discovered that while 84% know they need to scale AI across their businesses to achieve their strategic growth objectives, only 16% of them have actually moved beyond experimenting with AI. The companies in our research that were successfully implementing full-scale AI had all done one thing: they had abandoned proofs of concept.

To scale value in the AI era, the key is to think big and start small: prioritize advanced analytics, governance, ethics, and talent from jump. It also demands planning. Decide what value looks like for you, now and three years from now. Don’t sacrifice your future relevance by being so focused on delivering for today that you aren’t prepared for the next wave. Understand how AI is changing your industry and the world, and have a plan to capitalize on it.

This is certainly new territory, but there is still time to get ahead if you lay the groundwork now. What you don’t have time to do is waste it proving a concept that already exists as consensus.

The original article can be found here.

According to the Artificial Intelligence World Society Innovation Network (AIWS.net), AI can be an important tool to serve and strengthen democracy, human rights, and the rule of law. In this effort, the Michael Dukakis Institute for Leadership and Innovation (MDI) invites participation and collaboration with think tanks, universities, non-profits, firms, and other entities that share its commitment to the constructive development of full-scale AI for world society.

by Editor | Apr 19, 2020 | News

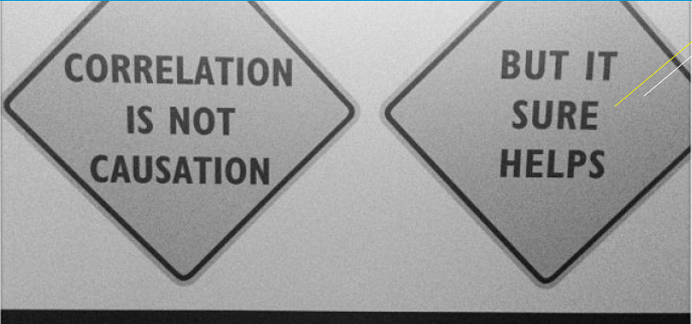

“Correlation is not causation.”

Though true and important, the warning has hardened into the familiarity of a cliché. Stock examples of so-called spurious correlations are now a dime a dozen. As one example goes, a Pacific island tribe believed flea infestations to be good for one’s health because they observed that healthy people had fleas while sick people did not. The correlation is real and robust, but fleas do not cause health, of course: they merely indicate it. Fleas on a fevered body abandon ship and seek a healthier host. One should not seek out and encourage fleas in the quest to ward off sickness.
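The flea story can be made concrete with a toy simulation. The sketch below is only an illustration under invented assumptions: the probabilities (70% of people healthy, fleas staying on 90% of healthy hosts and 10% of sick ones) and the function names are hypothetical, not taken from the book. It compares what an observer would see with what happens when fleas are assigned by intervention.

```python
import random


def simulate(n=10_000, seed=0, force_fleas=None):
    """Return (has_fleas, is_healthy) pairs for a toy population.

    Health is decided first; fleas only react to it by leaving sick hosts.
    If force_fleas is True or False, fleas are assigned by intervention
    and no longer depend on health. All probabilities are made up.
    """
    rng = random.Random(seed)
    people = []
    for _ in range(n):
        healthy = rng.random() < 0.7
        if force_fleas is None:
            # Observational world: fleas prefer healthy hosts.
            fleas = rng.random() < (0.9 if healthy else 0.1)
        else:
            # Interventional world: we decide who has fleas.
            fleas = force_fleas
        people.append((fleas, healthy))
    return people


def health_rate(people, fleas):
    """Fraction of healthy people among those with the given flea status."""
    group = [healthy for has_fleas, healthy in people if has_fleas == fleas]
    return sum(group) / len(group)


# Observation: people with fleas are far more often healthy.
observed = simulate()
observed_gap = health_rate(observed, True) - health_rate(observed, False)

# Intervention: giving everyone fleas, or no one, leaves health unchanged.
with_fleas = health_rate(simulate(force_fleas=True), True)
without_fleas = health_rate(simulate(force_fleas=False), False)

print(f"observed gap in health rate: {observed_gap:.2f}")
print(f"health rate if fleas are forced on everyone: {with_fleas:.2f}")
print(f"health rate if fleas are removed from everyone: {without_fleas:.2f}")
```

In this toy world the observed gap is large, yet forcing fleas on or off changes nothing, because health drives fleas and not the other way around.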

The rub lies in another observation: that the evidence for causation seems to lie entirely in correlations. But for seeing correlations, we would have no clue about causation. The only reason we discovered that smoking causes lung cancer, for example, is that we observed correlations in that particular circumstance. And thus a puzzle arises: if causation cannot be reduced to correlation, how can correlation serve as evidence of causation?

The Book of Why, co-authored by the computer scientist Judea Pearl and the science writer Dana Mackenzie, sets out to give a new answer to this old question, which has been around—in some form or another, posed by scientists and philosophers alike—at least since the Enlightenment. In 2011 Pearl won the Turing Award, computer science’s highest honor, for “fundamental contributions to artificial intelligence through the development of a calculus of probabilistic and causal reasoning,” and this book sets out to explain what all that means for a general audience, updating his more technical book on the same subject, Causality, published nearly two decades ago. Written in the first person, the new volume mixes theory, history, and memoir, detailing both the technical tools of causal reasoning Pearl has developed as well as the tortuous path by which he arrived at them—all along bucking a scientific establishment that, in his telling, had long ago contented itself with data-crunching analysis of correlations at the expense of investigation of causes. There are nuggets of wisdom and cautionary tales in both these aspects of the book, the scientific as well as the sociological.

Professor Pearl’s book was also praised by Dr. Vint Cerf, Chief Internet Evangelist at Google Inc. and recipient of the World Leader in AI World Society (AIWS) award: “Pearl’s accomplishments over the last 30 years have provided the theoretical basis for progress in artificial intelligence… and they have redefined the term ‘thinking machine.’” In 2020, AI World Society (AIWS.net) created a new section on Modern Causal Inference, which will be led by Professor Judea Pearl. Professor Pearl’s work will contribute to AI transparency, one of the important AIWS topics, which aim to identify, publish and promote principles for the virtuous application of AI in different domains including healthcare, education, transportation, national security, and other areas.

The original article can be found here.

by Editor | Apr 12, 2020 | News

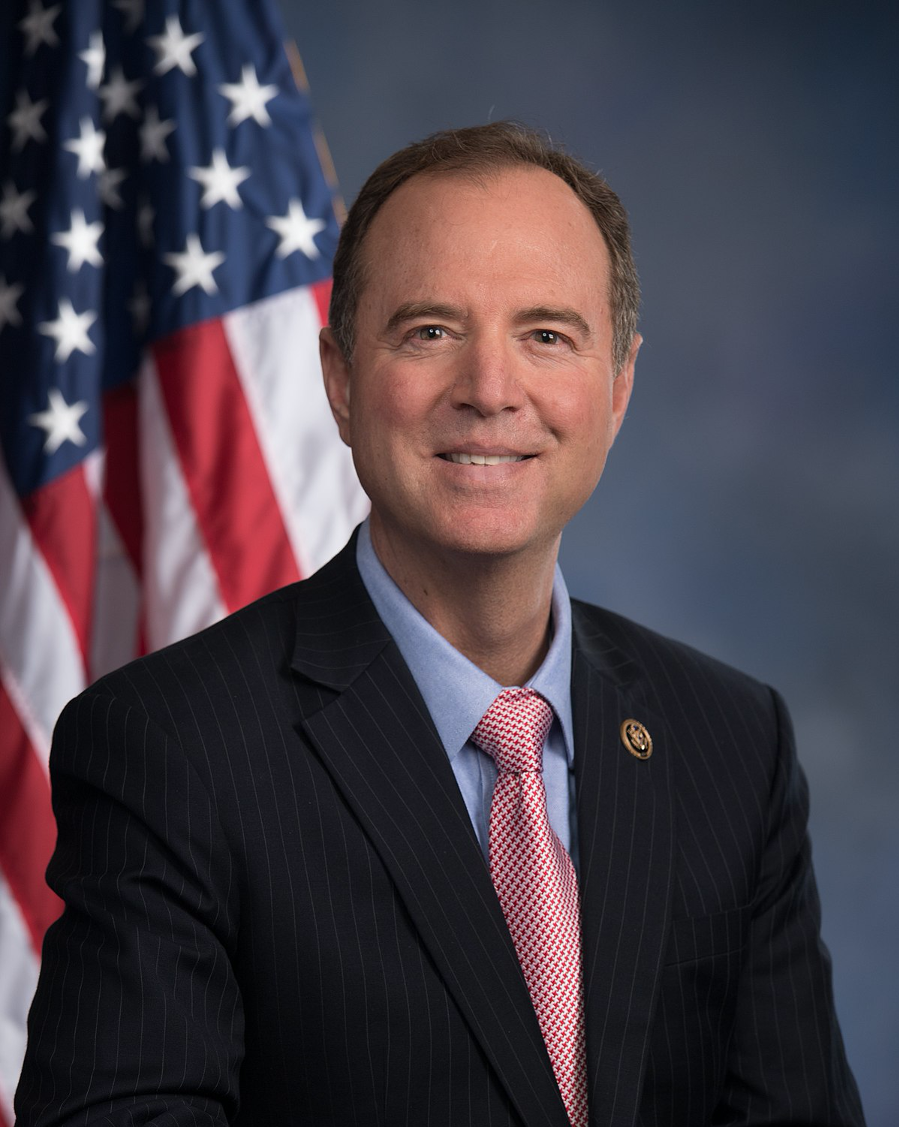

Reps. Adam Schiff (D-CA) and Steve Chabot (R-OH), the co-chairs of the Congressional Freedom of the Press Caucus, released the following statement on a Pakistani appeals court’s commutation of the sentences of four men convicted of murdering journalist Daniel Pearl:

“It is deeply disturbing that a Pakistani appeals court recently commuted the sentences of four men convicted of brutally murdering Wall Street Journal reporter Daniel Pearl in 2002, a decision that would drastically reduce their sentences. We welcome the announcement that this decision will be further appealed and urge the Pakistani Supreme Court in the strongest terms to ensure this miscarriage of justice does not stand.

“This is also cause to remember and honor the tremendous personal risks that journalists like Daniel Pearl take all around the world to tell stories that must be told. It is our responsibility to stand with them. In 2010, Congress passed the Daniel Pearl Freedom of the Press Act to hold those who persecute reporters accountable. With journalists increasingly under threat around the world, it’s time to strengthen the penalties on those who attack journalists, not commute the sentences of cold blooded murderers.”

On April 9, 2020, the CEO of the Boston Global Forum, Mr. Nguyen Anh Tuan, sent a letter to the Pakistani Prime Minister protesting the April 2, 2020 decision of the high court in Pakistan’s Sindh province, which ordered the release of four men convicted of participating in the 2002 kidnapping and murder of Wall Street Journal reporter Daniel Pearl.

Professor Judea Pearl, father of journalist Daniel Pearl, wrote on Twitter: “We are grateful to Congressmen Adam Schiff and Steve Chabot for taking a strong, bipartisan stand on behalf of justice and press freedom.” Professor Judea Pearl is Chancellor’s Professor at UCLA and a Mentor of AIWS.net.

by Editor | Apr 12, 2020 | News

Michael Dukakis was discharged from a Los Angeles hospital where he was admitted on March 24 with bacterial pneumonia. In a note to The National Herald he wrote, “doing a lot better after nine days in the hospital and wrapping up the academic quarter here at UCLA. Hope we’ll be heading back to Boston soon, but I think that will be awhile. All the best, and stay in touch.”

Dukakis is 86 years old and in excellent health. He is a distinguished professor of Political Science at Northeastern University in Boston, where he teaches the first semester of each year; in the spring semester he teaches at UCLA. He and his wife Kitty live in a house near the University.

He had experienced respiratory symptoms and was twice tested for the coronavirus but the results came back negative. He was then diagnosed with bacterial pneumonia.

Dukakis served twice as governor of Massachusetts, first from 1975 to 1979 and then from 1983 to 1991. In 1988 he became the first Greek-American to run for President of the United States when he received the nomination of the Democratic Party. Dukakis enjoys teaching at Northeastern and he remains active in the Democratic Party and in politics at the local and national level. He encourages young people, including Greek-Americans, to get involved in politics and public life.

The original article can be found here.

Congratulations to Governor Michael Dukakis, co-founder and Chairman of the Boston Global Forum, co-founder and Chairman of the Michael Dukakis Institute, and co-founder of the AI World Society Innovation Network (AIWS.net).

by Editor | Apr 12, 2020 | News

It’s too early to quantify the economic impact of the global COVID-19 pandemic, but because of this outbreak compounded with the U.S.-China trade war, global supply chains and businesses linked to the world’s second-biggest economy are being impacted. As I sit here in Singapore monitoring the spread of the outbreak in Asia and beyond, the mounting human cost is also of especially deep concern to me.

But even amid adversity comes the opportunity for innovation and invention. Chinese tech companies Alibaba, Tencent and Baidu have opened their artificial intelligence (AI) and cloud computing technologies to researchers to quicken the development of virus drugs and vaccines. U.S.-based medical startups are using AI to rapidly identify thousands of new molecules that could be turned into potential cures. Yet another is using the same technology for early warning and detection by analyzing global airline ticketing data.

This has all served as a reminder for me, an AI founder, of the immense potential of AI for improving efficiency, growth and productivity. AI-enabled automation can really make a difference for traditional services and offline businesses transitioning to digital and online channels.

If increasing productivity while lowering cost is vital for your business, then you might benefit from taking another look at these particular areas where AI can really help: automating processes, gaining customer and competitive insight through data and improving customer and employee engagement.

The original article can be found here.

In view of the impact of AI on world society, the Michael Dukakis Institute for Leadership and Innovation (MDI) established the Artificial Intelligence World Society Innovation Network (AIWS.net) to monitor AI developments and uses by governments, corporations, and non-profit organizations, and to assess whether they comply with the norms and standards codified in the AIWS Social Contract 2020.

by Editor | Apr 12, 2020 | News

The statistical branch of Artificial Intelligence has enamored organizations across industries, spurred an immense amount of capital dedicated to its technologies, and entranced numerous media outlets for the past couple of years. All of this attention, however, will ultimately prove unwarranted unless organizations, data scientists, and various vendors can answer one simple question: can they provide Explainable AI?

Although explaining the results of Machine Learning models, and producing consistent results from them, has never been easy, a number of emergent techniques have recently appeared to open the proverbial ‘black box’ that makes these models so difficult to explain.

One of the most useful involves modeling real-world events with the adaptive schema of knowledge graphs and, via Machine Learning, gleaning whether they’re related and how frequently they take place together.

When the knowledge graph environment becomes endowed with an additional temporal dimension that organizations can traverse forwards and backwards with dynamic visualizations, they can understand what actually triggered these events, how one affected others, and the critical aspect of causation necessary for Explainable AI.

Investments in AI may well hinge upon such visual methods for demonstrating causation between events analyzed by Machine Learning.

As Judea Pearl’s renowned The Book of Why affirms, one of the cardinal statistical concepts upon which Machine Learning is based is that correlation isn’t tantamount to causation. Part of the pressing need for Explainable AI today is that in the zeal to operationalize these technologies, many users are mistaking correlation for causation—which is perhaps understandable because aspects of correlation can prove useful for determining causation.

Causation is the foundation of Explainable AI. It enables organizations to understand that when given X, they can predict the likelihood of Y. In aircraft repairs, for example, causation between events might empower organizations to know that when a specific part in an engine fails, there’s a greater probability for having to replace cooling system infrastructure.
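The “given X, predict the likelihood of Y” idea can be sketched with a few lines of Python. The records below are invented for illustration, and the event names and helper functions are hypothetical. Note that the calculation only measures how often two events occur together; on its own it shows association, not causation.

```python
# Hypothetical maintenance records: the set of events logged per repair visit.
visits = [
    {"part_failure", "cooling_replacement"},
    {"part_failure", "cooling_replacement"},
    {"part_failure"},
    {"cooling_replacement"},
    set(),
    set(),
    {"part_failure", "cooling_replacement"},
    set(),
]


def probability(event, records):
    """Fraction of records in which the event was logged."""
    return sum(event in record for record in records) / len(records)


def conditional_probability(target, given, records):
    """Estimate P(target | given) from co-occurrence counts."""
    matching = [record for record in records if given in record]
    if not matching:
        raise ValueError(f"no records contain {given!r}")
    return probability(target, matching)


# How often is the cooling system replaced overall?
baseline = probability("cooling_replacement", visits)

# How often is it replaced when the engine part has failed?
conditional = conditional_probability(
    "cooling_replacement", "part_failure", visits
)

# A ratio above 1 means the two events co-occur more often than the baseline.
lift = conditional / baseline

print(f"P(cooling replacement) = {baseline:.2f}")
print(f"P(cooling replacement | part failure) = {conditional:.2f}")
print(f"lift = {lift:.2f}")
```

Establishing that the part failure actually triggers the cooling system replacement would require more than these counts, such as the ordering of events in time or a controlled intervention.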

The original article can be found here.

Regarding AI and causality, AI World Society (AIWS.net) created a new section on Modern Causal Inference in 2020. This section will be led by Professor Judea Pearl, a pioneering figure in AI causality and the author of the well-known book The Book of Why. Professor Pearl’s work will contribute to Causal Inference for AI transparency, which is one of the important AIWS topics.

by Editor | Apr 5, 2020 | Event Updates

An online preliminary policy discussion on “Transatlantic Approaches on Digital Governance: A New Social Contract in Artificial Intelligence (AI) Age” will be held under the AI World Society Innovation Network (AIWS.net) starting April 28, 2020.

A face-to-face policy discussion will be held in Boston on September 16-18, 2020.

The World Leadership Alliance-Club de Madrid (WLA-CdM) in partnership with the Boston Global Forum (BGF) is organizing a Transatlantic and multi-stakeholder dialogue on global challenges and policy solutions in the context of the need to create a new social contract on digital technologies and Artificial Intelligence (AI).

Over the years, Transatlantic relations have been characterized by close cooperation and continuous work for common interests and values. This cooperation has been essential to strengthening the multilateral system, given the principles both sides share on democracy, rule of law, and fairness.

By comparing American and European approaches to the creation of a new social contract for the AI Age and digital governance, under the critical eye of former democratic Heads of State or Government, this policy dialogue will stimulate new thinking and bring out ideas from representatives of governments, academic institutions and think tanks, tech companies, and civil society, from both regions.

At the same time, the discussion will create a space to encourage and strengthen Transatlantic cooperation on the new social contract of digital governance in the framework of needed reforms of the multilateral system, and will serve as a platform to establish a Transatlantic Alliance for Digital Governance. The policy discussion also aims to consider the creation of an initiative to monitor how governments and companies use AI and to generate an AI Ethics Index at all levels.

Given the world health emergency experienced in the first months of the year related to the COVID-19 pandemic and its impact on all actors and spheres of life, digital technologies and artificial intelligence have been strong allies in facing the situation in multiple dimensions (scientific, health, social, etc.). However, digital technologies also bring new challenges to address under these circumstances. New communication channels have contributed to the rapid spread of fake news about COVID-19, generating disinformation, increasing confusion, influencing society’s perception, and raising collective concern. In other cases, the new tools used to track and fight the virus could imply a violation of privacy rights.

The relevance of the topic leads us to include a global health security component in the Policy Lab, analyzing the implications of artificial intelligence and new technologies in this regard, as well as the response of governments, international organizations, companies and society. The situation has demonstrated that a Social Contract on digital governance and the renewal of multilateralism and global cooperation mechanisms are more necessary than ever.

by Editor | Apr 5, 2020 | News

Vint Cerf, recipient of the 2019 World Leader in AI World Society Award, a mentor of AIWS.net, and one of the fathers of the Internet, tweeted:

“Good news – VA Public Health has certified my wife and me as no longer contagious with COVID19. Recovering!”

AIWS.net congratulates Mr. Vint Cerf on this wonderful news and hopes the world will defeat COVID-19 soon.

The Boston Global Forum and Michael Dukakis Institute for Leadership and Innovation honored Mr. Vint Cerf as World Leader in AI World Society (AIWS) at the AIWS-G7 Summit Initiative Conference on April 25, 2019 at Loeb House, Harvard University.

At this event, the Boston Global Forum presented the AIWS-G7 Summit Initiative to the French Government, host of the 2019 G7 Summit. The initiative envisioned AI-government, AI-citizen, and a smart democracy with deeply applied AI.