by Editor | May 4, 2020 | Event Updates

The Boston Global Forum and World Leadership Alliance-Club de Madrid (WLA-CdM) will co-organize the Online AIWS Roundtable, attended by President of WLA-CdM and former President of Slovenia Danilo Türk, former Prime Minister of Canada Kim Campbell, former Prime Minister of the Netherlands Jan Peter Balkenende, Speaker of the Swedish Parliament Andreas Norlén, and leaders of the AI World Society Innovation Network (AIWS.net): Professors Thomas Patterson (Harvard), Nazli Choucri (MIT), Alex Pentland (MIT), and David Silbersweig (Harvard).

Participants will discuss the Social Contract 2020, focusing on the protection of privacy rights during the COVID-19 pandemic.

Time: 9:00 am (EST), May 12, 2020.

World Leadership Alliance-Club de Madrid (WLA-CdM) is the largest worldwide assembly of political leaders working to strengthen democratic values, good governance and the well-being of citizens across the globe. As a non-profit, non-partisan, international organisation, its network is composed of more than 100 democratic former Presidents and Prime Ministers from over 70 countries, together with a global body of advisors and expert practitioners, who offer their voices and agency on a pro bono basis to today’s political and civil society leaders and policymakers. WLA-CdM responds to a growing demand for trusted advice in addressing the challenges involved in achieving democracy that delivers, building bridges, bringing down silos and promoting dialogue for the design of better policies for all. This alliance, providing the experience, access and convening power of its Members, represents an independent effort towards sustainable development, inclusion, and peace, not bound by the interests or pressures of institutions and governments.

This event is a part of the Policy Dialog 2020: Transatlantic Approaches on Digital Governance: A New Social Contract in Artificial Intelligence Age, sponsored by Mr. Nguyen Van Tuong, co-founder and Executive Chairman of ATC Tram Huong Khanh Hoa.

by Editor | May 4, 2020 | News

In a pair of papers accepted to the International Conference on Learning Representations (ICLR) 2020, MIT researchers investigated new ways to motivate software agents to explore their environment and pruning algorithms to make AI apps run faster. Taken together, the twin approaches could foster the development of autonomous industrial, commercial, and home machines that require less computation but are simultaneously more capable than products currently in the wild. (Think an inventory-checking robot built atop a Raspberry Pi that swiftly learns to navigate grocery store aisles, for instance.)

One team created a meta-learning algorithm that generated 52,000 exploration algorithms, or algorithms that drive agents to widely explore their surroundings. Two they identified were entirely new and resulted in exploration that improved learning in a range of simulated tasks — from landing a moon rover and raising a robotic arm to moving an ant-like robot.

In the second of the two studies, an MIT team describes a framework that reliably compresses models so that they’re able to run on resource-constrained devices. While the researchers admit that they don’t understand why it works as well as it does, they claim it’s easier and faster to implement than other compression methods, including those that are considered state of the art.

The original article can be found here.

To support the application of AI in world society, the Artificial Intelligence World Society Innovation Network (AIWS.net) created the AIWS Young Leaders program, which includes some MIT researchers as well as Young Leaders and Experts from Australia, Austria, Belgium, Britain, Canada, Denmark, Estonia, France, Finland, Germany, Greece, India, Italy, Japan, Latvia, Netherlands, New Zealand, Norway, Poland, Portugal, Russia, Spain, Sweden, Switzerland, United States, and Vietnam.

by Editor | May 4, 2020 | News

As researchers pursue AGI in machines, there has been renewed interest in the idea of causality in models. Applying machine learning to problems of causal inference has significant implications in fields such as healthcare, economics and education.

Here are a few top works that acknowledge the challenges of causal inference in machines and offer solutions:

- The Seven Tools Of Causal Inference

- A Causal Bayesian Networks Viewpoint on Fairness

- Causal Inference And The Data-fusion Problem

- Reinforcement Knowledge Graph Reasoning for Explainable Recommendation

- Double/Debiased Machine Learning for Treatment and Causal Parameters

- Causal Regularization

- Unbiased Scene Graph Generation

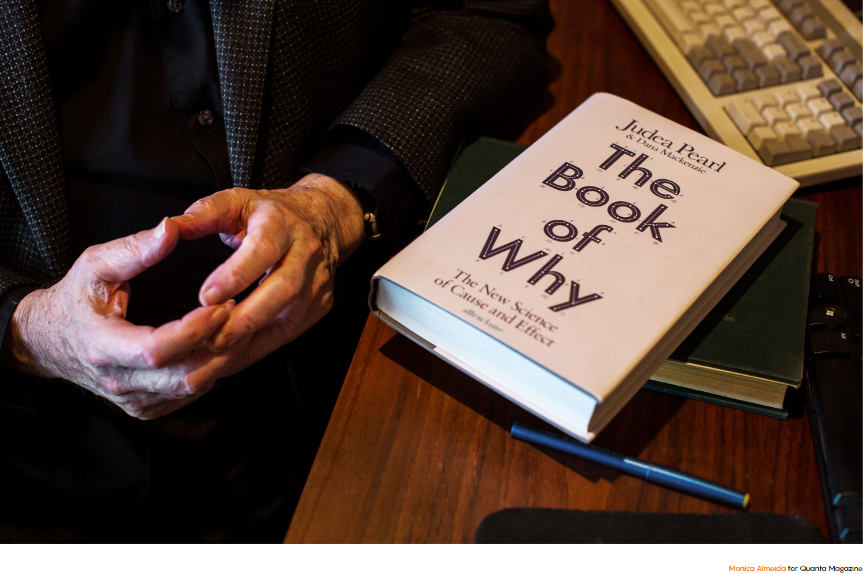

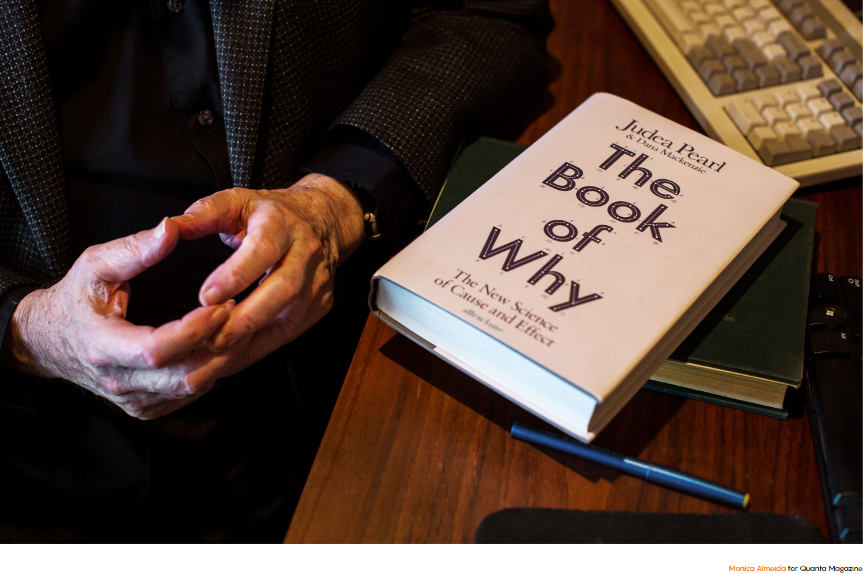

In the paper “The Seven Tools Of Causal Inference”, Judea Pearl, who has championed the notion of causal inference in machines, argues that causal reasoning is an indispensable component of human thought that should be formalized and algorithmized towards achieving human-level machine intelligence. In this paper, Pearl analyses some of the challenges in the form of a three-level hierarchy (association, intervention, and counterfactuals) and shows that inference at the two higher levels requires a causal model of one’s environment. He also describes seven cognitive tasks that require tools from those two levels of inference.

The original article can be found here.

In the field of causal reasoning, Professor Judea Pearl is a pioneer who developed a theory of causal and counterfactual inference based on structural models. In 2020, Professor Pearl was also honored as World Leader in AI World Society (AIWS.net) by the Michael Dukakis Institute for Leadership and Innovation (MDI) and the Boston Global Forum (BGF). In the future, Professor Pearl will also contribute to Causal Inference for AI transparency, which is one of the important AIWS topics on AI Ethics.

by Editor | Apr 26, 2020 | Event Updates

EY (Ernst & Young), MIT Connection Science, the Michael Dukakis Institute, the World Bank, and New America have partnered to establish The Prosperity Collaborative to advance practical solutions to the financial crisis resulting from the COVID-19 pandemic. The COVID-19 pandemic constitutes a serious challenge for most countries, particularly those with weak health and social protection systems and feeble response capacities overall. Governments will have to reprioritize development, service delivery, and administrative activities in order to appropriately, and urgently, allocate financial resources to serve their citizens’ basic needs. Many countries face fiscal space constraints given these urgent needs.

Revenues are expected to decline faster than GDP. The COVID-19 pandemic and the resulting economic slowdown are lowering government revenues. The impact on revenues will likely exceed the hit on economic growth, as the fiscal multiplier during economic downturns has exceeded 1 historically. The resulting decline in the revenue-to-GDP ratio reflects: (1) the tendency of some tax bases to decline faster than GDP in the face of an economic downturn (profits, capital gains, excises, and imports tend to decline faster than GDP during a recession); (2) a decline in commodity prices and related revenues; and (3) possible discretionary changes in tax policy in response to the crisis, such as lowering tax rates, inducing capital and labor investments through tax credits and incentives, or further increasing tax allowances and deductions. In the present circumstances, revenue performance may be further harmed by a likely reduction in taxpayers’ compliance and the inability of tax administrations to maintain business continuity.
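To make the arithmetic behind a falling revenue-to-GDP ratio concrete, here is a minimal sketch. All figures are hypothetical, and the `buoyancy` parameter (the percentage change in revenue per one percent change in GDP) is an assumption introduced for illustration, not an estimate from the partners.

```python
def revenue_to_gdp_after_shock(gdp, revenue, gdp_change, buoyancy):
    """Return (old_ratio, new_ratio) of revenue to GDP after a GDP shock.

    gdp_change is fractional (-0.05 means a 5% contraction).
    buoyancy is a hypothetical parameter: the % change in revenue
    for each 1% change in GDP.
    """
    new_gdp = gdp * (1 + gdp_change)
    new_revenue = revenue * (1 + buoyancy * gdp_change)
    return revenue / gdp, new_revenue / new_gdp


# Hypothetical economy: GDP of 100, revenue of 20, GDP falls by 5%,
# and revenue falls 1.5 times as fast as GDP.
old_ratio, new_ratio = revenue_to_gdp_after_shock(
    gdp=100.0, revenue=20.0, gdp_change=-0.05, buoyancy=1.5
)
print(f"{old_ratio:.3f} -> {new_ratio:.3f}")  # 0.200 -> 0.195
```

With a buoyancy of exactly 1, revenue and GDP shrink in step and the ratio is unchanged; the ratio falls only when revenue contracts faster than GDP.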

A public-private partnership is needed to advance practical solutions in this crisis. No single institution has the capabilities, capacity and legitimacy to develop and implement solutions to optimize tax administration effectiveness amidst this unprecedented crisis. A public-private partnership can overcome these hurdles by: (1) leveraging the knowledge of policy, technology and taxation residing with leading-edge organizations from the private sector, academia, and think/action tanks to discover and deploy practical solutions and navigate the rapidly evolving landscape; (2) devising a strategy to help tax administrations continue operating remotely; (3) combining the legitimacy of an international institution, the private sector, civil society, and national governments to set the necessary principles and standards; and (4) mobilizing the necessary resources to get the job done.

by Editor | Apr 26, 2020 | News

“What we need now is critical thinking. We have brains. God gave us brains. We should be using them. Critical thinking is hard work.”

On April 22, 2020, Vint Cerf, Vice President and Chief Evangelist of Google, Father of the Internet, and World Leader in AIWS Award recipient, shared his perspectives on “Pandemic geopolitics and recovery post-COVID” as part of a live video discussion moderated by David Bray, Atlantic Council GeoTech Center Director, on the role of tech, data, and leadership in the global response to and recovery from COVID-19.

by Editor | Apr 26, 2020 | News

Blockchain and artificial intelligence (AI) solve different tasks, but they can work together to improve many processes in the financial services industry, from customer service to loan application reviews and payment processing.

Adopting AI and blockchain technologies can make your financial sector smarter and help it to perform more effectively. Blockchains can provide transparency and data aggregation; they also enforce contract terms. Meanwhile, AI can automate decision-making and improve internal bank processes.

AI and blockchain technology can revolutionize critical processes in the financial services industry. However, these technologies have to be carefully calibrated and integrated with existing operations. The low level of dependence between blockchain and AI technologies is helpful, as you can begin by introducing only one of these technologies to your banking processes. This will allow you to focus on the most important things, such as creating a clear road map, developing an MVP and introducing your product to the market faster than your competitors.

The original article can be found here.

To support the application of AI in world society, including financial services, the Artificial Intelligence World Society Innovation Network (AIWS.net) created the AIWS Young Leaders program, which includes Young Leaders and Experts from Australia, Austria, Belgium, Britain, Canada, Denmark, Estonia, France, Finland, Germany, Greece, India, Italy, Japan, Latvia, Netherlands, New Zealand, Norway, Poland, Portugal, Russia, Spain, Sweden, Switzerland, United States, and Vietnam.

by Editor | Apr 26, 2020 | News

Artificial intelligence owes a lot of its smarts to Judea Pearl. In the 1980s he led efforts that allowed machines to reason probabilistically. Now he’s one of the field’s sharpest critics. In his latest book, “The Book of Why: The New Science of Cause and Effect,” he argues that artificial intelligence has been handicapped by an incomplete understanding of what intelligence really is.

Three decades ago, a prime challenge in artificial intelligence research was to program machines to associate a potential cause to a set of observable conditions. Pearl figured out how to do that using a scheme called Bayesian networks. Bayesian networks made it practical for machines to say that, given a patient who returned from Africa with a fever and body aches, the most likely explanation was malaria. In 2011 Pearl won the Turing Award, computer science’s highest honor, in large part for this work.
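As a rough illustration of the kind of inference described above, the smallest possible Bayesian network has one cause (malaria) and one symptom (fever), and the update is just Bayes' rule. The probabilities below are hypothetical numbers chosen for the example, and the `posterior` helper is an illustrative name, not part of any library.

```python
def posterior(prior, likelihood, false_alarm):
    """P(cause | evidence) by Bayes' rule for a single binary cause.

    prior       = P(cause)
    likelihood  = P(evidence | cause)
    false_alarm = P(evidence | no cause)
    """
    evidence = likelihood * prior + false_alarm * (1 - prior)
    return likelihood * prior / evidence


# Hypothetical numbers, for illustration only.
p_malaria = 0.01              # prior among returning travellers
p_fever_given_malaria = 0.9   # fever is common with malaria
p_fever_given_healthy = 0.05  # fever is rare otherwise

p = posterior(p_malaria, p_fever_given_malaria, p_fever_given_healthy)
print(f"P(malaria | fever) = {p:.3f}")  # P(malaria | fever) = 0.154
```

Observing the fever raises the probability of malaria from 1% to about 15% under these assumed numbers. A real Bayesian network chains many such conditional tables, with several symptoms and competing causes, which is what lets one explanation emerge as the most likely.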

But as Pearl sees it, the field of AI got mired in probabilistic associations. These days, headlines tout the latest breakthroughs in machine learning and neural networks. We read about computers that can master ancient games and drive cars. Pearl is underwhelmed. As he sees it, the state of the art in artificial intelligence today is merely a souped-up version of what machines could already do a generation ago: find hidden regularities in a large set of data. “All the impressive achievements of deep learning amount to just curve fitting,” he said recently.

In his new book, Pearl, now 81, elaborates a vision for how truly intelligent machines would think. The key, he argues, is to replace reasoning by association with causal reasoning. Instead of the mere ability to correlate fever and malaria, machines need the capacity to reason that malaria causes fever. Once this kind of causal framework is in place, it becomes possible for machines to ask counterfactual questions — to inquire how the causal relationships would change given some kind of intervention — which Pearl views as the cornerstone of scientific thought. Pearl also proposes a formal language in which to make this kind of thinking possible — a 21st-century version of the Bayesian framework that allowed machines to think probabilistically.
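The gap between association and intervention can be sketched with a two-variable model in which malaria causes fever. This is only a toy illustration of the idea, not Pearl's formal language; the probabilities and the `joint` and `p_malaria_given_fever` helpers are hypothetical.

```python
P_MALARIA = 0.01                     # hypothetical P(malaria)
P_FEVER = {True: 0.9, False: 0.05}   # hypothetical P(fever | malaria)


def joint(do_fever=None):
    """Joint distribution over (malaria, fever).

    With do_fever=None, fever depends on malaria as usual.
    With do_fever set, fever is forced to that value, which cuts
    the causal arrow from malaria to fever (an intervention).
    """
    dist = {}
    for malaria in (True, False):
        p_m = P_MALARIA if malaria else 1 - P_MALARIA
        for fever in (True, False):
            if do_fever is None:
                p_f = P_FEVER[malaria] if fever else 1 - P_FEVER[malaria]
            else:
                p_f = 1.0 if fever == do_fever else 0.0
            dist[(malaria, fever)] = p_m * p_f
    return dist


def p_malaria_given_fever(dist):
    """P(malaria | fever) computed from a joint distribution."""
    fever_total = dist[(True, True)] + dist[(False, True)]
    return dist[(True, True)] / fever_total


seeing = p_malaria_given_fever(joint())              # observe a fever
doing = p_malaria_given_fever(joint(do_fever=True))  # induce a fever
print(f"seeing fever: {seeing:.3f}, forcing fever: {doing:.3f}")
# seeing fever: 0.154, forcing fever: 0.010
```

Seeing a fever is evidence for malaria, but inducing a fever by other means tells us nothing about malaria, because the direction of causation runs from malaria to fever. A purely associational model, which stores only the correlation, cannot tell these two cases apart.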

Pearl expects that causal reasoning could provide machines with human-level intelligence. They’d be able to communicate with humans more effectively and even, he explains, achieve status as moral entities with a capacity for free will — and for evil.

The original article can be found here.

In support of AI ethics, the Michael Dukakis Institute for Leadership and Innovation (MDI) established the Artificial Intelligence World Society (AIWS.net) for the purpose of promoting ethical norms and practices in the development and use of AI. In addition, the Michael Dukakis Institute and the Boston Global Forum honored Professor Judea Pearl as 2020 World Leader in AI World Society for his contributions to AI causality. In the future, Professor Pearl will also contribute to Causal Inference for AI transparency, which is one of the important AIWS topics on AI Ethics.

by Editor | Apr 19, 2020 | Event Updates

At 9:00 am (Boston time), April 22, 2020, Professor Alex Pentland will speak at the online AIWS Roundtable: Solutions to reopen in democratic countries.

Professor Alex Pentland is the director of MIT Connection Science and co-founder of AIWS.net.

Moderator: Dr. Lyndon Haviland, Adviser to the United Nations Secretary-General

Participants: senior business leaders of global corporations who are alumni of the AMP program at Harvard Business School.

Professor Alex Pentland is one of the seven most powerful data scientists, according to a Forbes Magazine ranking. He will present on using personal data to combat the coronavirus without violating privacy rights, so that the economy and society can reopen.

by Editor | Apr 19, 2020 | News

The world’s current growing pandemic of the Wuhan virus amplifies the paramount and ultimate goal of our AI initiative. Our vision is to establish a universal AI value system ensuring that advances in AI technology make lives better and do not give ruling authorities more tools to oppress people or exacerbate human suffering.

The first of our AI Universal World Guidelines states:

“Right to Transparency: All individuals have the right to know the basis of an AI decision that concerns them. This includes access to the factors, the logic, and techniques that produced the outcome.”

While our world is still preoccupied with fighting the Wuhan virus, it has become clear to all that had the ruling power of China been more forthcoming with its people and with the world, this pandemic could have been avoided or its deadly impact could have been much more limited. As early as November of 2019 in Wuhan, China, there had been many red flags about this new deadly disease; doctors had rung the alarm bell. Unfortunately for all of us, the Chinese government not only kept these warnings secret, but also punished the doctors and suppressed any truthful voice of its people. In Asian culture, there is a saying that “treating an illness is similar to fighting a fire”. Time is of the essence. Any delay will cause irreparable harm.

During the most critical period of November and December of 2019, China repeatedly announced that there was nothing to worry about and that it had everything under control while Chinese people were dying in the thousands. Furthermore, the World Health Organization simply repeated the official version of the Chinese government. This lack of transparency from a national government and from an international organization has no doubt contributed terribly to the avoidable suffering of millions of people across the globe.

Every government has a different set of values. The more values we have in common, the better. We hope that in the near future, at least in the AI World Society, we will all share basic universal principles, among which transparency is valued, respected, and commonly practiced.