BGF Delegation Invited to Present at Vietnamese Cyber-security Conference

Last month, members of the World Leadership Alliance – Club de Madrid hosted a round table to discuss the future of democracy. The group is currently working on its Next Generation Democracy initiative, which seeks to host discussions on the current state and future of democracy and the challenges it faces worldwide. The core topics were “populism, political mistrust, and misinformation in the digital era.” One participant, NewCo CEO John Battelle, said that “two years ago we were not worried, now we are. There are a lot of elections this year and also a lot of intention to disrupt them.” A central topic was the use of social media for information campaigns and election interference, which is especially timely in the wake of the Cambridge Analytica story. A recording of the round table, including the participants’ speeches on the topic, is available to watch.

AIWS and the Boston Global Forum are working closely with the Club de Madrid on its Next Generation Democracy initiative. We believe that artificial intelligence will have a significant impact on governance and information. Used beneficially, AI can help identify, remove, and prevent fake news. We are also honored to work with former Latvian President Vaira Vike-Freiberga, President of the Club de Madrid and a member of our own Board of Thinkers.

Former Estonian President Toomas Hendrik Ilves has warned about the twin dangers of social media misuse and fake news. In an interview with Digital Trends, President Ilves discussed how “social media creates a news outlet without oversight,” allowing data and information to be manipulated for malicious purposes. His warning is especially apt after the recent news on Cambridge Analytica, which revealed that the political consulting firm improperly harvested Facebook data to influence the 2016 U.S. elections and the Brexit campaign. He also warned of the continuing influence of bots on platforms like Twitter, which can spread misinformation automatically.

Online data and privacy are subjects President Ilves knows well. During his tenure, Estonia carried out an extensive digitization program, earning it the nickname “The E-Republic.” For his dedication to online ethics, digital responsibility, and cybersecurity, President Ilves was named a BGF World Leader in Cybersecurity on December 12, 2017.

The tech story of the week: Cambridge Analytica. The London-based consulting firm became a household name last week after the New York Times reported that it had improperly harvested data from millions of Facebook users through a third-party app in order to benefit the Trump campaign. Cambridge Analytica collected information on more than 50 million users, one of the largest data leaks in the history of social media. The data was then used, under the direction of Trump adviser Steve Bannon, to influence the 2016 U.S. elections. The revelations come soon after Special Counsel Robert Mueller indicted 13 Russian nationals for using Facebook to interfere in the election.

The scandal has already had major consequences. On Friday, UK authorities raided Cambridge Analytica’s offices and servers as part of an investigation into the harvested Facebook data and its use not only in the U.S. but also in the Brexit campaign. Facebook CEO Mark Zuckerberg posted an apology and explanation on the platform and has volunteered to testify before Congress. Even so, the backlash has been fierce, with many outraged at the breach of privacy and some, including Elon Musk, leaving Facebook altogether.

This dramatic breach of privacy and misuse of online data again raises the question of how our data is used. The Boston Global Forum has published the Ethics Code of Conduct for Cyber Peace and Security (ECCC), which advises organizations and individuals on how to navigate and manage cyberspace responsibly. Among its many recommendations is that businesses should “take responsibility for handling sensitive corporate data stored electronically.” The Cambridge Analytica case shows how imperative this is. AIWS encourages companies and individuals to behave responsibly online in line with our ECCC guidelines.

One of the many places you might encounter artificial intelligence is the doctor’s office. Some doctors and healthcare professionals are using AI algorithms to scan medical files rapidly, flag irregularities such as tumors, and even make diagnoses. There are already hopeful signs that AI can help detect Alzheimer’s disease, a notoriously hard-to-diagnose condition. Typically, doctors examine medical records and collect observations about the patient, and an AI can perform both tasks far faster. A device designed at MIT is currently being tested here in Boston at McLean Hospital and Harvard Medical School.

As exciting as these developments are, skeptics are raising another question: is it ethical? Artificial intelligence and machine learning currently serve an advisory role in healthcare. What happens when they make decisions that affect a patient? If AI makes treatment decisions on its own, even life-or-death ones, how do we prevent malfunctions? The medical applications of AI are among the many topics we consider when we discuss AI ethics. AIWS is working with the tech industry as well as healthcare professionals, including Dr. David Silbersweig of McLean and Beth Israel Hospitals, a member of our Board of Thinkers.

Last Sunday, a self-driving vehicle struck and killed a pedestrian in Tempe, Arizona, the first known pedestrian fatality involving a fully autonomous vehicle. The vehicle, an SUV, belonged to Uber, which was testing a fleet of self-driving vehicles in several cities in the U.S. and Canada. At the time of the accident, the car was in fully autonomous mode, meaning it was driving without human intervention, although a test driver was behind the wheel as a safeguard. Uber has since suspended its self-driving car program and has stated that it is cooperating fully with the investigation.

In a tweet on March 19, 2018, Uber Comms (@Uber_Comms) wrote: “Our hearts go out to the victim’s family. We’re fully cooperating with @TempePolice and local authorities as they investigate this incident.”

Among the agencies investigating is the National Highway Traffic Safety Administration (NHTSA), which has been revising its rules and regulations on allowing self-driving cars on the road. Several other companies, including Tesla and Google, are developing their own self-driving cars, and some estimates project that these vehicles could be road-ready within the next few years.

AIWS mourns this tragic loss of life. We are working closely with policymakers, business leaders, and technologists on the safety and ethical issues surrounding artificial intelligence, including self-driving cars. It is crucial to engineer this new technology safely in order to prevent misuse or malfunction, and to ensure that it benefits everyone without causing harm.

The Artificial Intelligence World Society will host a round table in Tokyo, Japan, on April 2 to discuss the ethics of artificial intelligence. The central topic will be An Ethical Framework for AI, our seven-layer model for ensuring the beneficial application of AI technologies. We will be joined by a Special Adviser to Japanese Prime Minister Shinzo Abe, chief engineers from Hitachi, and thought leaders and representatives of other leading Japanese companies developing AI.

An Ethical Framework for AI is our current project, the result of extensive collaboration with policymakers, academics, tech experts, and industry leaders from around the world. We believe that this framework, if followed, will help ensure that artificial intelligence is used for the betterment of society and not for malicious purposes. The Framework will be officially announced on April 25, 2018, at our annual BGF-G7 Summit Conference. Here are the seven layers:

Artificial Intelligence World Society (AIWS) Model

Layer 7 (Application Layer) Application and Services

Layer 6 (Public Service Layer) Public Services: Transportation, Healthcare, Education, Justice System

Layer 5 (Policy Layer) Policy, Regulation, Convention, Norms

Layer 4 (Legislation Layer) Law and Legislation

Layer 3 (Tech Layer) Technical Management, Data Governance, Algorithm Accountability, Standards, IT Experts Management

Layer 2 (Ethics Layer) Ethical Frameworks, Criteria, Principles

Layer 1 (Charter Layer) Charter, Concepts

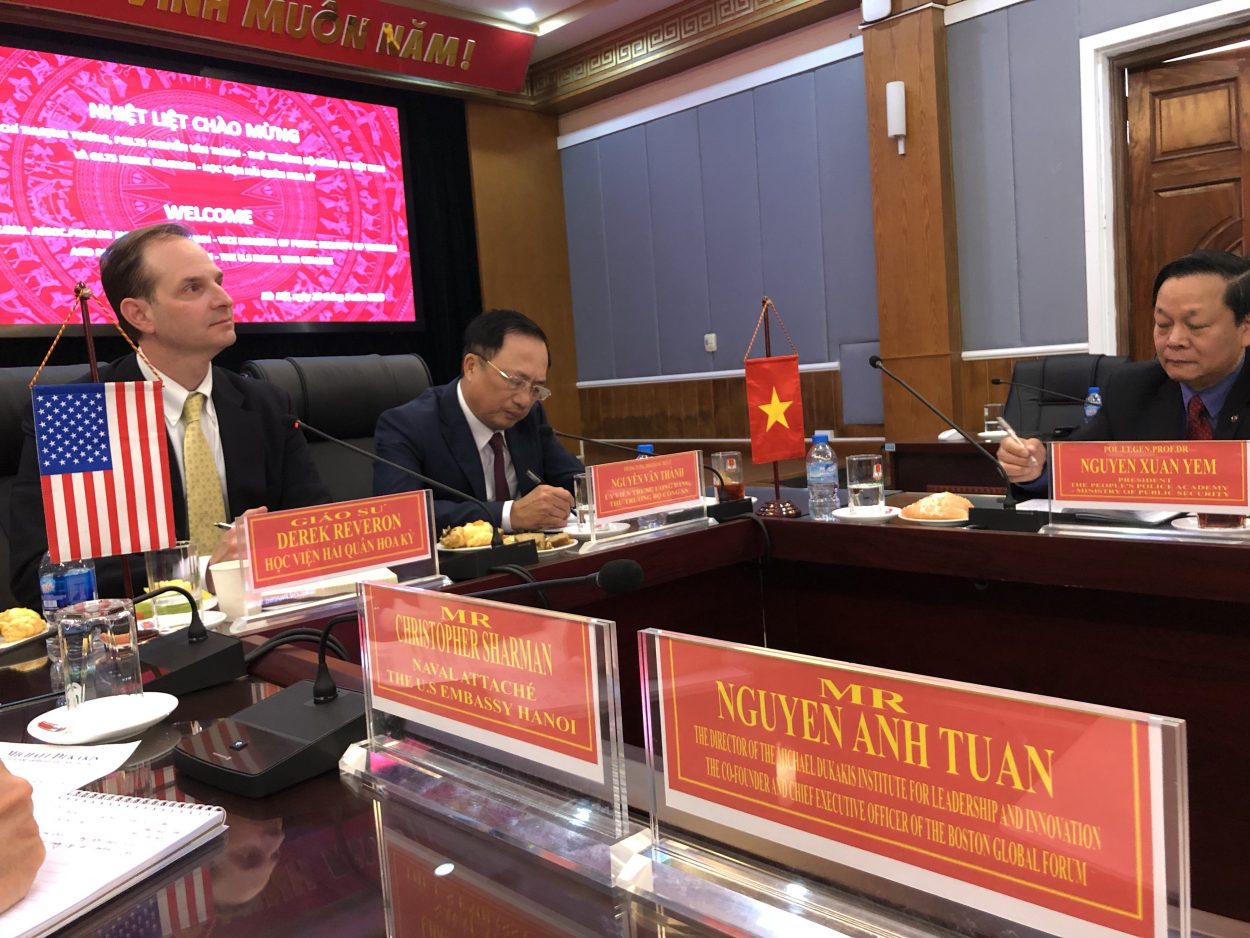

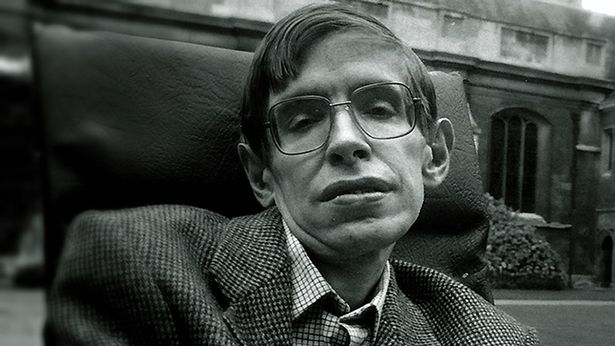

On March 31, a memorial service for the late Professor Stephen Hawking will be held in Hanoi, Vietnam, at the same time as his funeral service in Cambridge, UK, where Professor Hawking lived and worked. Attendees in Hanoi will include representatives from the Michael Dukakis Institute for Leadership and Innovation, Boston Global Forum, VietnamNet, and VietNam Reports, as well as a number of Vietnamese ministers, scientists, and thought leaders.

BGF and MDI will be represented by CEO Nguyen Anh Tuan and by Professor Derek Reveron of the U.S. Naval War College, a member of our Board of Thinkers. Professor Hawking was named a Distinguished Global Citizenship Educator by BGF’s Global Citizenship Education Network, and AIWS is working with his colleagues to keep his ideas and predictions about artificial intelligence alive. Please join us in honoring and remembering the legacy of Professor Stephen Hawking, who dedicated his life to humanity’s future and its place in the vast cosmos.