by BGF | Oct 28, 2018 | News

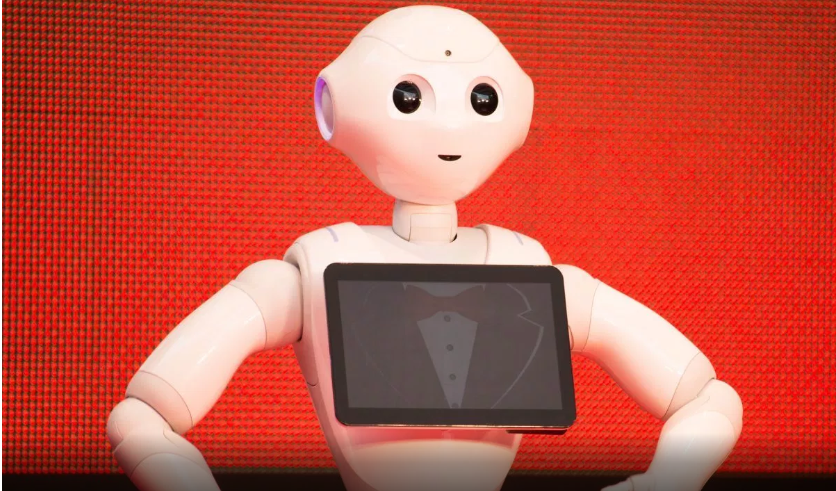

Pepper, a humanoid robot developed by SoftBank, is set to become the first robot to testify before the UK Parliament about AI.

Since the beginning of the Fourth Industrial Revolution, considerable changes have taken place, bringing benefits as well as challenges for humanity. One of the biggest debates concerns the rise of AI and robotics, which is expected to alter society significantly. Leaders, researchers, and experts have offered many explanations of how AI and robotics are developing, but we rarely hear from the robots themselves. “If we’ve got the march of the robots, we perhaps need the march of the robots to our select committee to give evidence,” said Robert Halfon, chair of the Commons Education Select Committee.

Hence, Pepper will testify on AI and robotics before the Commons Education Select Committee. Equipped with microphones, two HD cameras, and a touchscreen on its chest for displaying illustrations, the robot will help answer the Committee’s questions about Industry 4.0.

“This is not about someone bringing an electronic toy robot and doing a demonstration,” said Mr. Halfon. “It’s about showing the potential of robotics and artificial intelligence and the impact it has on skills.”

For better or worse, the future of AI lies mainly in the hands of its developers: it is up to us humans to decide. A set of guidelines on ethics and standards is therefore needed for developers to follow, covering not just what to make but also how to make it ethically. This is exactly what the Michael Dukakis Institute for Leadership and Innovation (MDI) is attempting to do. So far, the organization has been developing the AIWS Index for governments and enterprises.

by BGF | Oct 28, 2018 | News

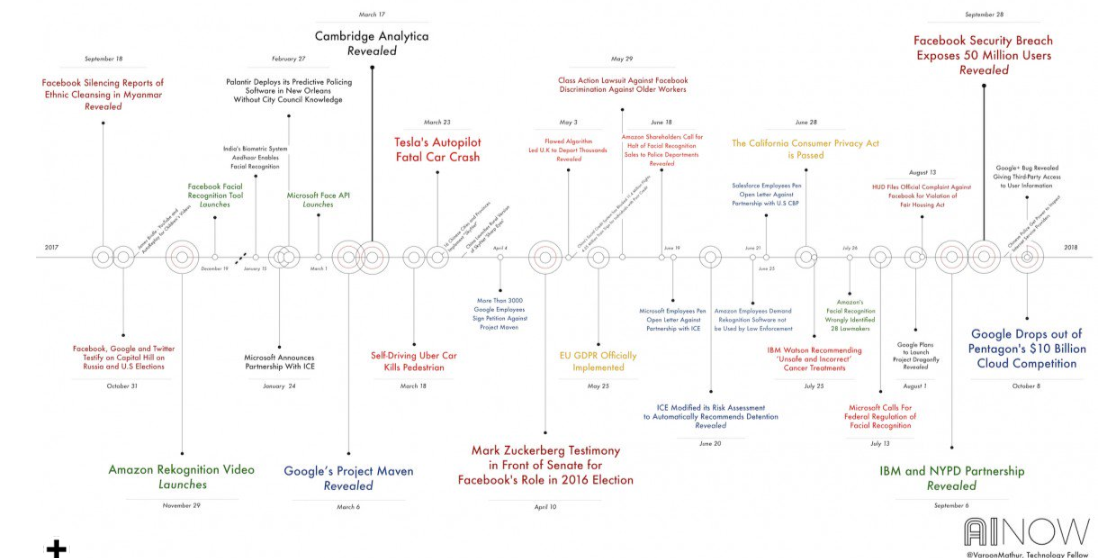

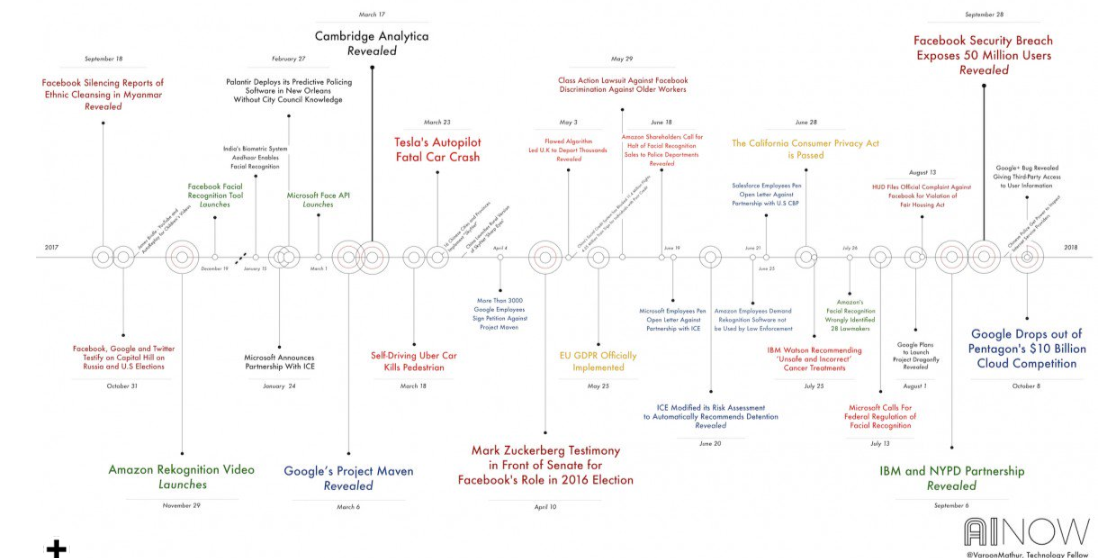

Because people’s morals differ with their origin and class, some legal scholars argued at the AI Now 2018 Symposium that ethics are too subjective for AI to follow.

Researchers, leaders, and activists around the world have emphasized that AI, and its developers, must follow standards to ensure human safety. But who gets to define and enforce those ethics?

MIT researchers have developed a platform known as the Moral Machine, which examines how people’s opinions diverge on driving situations by asking what they would prioritize in an accident. The results, gathered from millions of people around the world, show huge variation across cultures. Moreover, a study from North Carolina State University found that reading ethical standards does not actually affect people’s behavior: after reading a code of ethics, software engineers still do not do anything differently.

In the discussion, Philip Alston, an international legal scholar at NYU’s School of Law, proposed a way to deal with the ambiguous and unaccountable nature of ethics: reframing AI-driven consequences in terms of human rights. “[Human rights are] in the constitution,” said Alston. “They’re in the bill of rights; they’ve been interpreted by courts. If an AI system takes away people’s basic rights, then it should not be acceptable,” he added.

The Michael Dukakis Institute is currently collaborating with AI World to publish reports and programs on AI-Government, including the AIWS Index and AIWS Products, to solve key problems in ethical AI faced by businesses, governments and the world at large. Notably, this year the Michael Dukakis Global Initiative is the International Sponsor of AI World – aiworld.com – coming to Boston on Dec 3-5, 2018. The AI World Conference & Expo draws over 3,000 business and technology professionals from Global 2000 enterprises, and includes 100+ sessions, 200+ speakers and 85+ sponsors and exhibitors. AI World is the largest independent enterprise AI business event in the world. The conference program and special events focus on how AI is helping to drive business innovation at large companies and cover the complete state of the practice of AI today. AI World is organized around a singular goal: enabling business leaders to build a competitive advantage, drive new business models and opportunities, reduce operational costs and accelerate innovation efforts.

Link to the AI World Conference & Expo event: https://aiworld.com/

by BGF | Oct 28, 2018 | News

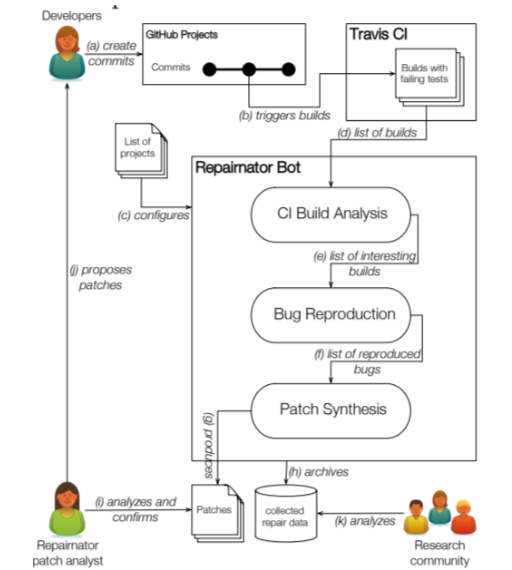

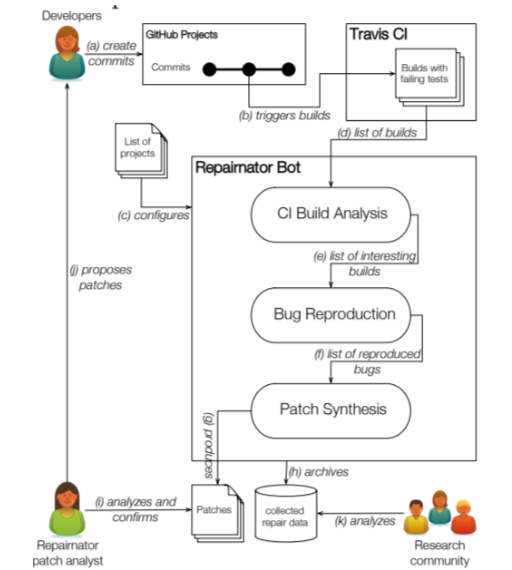

Researchers at KTH Royal Institute of Technology in Stockholm have built a bot called Repairnator that can compete with humans at finding bugs and writing bug fixes.

Repairnator, a robot programmer, can fix bugs well enough to compete with human engineers. This can be considered a milestone in software engineering research on automatic program repair.

Martin Monperrus and his team, the creators of the bot, tested it by having Repairnator pose as a human developer and compete with human developers to write patches on GitHub, a website for developers. “The key idea of Repairnator is to automatically generate patches that repair build failures, then to show them to human developers, to finally see whether those human developers would accept them as valid contributions to the code base,” said Monperrus.

The team created a GitHub user called Luc Esape, who was presented as a software engineer at their research facility. “Luc has a profile picture and looks like a junior developer, eager to make open-source contributions on GitHub,” they say. Repairnator then submitted its patches under Luc’s identity.

Repairnator was put through two tests. In the first, which ran from February to December 2017, the bot was run against 14,188 GitHub projects and scanned them for errors. Over that period, Repairnator analyzed more than 11,500 builds with failures. It was able to reproduce the failure in 3,000 cases and developed a patch in 15 cases. However, none of those patches was usable: Repairnator took too long to develop them, and their quality was too low to be accepted.
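To put the first test in perspective, the short sketch below works out how many failing builds made it through each stage. It uses only the rounded figures quoted above (11,500 failing builds, 3,000 reproduced failures, 15 patches); the function and variable names are illustrative and are not taken from the Repairnator project.

```python
def stage_rate(passed: int, total: int) -> float:
    """Return passed/total expressed as a percentage."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * passed / total


# Rounded figures as quoted in the article (hypothetical variable names).
failing_builds = 11_500  # builds with failures that were analyzed
reproduced = 3_000       # failures the bot managed to reproduce
patched = 15             # reproduced failures for which a patch was produced

reproduction_rate = stage_rate(reproduced, failing_builds)
patch_rate = stage_rate(patched, reproduced)
overall_rate = stage_rate(patched, failing_builds)

print(f"Reproduced: {reproduction_rate:.1f}% of failing builds")
print(f"Patched:    {patch_rate:.1f}% of reproduced failures")
print(f"Overall:    {overall_rate:.2f}% of failing builds")
```

On these figures, roughly a quarter of the failing builds could be reproduced, and only about one in 200 reproduced failures led to a patch, which helps explain why the team adjusted the bot before the second test.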

In the second test, “Luc” was set to work on the Travis continuous integration service from January to June 2018. After a few adjustments, on January 12 it wrote a patch that a human moderator accepted into a build. Over six months, Repairnator went on to produce five acceptable patches, which suggests it can actually compete with humans in this field of work. However, the team ran into a problem when it received the following message from one of the developers: “We can only accept pull-requests which come from users who signed the Eclipse Foundation Contributor License Agreement.” Luc cannot sign a license agreement. “Who owns the intellectual property and responsibility of a bot contribution: the robot operator, the bot implementer or the repair algorithm designer?” asked Monperrus and his colleagues. The bot could bring enormous potential to the industry, but it also raises questions about rights, intellectual property, and responsibility.

The issues related to the rights and responsibilities of AI systems are studied in the AIWS Initiative of the Michael Dukakis Institute for Leadership and Innovation (MDI). Layer 3 of the AIWS 7-Layer Model focuses primarily on AI development and resources, including data governance, accountability, development standards, and the responsibility of all practitioners involved directly or indirectly in creating AI.

by BGF | Oct 28, 2018 | News

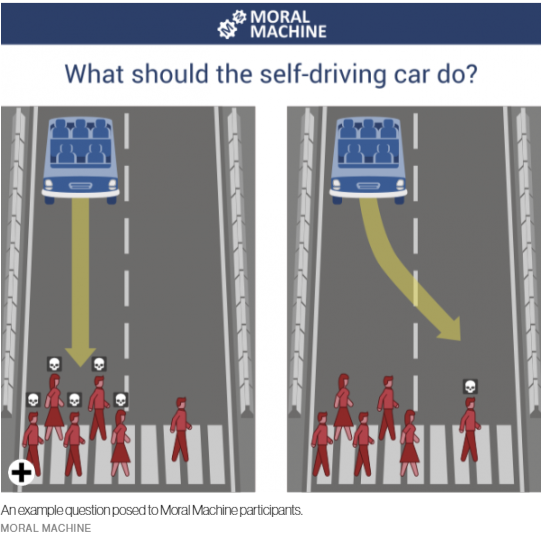

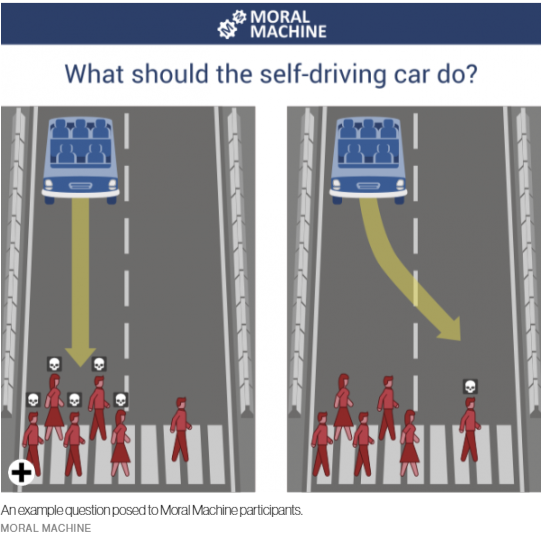

In 2014, the MIT Media Lab launched the Moral Machine to gather data on how people from different cultures set moral priorities.

After four years of research, millions of people in 233 countries and territories have made 40 million decisions, making it one of the largest studies ever done on global moral preferences. The experiment used a variation of the “trolley problem” to test people’s opinions. It placed people in a scenario where a car on a highway encounters different people on the road. Whose life should be sacrificed to spare the others? The study tested nine different comparisons, including whether a self-driving car should prioritize humans over pets, passengers over pedestrians, more lives over fewer, women over men, young over old, fit over sickly, higher social status over lower, and law-abiders over law-benders.

The data reveal that ethical standards for AI vary with culture, economics, and geographic location. While collectivist cultures like China and Japan are more likely to spare the old, countries with more individualistic cultures are more likely to spare the young.

In terms of economics, participants from low-income countries are more tolerant of jaywalkers relative to pedestrians who cross legally. Meanwhile, in countries with a high level of economic inequality, there is a wider gap between how participants treat individuals of high and low social status.

Unlike in the past, today we have machines that can learn through experience and have minds of their own. This calls for comprehensive standards to regulate and control unpredictable disruptions from artificial intelligence, which is also the shared aim of research conducted by major independent organizations such as the MIT Media Lab, IEEE, and the Michael Dukakis Institute.

by BGF | Oct 28, 2018 | News, Practices in Cybersecurity

Mikko Hypponen, a renowned authority on computer security and the Chief Research Officer of F-Secure, was invited by Tomorrow’s Capital to discuss cybercrime and how quickly it is growing.

Mikko Hypponen, the Chief Research Officer of F-Secure, was named Practitioner in Cybersecurity by the Boston Global Forum and the Michael Dukakis Institute on December 12, 2015, for his great work and active contribution to the public’s knowledge of cybersecurity.

At the beginning of October, Mikko Hypponen was invited to talk about cybercrime on Nasdaq’s Tomorrow’s Capital podcast. In his remarks, he addressed the rise in crime driven by cryptocurrencies, which are easier to target and steal. This has also led to cases where people who hold crypto are targeted or even killed, after which everything is untraceable for local authorities because of the anonymous nature of cryptocurrency.

Beyond that, the Internet of Things has become another concern. Devices throughout our daily lives are now connected, usually through an app on a smartphone that links everything else together. “Let’s say they’ve bought an IoT washing machine and they are told that it’s hackable. Well, what they think is that, well, I don’t care. It’s a washing machine…Well, that’s not the point. They are not hacking your washing machine or your fridges to gain access to your washing machine or to your fridge. They are hacking those devices to gain access to your network,” said Hypponen.

That means not only financial institutions are at risk nowadays, but also social infrastructure and even our privacy. Therefore, Hypponen emphasizes that cybersecurity efforts should not be overlooked. It’s a new world, one that requires new ideas, innovations, and tactics to ensure a safer future.

by BGF | Oct 28, 2018 | News

On 27-28 March, during a trip to the United Kingdom, Dr. Vaira Vīķe-Freiberga, President of the World Leadership Alliance – Club de Madrid, participated in events marking the centenary of Latvia’s statehood in London and Edinburgh.

Dr. Vaira Vīķe-Freiberga is in the middle of the photo. She is a former President of Latvia (1999-2007) and currently President of the World Leadership Alliance – Club de Madrid, which partners with the Michael Dukakis Institute for Leadership and Innovation on the AIWS 7-Layer Model to Build Next Generation Democracy.

President Vīķe-Freiberga took part in the events in London and Edinburgh that marked 100 years of Latvian statehood. In her talks, she emphasized the nation’s key values: freedom, democracy and equality.

On 27 March, a discussion was held by the Embassy of Latvia and the European Bank for Reconstruction and Development (EBRD). In a conversation with Sergei Guriev, EBRD Chief Economist, and Alexia Latortue, EBRD Managing Director for Corporate Strategy, Dr. Vīķe-Freiberga spoke on the theme of “Women, Development and Democracy”. She explained the mechanisms of social stigmatization and their negative influence on the development of society.

The next day, Dr. Vīķe-Freiberga delivered a lecture on “Values and Democracy in the Contemporary World” to students and professionals at the University of Edinburgh. She explained to her audience the place of the Latvian people in the development of European civilisation and values, and she also noted the controversial debate over applying the Istanbul Convention (the Council of Europe Convention on preventing and combating violence against women and domestic violence) in Latvia. She strongly emphasized that liberal values do not run counter to family values. The event is considered one of the university’s best-attended in recent years.

by BGF | Oct 21, 2018 | 7-Layer Model of AI World Society

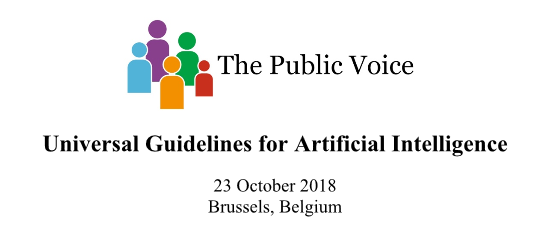

Professor Marc Rotenberg, President of the Electronic Privacy Information Center (EPIC) and a member of the Michael Dukakis Institute’s AIWS Standards and Practice Committee, recently published the Universal Guidelines for AI. The guidelines will be opened for public sign-on and later released at the Public Voice event in Brussels on October 23, 2018.

The emergence of AI is transforming the world, steering everything from science and industry to government administration and finance in a whole new direction. The rise of AI decision-making also implicates fundamental rights of fairness, accountability, and democracy. Many of these decisions are opaque to the people they affect, leaving them unaware of whether the decisions were accurate.

Aware of this situation, Prof. Marc Rotenberg proposed the Universal Guidelines to guide the design and use of AI. They form part of the first layer of the AIWS 7-Layer Model built by the Michael Dukakis Institute. The goals of this model are to create a society of AI for a better world and to ensure peace, security, and prosperity.

These guidelines should be incorporated into ethical standards, adopted in national law and international agreements, and built into the design of systems. The guidelines comprise twelve principles:

- Right to Transparency. All individuals have the right to know the basis of an AI decision that concerns them. This includes access to the factors, the logic, and techniques that produced the outcome.

- Right to Human Determination. All individuals have the right to a final determination made by a person.

- Identification Obligation. The true operator of an AI system must be made known to the public.

- Accountability Obligation. Institutions must be responsible for decisions made by an AI system.

- Fairness Obligation. Institutions must ensure that AI systems do not reflect bias or make impermissible discriminatory decisions.

- Accuracy, Reliability, Validity, and Replicability Obligations. Institutions must ensure the accuracy, reliability, validity, and replicability of decisions.

- Data Quality Obligation. Institutions must ensure data provenance, quality, and relevance for the data input into algorithms. Secondary uses of data collected for AI processing must not exceed the original purpose of collection.

- Public Safety Obligation. Institutions must assess the public safety risks that arise from the deployment of AI systems that direct or control physical devices.

- Cybersecurity Obligation. Institutions must secure AI systems against cybersecurity threats.

- Prohibition on Secret Profiling. No institution shall establish or maintain a secret profile on an individual.

- Prohibition on National Scoring. No national government shall establish or maintain a score on its citizens or residents.

- Termination Obligation. An institution that has established an AI system has an affirmative obligation to terminate the system if it will lose control of the system.

The Universal Guidelines for AI are currently open to public sign-on by individuals and organizations. On October 23, they will be introduced at the Public Voice event in Brussels.

by BGF | Oct 21, 2018 | News

The AIWS Standards and Practice Committee of the Michael Dukakis Institute welcomes two new members: Prof. Mikhail Kupriyanov of Saint Petersburg Electrotechnical University “LETI”, Russia, and Prof. Thomas Creely of the U.S. Naval War College.

The Committee is established with responsibilities to:

- Update and collect information on threats and potential harm posed by AI.

- Connect companies, universities, and governments to find ways to prevent threats and potential harm.

- Engage in the audit of behaviors and decisions in the creation of AI.

- Create both an Index and Report about AI threats – and identify the source of threats.

- Create a Report on respect for, and application of, ethics codes and standards of governments, companies, universities, individuals and all others…

The AIWS Standards and Practice Committee, founded by Governor Michael Dukakis, has 23 members. We are delighted to introduce our two newest members.

The first is Prof. Mikhail Kupriyanov, an expert in intelligent methods for data and process analysis, artificial intelligence, and embedded systems. He has been a professor in the Department of Computer Engineering at LETI since 1993 and head of the Department since 2015. He has headed the Computer Technologies and Informatics Faculty at LETI since 2010 and has been Director of the Education Department since 2018.

The second is Prof. Thomas Creely, Associate Professor of Ethics and Director of the Ethics & Emerging Military Technology Graduate Program. He serves on a NATO Science and Technology Organization Technical Team and is the lead for leadership and ethics in Brown University’s Executive Master of Cybersecurity program. He also serves on the Conference Board Global Business Conduct Council, as Business Ethics Chair of the Association for Practical & Professional Ethics, and on the Robert S. Hartmann Institute Board.

by BGF | Oct 21, 2018 | News

Are we letting AI make Life-or-Death Judgments?

At the Cybernetic AI Self-Driving Car Institute, AI software for self-driving cars is being developed. One crucial aspect of that AI is the need to make “judgments” in specific driving situations, some of which might lead to life-or-death outcomes.

While driving, we can encounter unexpected situations shaped by many factors: traffic, signs, pedestrians, and the terrain. Many actions are possible, each leading to different outcomes, and we have only seconds to decide with people’s lives in the balance. Dr. Lance Eliot, CEO of Techbrium Inc. and the AI Trends Insider, analyzes one such circumstance: we are driving on a highway with cars behind us and in the other lanes when the shadowy figure of a pedestrian suddenly appears. In this case, whatever action we take, casualties and damage are inevitable, because we might hit the pedestrian or other cars. An AI driver could face the same situation as a human driver. Some argue that self-driving cars will ensure nothing like this ever happens, but that cannot be guaranteed given the limitations of sensors and the immaturity of the technology.

Should we leave the decision making to AI?

If we do, we need to design tests of the AI’s ethical decision-making or judgment to avoid potential harm. Because automated software does not have a human sense of reasoning, it may not be able to act appropriately when such a situation arises. Prioritizing factors raises a further ethical challenge: how can we tell which course of action is best at a given moment?

To avoid such cases in the future, we should not leave this to technology developers alone; an Ethics Review Board should be used. Ethics Review Boards might be established at a federal level and/or a state level. They would be tasked with guiding what the AI should do when it encounters such moments (providing the policies and procedures, rather than somehow “coding” such aspects). They might also be involved in assessing incidents in which AI self-driving cars go outside the scope of their learned knowledge.

As technology develops at unprecedented speed, few people pay attention to ethics. The automation of cars will require careful consideration, sampling, and monitoring regulations, as well as an ethical framework for AI, which experts at the Michael Dukakis Institute are working on in partnership with AI Trends.