by BGF | Apr 22, 2019 | News

China is developing artificial intelligence on an unparalleled scale, but the US aims to beat it by inventing the next big ideas.

This week, the Defense Advanced Research Projects Agency (DARPA) showcased projects that are part of a new five-year, $2 billion plan to foster the next round of out-there concepts that will bring about new advances in AI. These include efforts to give machines common sense; to have them learn faster, using less data; and to create chips that reconfigure themselves to unlock new AI capabilities.

Speaking at the event, Michael Kratsios, deputy assistant to the president for technology policy at the White House, said the agency’s efforts are a key part of the government’s plan to stay ahead in AI. “This administration supports DARPA’s commitment, and shares its intense interest in developing and applying artificial intelligence,” Kratsios said.

President Trump signed an executive order last month to launch the US government’s AI strategy, called the American AI Initiative. Kratsios, who is also the government’s deputy chief technology officer, has been the driving force behind White House strategy on AI. The American AI Initiative calls for more funding and will make data and computing resources available to AI researchers. “DARPA has a long history of making early investments in fundamental research that has had amazing benefits,” Kratsios said. “[It] is building on this success in artificial-intelligence research.”

Since DARPA’s inception in 1958, it’s had something of a mixed track record, with many projects failing to deliver big breakthroughs. But the agency has had some notable successes. In the ’60s, it developed a networking technology that eventually evolved into the internet. More recently, it funded a personal-assistant project that led to Siri, the AI helper acquired by Apple in 2010.

But many of the algorithms now considered AI were developed many years ago, and they are fundamentally limited. “We are harvesting the intellectual fruit that was planted decades ago,” says John Everett, deputy director of DARPA’s Information Innovation Office. “That’s why we’re looking at far forward challenges—challenges that might not come to fruition for a decade.”

Through its AI Next program, DARPA has launched nine major research projects meant to tackle those limitations. They include a major effort to teach AI programs common sense, a weakness that often causes today’s systems to fail. Giving AI a broader understanding of the world—something that humans take for granted—could eventually make personal assistants more helpful and easier to chat with, and it could help robots navigate unfamiliar environments.

Another DARPA project will seek to develop AI programs that learn using less data. Training data is the lifeblood of machine learning, and algorithms that can ingest more of it can leap ahead of the competition. An innovation in this area could knock out a key advantage of tech companies operating in China, for example, which thrive on their access to an abundance of data. Other projects being funded focus on designing more efficient AI chips; exploring ways to explain the decision-making of opaque machine-learning tools; and making AI programs more secure.

To some degree, though, the AI Next initiative shows how tricky it is to gauge progress and prowess in AI. Much has been made of China’s efforts, and its government has declared an ambitious plan to “dominate” the technology. Other countries have also announced AI plans, and are pouring billions into them. But the US still spends more than any other nation on technology research and development.

Total investment matters, of course—but it’s only one part of the equation. The US has long been focused on funding emerging research through academia and agencies like DARPA. And that, in turn, has shaped the technological landscape in ways that weren’t always evident at first.

Take self-driving cars, for example. A decade ago, DARPA organized a series of driverless-vehicle contests in desert and urban settings. The competitions triggered a wave of excitement about the potential for automated driving, and a huge wave of investment followed. Many researchers who took part went on to start Google’s driverless-car effort. It’s still unclear how automated driving will change transportation, but some cars, such as those sold by Tesla, already offer limited forms of automation.

“Without DARPA coming in, [the self-driving-car boom] probably wouldn’t have happened at that scale at that time,” says Peter Stone, a professor at the University of Texas who took part in the car contest. He believes it’s vital for the US government to identify an unsolved AI problem and tackle it. “It may not happen, but if it works it will have huge implications,” he says.

by BGF | Apr 22, 2019 | News

Startup OpenAI spent years developing an AI that could play the classic 5v5 game Dota 2.

In a remarkable breakthrough for artificial intelligence, a team of AI agents has defeated the world champions of the competitive video game Dota 2. This victory over humans isn’t the first for a game-playing AI, given the success of software in playing Go and poker, but the Dota-playing AI had to master the art of teamwork to work alongside both other AI agents and human players.

Collaborative Intelligence

When DeepMind’s Go-playing AI, AlphaGo, recently defeated the world Go champion, that victory was remarkable because of the sheer number of possible moves and combinations in the game. Go is so complex that even a supercomputer can’t calculate good moves by brute force. Instead, AlphaGo had to rely on intuition, or at least the machine learning equivalent, learning the game from scratch and then inventing moves humans never would have considered. But Dota 2 is a different kind of challenge for AI, which tends to struggle with concepts such as abstract reasoning and teamwork, qualities the game has in spades.

In Dota 2, ten players form two teams of five who fight to take objectives on the map. Neither team has a full view of everything going on at all times, so players must work together to win. The qualities that make Dota 2 a challenging game for many humans also make it an ideal testing ground for next-gen AI.

Startup OpenAI has been developing a Dota-playing AI for a few years now. This weekend, its team of AIs faced the ultimate test: playing a five-on-five match against OG, the Dota 2 reigning world champions, in a best-of-three series. In an exhibition match in San Francisco on Saturday, OpenAI claimed victory with a clean sweep.

Perfect Strangers

Although both games were close, the human players were eventually outmaneuvered. In the human players’ defense, however, OpenAI limited the complexity of the game somewhat, banning several strategies, including some that the members of OG like to use. In that sense, OG came into this game with a handicap.

Still, this competition isn’t really about who won and who lost. OpenAI’s goal is to build an artificial intelligence that could make judgement calls based on incomplete data and could cooperate with a stranger, the kinds of things humans do all day but which are terribly difficult to teach to a machine. Each of OpenAI’s five bots worked independently, so they might as well have been playing with perfect strangers.

In fact, in some of the matches they were. During another match at the exhibition, OpenAI mixed up the sides, pitting two teams, each made up of two humans and three AIs, against each other. OpenAI says even its own researchers were surprised at how well the bots were able to work with humans they’d never met.

OpenAI is done with Dota 2 for now, but the lessons learned could help design collaborative AI for all sorts of applications. The AI systems the company builds will likely have to work alongside humans someday, and they’ll be well-equipped to do so.

by BGF | Apr 22, 2019 | News

AI is set to aid the development of nuclear fusion reactors in a big way by predicting when major disruptions could halt reactions and damage the reactor.

While some claim that a stable nuclear fusion reactor capable of producing near-limitless, clean energy could be just over a decade away, researchers are still very much in the experimental stage of development. The biggest obstacle to commercial energy production is controlling the intense, highly unstable plasma within the reactor; attempts so far have lasted only a matter of minutes.

However, a team at the US Department of Energy’s Princeton Plasma Physics Laboratory (PPPL) has found a way to use deep learning, a form of artificial intelligence (AI), to predict the disruptions that can halt fusion reactions and damage doughnut-shaped tokamak reactors.


“This research opens a promising new chapter in the effort to bring unlimited energy to Earth,” said Steve Cowley, director of PPPL, about the study published in Nature. “AI is exploding across the sciences and now it’s beginning to contribute to the worldwide quest for fusion power.”

Crucial to this new deep learning algorithm – called the Fusion Recurrent Neural Network (FRNN) – has been its access to 2TB of data provided by two major fusion facilities: the DIII-D National Fusion Facility in California and the Joint European Torus (JET) in the UK.

These facilities are the largest in the US and the world, respectively, and PPPL trained the AI system on these vast databases to reliably predict disruptions on other tokamaks.

‘Fusion science is very exciting’

Explaining the importance of deep learning to the project, the team said it can achieve what other forms of AI cannot in nuclear fusion development.

For example, while software that does not use deep learning might consider the temperature of a plasma at a single point in time, the FRNN considers profiles of the temperature as they develop in time and space.
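To make that contrast concrete, here is a minimal sketch of how a recurrent network consumes a sequence of spatial profiles rather than a single reading. The article does not describe FRNN's internals, so everything below is an illustrative assumption: a plain (Elman) recurrent update with random, untrained weights and synthetic input, whereas practical predictors usually rely on gated cells such as LSTMs. The function names and array shapes are hypothetical.

```python
import numpy as np


def single_point_feature(profiles: np.ndarray) -> float:
    """What a simpler model might use: one temperature value at one position and time."""
    return float(profiles[-1, 0])


def rnn_final_state(profiles, W_in, W_rec, b):
    """Apply a plain recurrent update over a (T, n_points) sequence of profiles."""
    h = np.zeros(W_rec.shape[0])
    for x_t in profiles:  # one spatial profile per time step
        # The new state mixes the current profile with memory of earlier ones.
        h = np.tanh(W_in @ x_t + W_rec @ h + b)
    return h


def disruption_score(h, w_out, b_out):
    """Squash a linear readout of the final state into (0, 1) with a sigmoid."""
    return 1.0 / (1.0 + np.exp(-(w_out @ h + b_out)))


rng = np.random.default_rng(0)
T, n_points, n_hidden = 50, 16, 8
# Synthetic stand-in for temperature profiles, not real tokamak data.
profiles = rng.normal(size=(T, n_points))
# Random, untrained weights: the score is meaningless until the network is trained.
W_in = rng.normal(scale=0.1, size=(n_hidden, n_points))
W_rec = rng.normal(scale=0.1, size=(n_hidden, n_hidden))
b = np.zeros(n_hidden)
w_out = rng.normal(scale=0.1, size=n_hidden)

h = rnn_final_state(profiles, W_in, W_rec, b)
score = disruption_score(h, w_out, 0.0)
print(f"single point: {single_point_feature(profiles):.3f}, sequence-based score: {score:.3f}")
```

Because the hidden state `h` is carried from one time step to the next, the final score can depend on how the whole profile evolved, which is the property the PPPL team highlights; a real predictor would learn its weights from labelled shots.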

So far, FRNN can predict true disruptions within the 30-millisecond warning window required by the International Thermonuclear Experimental Reactor (ITER), an international nuclear fusion megaproject underway in France. It is also closing in on ITER's additional requirement of 95pc correct predictions with fewer than 3pc false alarms.
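The two ITER targets amount to a simple counting rule over a set of plasma shots. The sketch below assumes one plausible scoring convention that the article does not spell out: a disruption counts as caught only if the first alarm fires at least 30 ms before it, and any alarm on a shot that never disrupts counts as a false alarm. The `Shot` type and `evaluate` function are hypothetical, and the example data is synthetic.

```python
from dataclasses import dataclass
from typing import List, Optional

# Thresholds quoted in the article; the counting rules below are assumptions.
MIN_WARNING_MS = 30.0        # alarm must precede the disruption by at least 30 ms
TARGET_TPR = 0.95            # at least 95% of disruptions correctly predicted
MAX_FALSE_ALARM_RATE = 0.03  # false alarms must stay below 3%


@dataclass
class Shot:
    disruptive: bool                # did this plasma discharge actually disrupt?
    alarm_lead_ms: Optional[float]  # ms between first alarm and disruption; None if no alarm.
                                    # For non-disruptive shots only "None or not" matters.


def evaluate(shots: List[Shot]) -> dict:
    disruptive = [s for s in shots if s.disruptive]
    quiet = [s for s in shots if not s.disruptive]
    # A disruption is "caught" only if the alarm gave enough warning time.
    caught = sum(
        1 for s in disruptive
        if s.alarm_lead_ms is not None and s.alarm_lead_ms >= MIN_WARNING_MS
    )
    # For non-disruptive shots, any alarm at all is a false alarm.
    false_alarms = sum(1 for s in quiet if s.alarm_lead_ms is not None)
    tpr = caught / len(disruptive) if disruptive else 0.0
    fpr = false_alarms / len(quiet) if quiet else 0.0
    return {
        "tpr": tpr,
        "fpr": fpr,
        "meets_target": tpr >= TARGET_TPR and fpr < MAX_FALSE_ALARM_RATE,
    }


# Synthetic example: 20 disruptive shots (one warned too late) and 100 quiet shots.
shots = (
    [Shot(True, 45.0)] * 19
    + [Shot(True, 10.0)]
    + [Shot(False, None)] * 97
    + [Shot(False, 0.0)] * 3
)
print(evaluate(shots))  # {'tpr': 0.95, 'fpr': 0.03, 'meets_target': False}
```

In this example the predictor hits the 95 percent mark exactly but still misses the target, because the false-alarm rate has to be strictly below 3 percent.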

Bill Tang, co-author of the research and a principal investigator at PPPL, said: “AI is the most intriguing area of scientific growth right now, and to marry it to fusion science is very exciting.

“We’ve accelerated the ability to predict with high accuracy the most dangerous challenge to clean fusion energy.”

The next step in the research will be to move from prediction to the control of disruptions, but this will be quite the challenge.

“We will combine deep learning with basic, first-principle physics on high-performance computers to zero in on realistic control mechanisms in burning plasmas,” Tang said. “By control, one means knowing which ‘knobs to turn’ on a tokamak to change conditions to prevent disruptions. That’s in our sights and it’s where we are heading.”

by BGF | Apr 21, 2019 | News

Many countries are now competing to use AI, or artificial intelligence, in the military sphere. But that may lead to a nightmare: a world where AI-powered weapons kill people based on their own judgement, without any human intervention.

What are fully autonomous lethal weapons?

Fully autonomous lethal weapons powered by AI are now becoming the biggest issue, as rapid advances in technology make them an actual possibility.

They differ from the armed UAVs (unmanned aerial vehicles) already deployed in actual warfare. Those UAVs are remotely controlled by humans, who make the final decisions about where and when to attack.

On the other hand, autonomous AI weapons would be able to make decisions without human intervention.

It is estimated that at least 10 countries are developing AI-equipped weapons. The United States, China, and Russia, in particular, are engaged in fierce competition. They believe that AI will be key in determining which country is better positioned than the others. Concerns are growing that the competition could lead to a new phase of the arms race.

Ban Lethal Autonomous Weapons, a non-governmental organization calling for a ban on such weapons, produced a video to demonstrate how dangerous these AI weapons could be.

The video shows a palm-sized drone that uses an AI-based facial recognition system to identify human targets and kill them by penetrating their skulls.

A swarm of micro-drones, released from a vehicle, flies to a target school and kills young people one after another as they try to flee.

The NGO warns that AI-based weapons may be used as a tool in terrorist attacks, not just in armed conflicts between states.

The video is entirely fictional, but there are moves toward using swarms of such drones in actual military activities.

In 2016, the US Department of Defense tested a swarm of 103 AI-based micro-drones launched from fighter jets. Their flights were not programmed in advance. They flew in formation without colliding, using AI to assess the situation for collective decision-making.

Radar imagery shows a swarm of green dots — drones — flying together, creating circles and other shapes.

An arms maker in Russia developed an AI weapon in the shape of a small military vehicle and released its promotional video. It shows the weapon finding a human-shaped target and shooting it. The company says the weapon is autonomous.

AI is also being eyed for command and control systems. The idea is to have AI help identify the most effective ways to deploy troops or carry out attacks.

The United States and other countries developing AI arms technology say fully autonomous weapons will avoid casualties among their service members. They also say such weapons will reduce human errors, such as bombing the wrong targets.

Warnings from scientists

But many scientists disagree. They are calling for a ban on autonomous AI lethal weapons. Physicist Stephen Hawking, who died last year, was one of them.

Just before his death, he delivered a grave warning. What concerned him, he said, is that AI could start evolving on its own, and “in the future, AI could develop a will of its own, a will that is in conflict with ours.”

There are several issues concerning AI lethal weapons. One is an ethical problem. It goes without saying that humans killing humans is unforgivable. But the question here is whether robots should be allowed to make a decision on human lives.

Another concern is that AI could lower the threshold for war for government leaders, because it would reduce both the cost of war and the loss of their own service members.

Proliferation of AI weapons to terrorists is also a grave issue. Compared with nuclear weapons, AI technology is far less costly and more easily available. If a dictator should get access to such weapons, they could be used in a massacre.

Finally, the biggest concern is that humans could lose control of them. AI devices are machines, and machines can break down or malfunction. They could also be subject to cyber-attacks.

As Hawking warned, AI could rise against humans. AI can quickly learn how to solve problems through deep learning on massive amounts of data. Scientists say this could lead to decisions or actions that go beyond human comprehension or imagination.

In the board games of chess and Go, AI has beaten human world champions with unexpected tactics. But why it employed those tactics remains unknown.

In the military field, AI might choose to use cruel means that humans would avoid, if it decides that would help to achieve a victory. That could lead to indiscriminate attacks on innocent civilians.

High hurdles for regulation

The global community is now working to create international rules to regulate autonomous lethal weapons.

Arms control experts are seeking to use the Convention on Certain Conventional Weapons, or CCW, as a framework for regulations. The treaty restricts the use of landmines, among other weapons. Officials and experts from 120 CCW member countries have been discussing the issue in Geneva. They held their latest meeting in March.

They aim to impose regulations before specific weapons are created. Until now, arms ban treaties have been made after landmines and biological and chemical weapons were actually used and atrocities were committed. In the case of AI weapons, it would be too late to regulate them after fully autonomous lethal weapons come into existence.

International officials and experts are discussing regulating autonomous lethal weapons but haven’t reached a conclusion.

The talks have been continuing for more than five years. But delegates have even failed to agree on how to define “autonomous lethal weapons.”

Some pessimists say that regulating AI weapons with a treaty is no longer viable. They argue that while talks stall, technology will advance quickly and the weapons will be completed.

Sources say discussions in Geneva are moving toward regulations less strict than a treaty. The idea is for each country to pledge to abide by international humanitarian law, then create its own rules and disclose them to the public. It is hoped that this will act as a brake.

In February, the US Department of Defense released its first Artificial Intelligence Strategy report. It says AI weapons will be kept under human control, and be used without violating international laws and ethics.

But challenges remain. Some question whether countries will interpret international laws in their favor to make regulations that suit them. Others say it may be difficult to confirm that human control is functioning.

Humans have created various tools capable of indiscriminate massacres, such as nuclear weapons. Now, the birth of AI weapons that could be beyond human control is steering us toward an unknown and dangerous domain.

Whether humans will be able to recognize the possible crisis and put a stop to it before it turns into a catastrophe is critical. It appears that human wisdom and ethics are now being tested.

by BGF | Apr 21, 2019 | News

Facebook is working on a voice assistant to rival the likes of Amazon’s Alexa, Apple’s Siri and the Google Assistant, according to several people familiar with the matter.

The tech company has been working on this new initiative since early 2018. The effort is coming out of the company’s augmented reality and virtual reality group, a division that works on hardware, including the company’s virtual reality Oculus headsets.

A team based in Redmond, Washington, has been spearheading the effort to build the new AI assistant, according to two former Facebook employees who left the company in recent months. The effort is being led by Ira Snyder, director of AR/VR and Facebook Assistant. The team has been contacting vendors in the smart speaker supply chain, according to two people familiar with the matter.

It’s unclear how exactly Facebook envisions people using the assistant, but it could potentially be used on the company’s Portal video chat smart speakers, the Oculus headsets or other future projects.


The Facebook assistant faces stiff competition. Amazon and Google are far ahead in the smart speaker market with 67% and 30% shares in the U.S. in 2018, respectively, according to eMarketer.

In 2015, Facebook released an AI assistant for its Messenger app called M. It was supposed to help users with smart suggestions, but the project depended heavily on the help of humans and never gained traction. Facebook killed the project last year.

In November, the company began selling its Portal video chat device, which lets users place video calls using Facebook Messenger. Users can say “Hey Portal” to initiate very simple commands, but the device also comes equipped with Amazon’s Alexa assistant to handle more complex tasks.

by BGF | Apr 19, 2019 | News

TEL AVIV, Israel, 16 April 2019 – Israel’s reams of electronic medical records (health data on its population of around 8.9 million people) are proving fruitful for a growing number of digital health startups training algorithms to detect diseases early and produce more accurate medical diagnoses.

According to a new report by Start-Up Nation Central, the growth in the number of Israeli digital health startups (537 companies, up from 327 in 2014) has drawn in new investors, including Israeli VCs who had never previously invested in healthcare. This has driven financing in the sector to a record $511M in 2018, up 32% year on year. In the first quarter of 2019, the amount raised had already reached $214M.

Of the $511M, over 50% ($285M) went to companies in decision support and diagnostics, which rely heavily on data crunching. Overall, 85% ($433M) of the sector’s total financing went to health companies relying on some form of machine learning, a clear sign of AI's ascendancy. AI medical use cases include, but are not limited to, decision support tools for physicians, medical imaging analysis using computer vision, and big data analytics for population health management.

Also in 2018, new dedicated healthcare VCs were set up, and in early 2019 the largest ever venture capital fund raised in Israel, aMoon’s $660M fund, was earmarked for late stage health investment. Local and global hospital systems have started creating new joint ventures to test local startup technology, and the HMOs themselves are also establishing new innovation partnerships.

The electronic medical records have been gathered gradually over the past 25 years from the country’s four main health maintenance organizations (HMOs), giving startups a greater ability to train and test artificial intelligence solutions and to partner with HMOs to validate their technology from early stages of development.

“With the combination of strong technological expertise and access to data, Israeli decision support companies, most of which utilize AI technologies, have been able to flourish, and have attracted increased levels of funding,” the report states.

Other important developments noted in the report:

An increase in Israeli investor activity in the sector: 124 investors invested in digital health companies in 2018 compared to 100 in 2017, with the growth coming mostly from a 66% increase in the involvement of Israeli investors, from 33 in 2017 to 55 in 2018. The data indicates increased local confidence in the sector, providing startups with support and expertise on the ground.

An increase in the number of later stage rounds: rising from 7 disclosed B and C+ rounds in 2017 to 12 in 2018. The combined capital raised in disclosed B and C rounds amounted to 50% of the total financing for the year compared with 30% in 2017. 17 investment rounds raised more than $10M each (80% of sector funding) in 2018 compared to 12 rounds in 2017 (68% of total funding). This indicates the sector’s maturation and availability of later stage funding, mostly from institutional investors.

Foreign hospitals and universities are increasingly coming to Israel to look for Digital Health technologies and to invest in local companies. For example, in 2018, three major US hospitals engaged with Israeli digital health companies: Intermountain Healthcare’s investment in Zebra Medical, Mt. Sinai Ventures’ contract with digital speech therapy company Novotalk, and Thomas Jefferson University’s pilot validation program in conjunction with the Israeli Innovation Authority for clinical care and hospital operations solutions.

Start-Up Nation Central’s report on Israel’s Digital Health industry offers a comprehensive and up-to-date analysis of the state of the Israeli Digital Health ecosystem and its trends.

by BGF | Apr 19, 2019 | News

For Chinese bureaucrats, getting a promotion isn’t just tied to their performance on the job — it’s increasingly about how well they behave in their leisure time.


Last month, the southeast city of Quanzhou became the latest to start rating civil servants’ personal behavior. Earlier, Wenzhou, a commercial hub in the east, began equally weighting behavior at work and at home for promotions and other rewards. The coastal city Zhoushan also keeps files on the so-called social credit of public servants to assess them.

China is increasing pressure on its public servants, who are constantly expected to prove their loyalty to President Xi Jinping and the party. A new emphasis on personal behavior being rewarded over competence and ability is leaving bureaucrats disillusioned, as Xi curbs dissent and tightens his grip on power.


The government is ramping up efforts to stamp out corruption among public servants and dissuade them from taking advantage of their positions and influence, amid an ambitious government plan to build a nationwide social credit system by 2020 to assign lifelong scores to citizens based on their behavior.

In January, China’s top judge Zhou Qiang vowed to strengthen rules barring government employees who defied court orders from making investments and holding certain jobs.

China’s already monitoring civil servants through a number of avenues, including a mobile app used to test Communist Party members’ loyalty to the party. Millions of citizens have downloaded the program to score points with the government.

‘Dishonest Records’

China’s State Council listed public servants as a crucial test group for building personal credit systems in a 2016 document that was later adopted by 20 provinces. The council separately ordered all court decisions, penalties or disciplinary actions taken against civil servants be recorded in a system of “dishonest records” to be collated on a national platform naming and shaming individuals.

Public servants who end up on the list will face consequences in their performance reviews and when being considered for promotions. Provinces and cities across the country have since formulated local versions of the plan.

Wenzhou's advances in monitoring government workers parallel those of other social credit pilot programs across China. The city’s authorities said in August that public servants who defied or obstructed local court orders would face disciplinary action and have their wages withheld. In February, the courts teamed up with 41 government departments to share government employees’ social credit information.

by BGF | Apr 15, 2019 | News

President Trump and a top U.S. general spoke with Google’s CEO about the U.S. tech company’s AI ventures in China

President Donald Trump and his top U.S. military adviser met with Google’s CEO about concerns that Silicon Valley’s AI collaborations in China may benefit the Chinese military. Such worries reflect awareness of how certain technologies developed for civilian purposes can also provide military advantages in the strategic competition playing out between the United States and China.

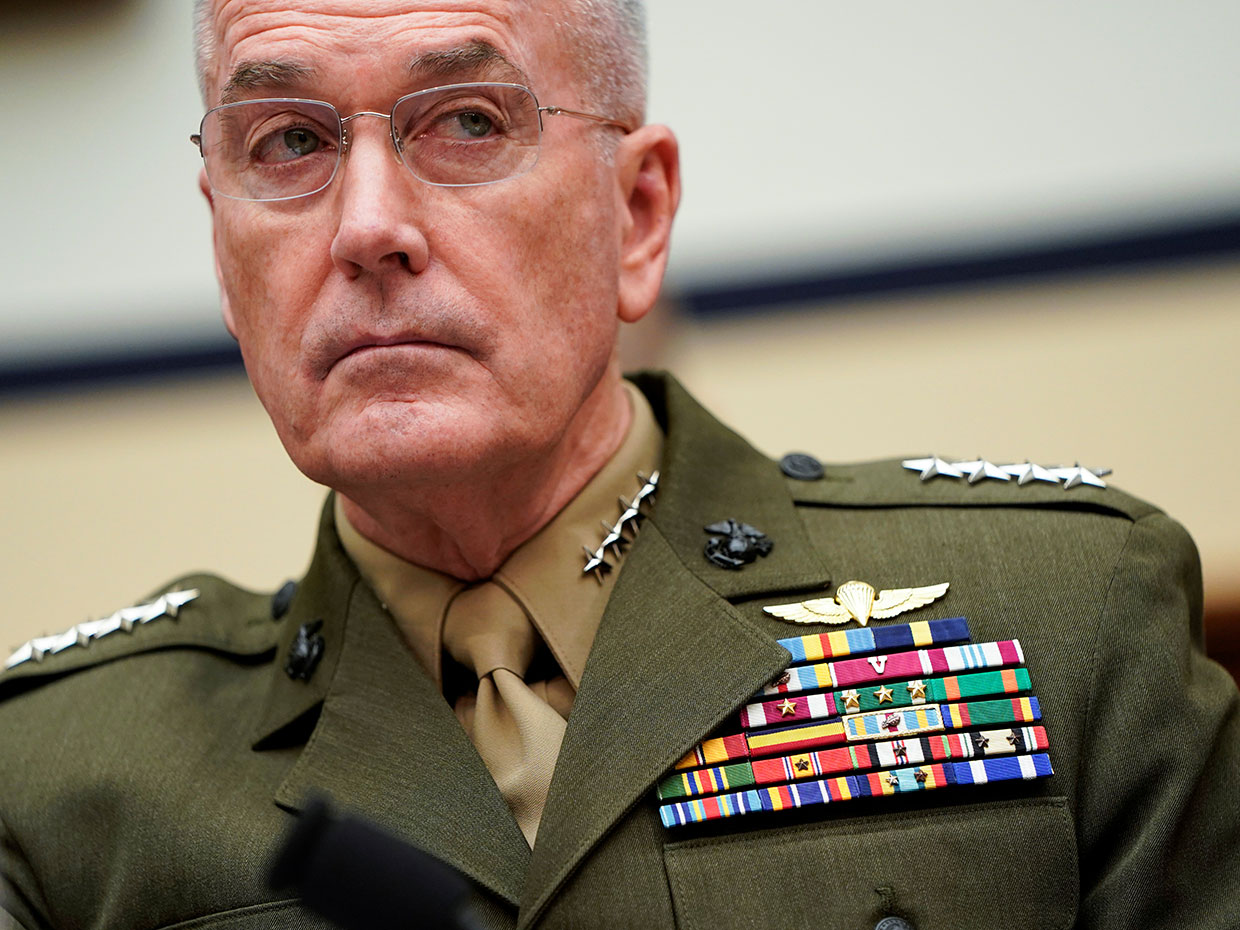

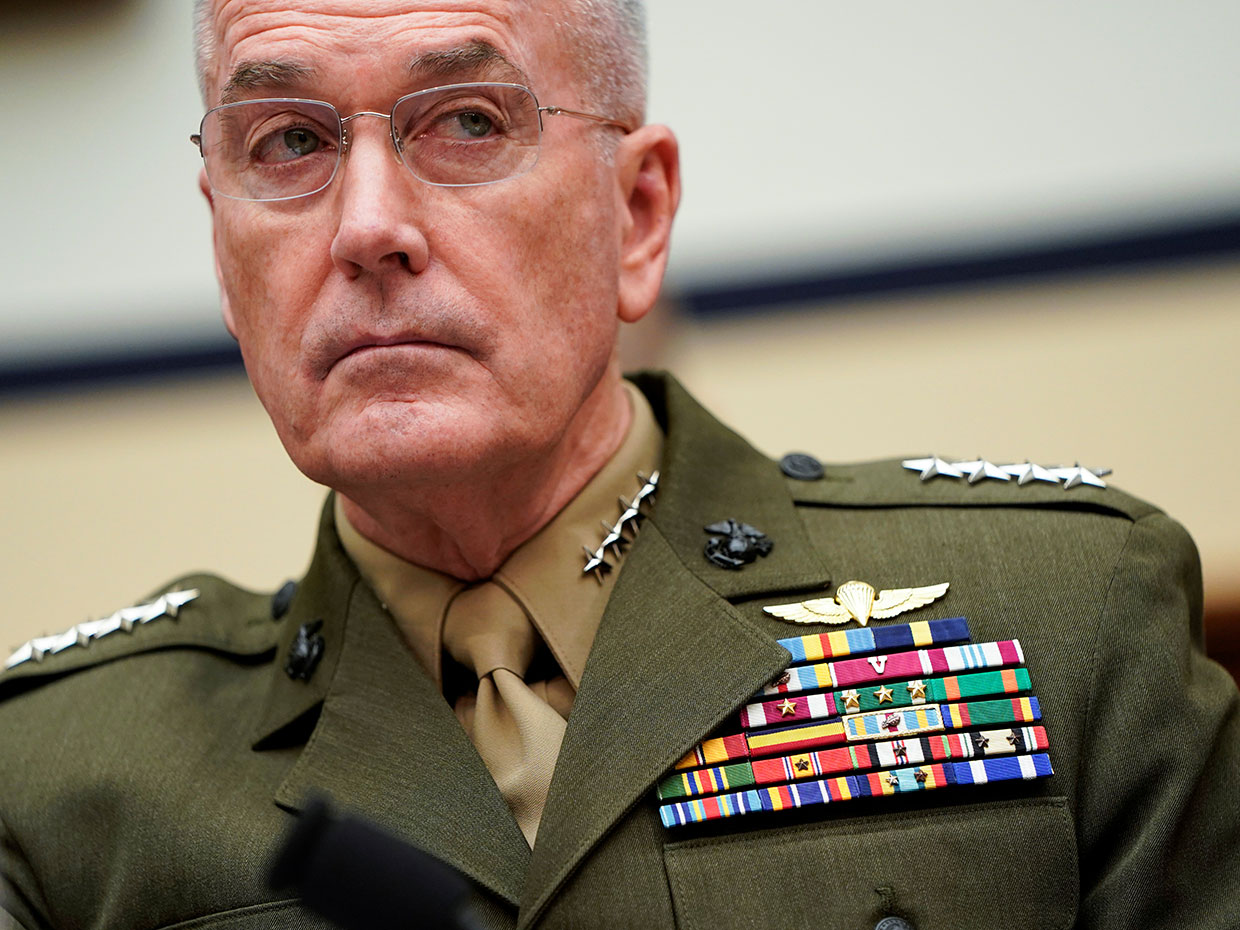

Chairman of the Joint Chiefs of Staff General Joseph Dunford waits to testify to the House Armed Services Committee on Capitol Hill in Washington, D.C., 26 March 2019. Photo: Joshua Roberts/Reuters

The meeting comes after General Joseph Dunford, chairman of the Joint Chiefs of Staff, leveled pointed criticism at Google for pursuing technological collaborations with Chinese partners, during his testimony before the Senate Armed Services Committee on 14 March. The spotlight’s glare on Google grew harsher when President Trump followed up on Twitter: “Google is helping China and their military, but not the U.S. Terrible!” But beyond the focus on Google, the Pentagon seems more broadly concerned about U.S. tech companies inadvertently giving China a leg up in developing AI applications with military and national security implications.

“We watch with great concern when industry partners work in China knowing there is that indirect benefit, and frankly ‘indirect’ may be not a full characterization of the way it really is,” Dunford said. “It’s more of a direct benefit to the Chinese military.”

The chair of the Joint Chiefs of Staff elaborated on his comments about Google and China during another event held at the Atlantic Council, a think tank focused on international affairs, in Washington, D.C., on 21 March. U.S. tech companies working with China on AI initiatives “will help an authoritarian government assert control over its own people…and it will enable the Chinese military to take advantage of the technology that is developed in the United States,” Dunford said.

Dunford eventually met with Sundar Pichai, chief executive officer of Google, in Washington, D.C., on 27 March, according to Bloomberg. In an unexpected twist, President Trump also talked with Pichai and later tweeted about his satisfaction with the meeting: “He stated strongly that he is totally committed to the U.S. Military, not the Chinese Military.”

Even before the meeting, Google had already emphasized its willingness to work with the Pentagon on certain projects and denied working with the Chinese military. A Google spokesperson pointed to a statement first given to Wall Street Journal reporter Vivian Salama: “We are not working with the Chinese military. We are working with the U.S. government, including the Department of Defense, in many areas including cybersecurity, recruiting, and health care.”

There does not seem to be definitive evidence that Google’s activities in China have directly benefited Chinese military tech development so far, at least based on open sources, says Elsa Kania, an adjunct fellow with the Center for a New American Security (CNAS) in Washington, D.C. Her research focuses on Chinese military innovation in emerging technologies as part of the center’s Artificial Intelligence and Global Security Initiative.

Inspired in part by U.S. successes in defense innovation, China has established a national strategy of “military-civil fusion” (or “civil-military integration”) that aims to mobilize and leverage its burgeoning tech sector for military innovation, Kania explains. The United States and China are among many countries that have generally emphasized development of AI, quantum computing, and other futuristic technologies as national priorities.

But even if Google has not directly worked with the Chinese military or intentionally sought to aid Chinese military innovation, it’s “noteworthy that some of Google’s current and potential partners in China, including Fudan University and Tsinghua University, are involved in and committed to this national strategy of military-civil fusion,” Kania says.

The Chinese government’s direct control through state-owned companies, and its influence over private Chinese companies, provides a “direct pipeline” to readily harness private-sector technologies originally developed for civilian applications and repurpose them for the military, said Patrick Shanahan, acting U.S. Secretary of Defense, in his testimony alongside Dunford during the Senate hearing.

“The U.S. too has been actively seeking to leverage commercial technologies to support defense innovation, but, as General Dunford’s evident frustration with Google highlights, not all American companies are eager to contribute to this agenda,” Kania says.

Intensive scrutiny of Google may seem unfair when it’s hardly alone in pursuing business ventures that could be seen as benefiting China’s broader AI ambitions. Other U.S. tech giants such as Amazon, Apple, IBM, and Microsoft all operate research centers in China that include projects focused on developing AI applications or cultivating local Chinese engineering talent. And at the end of the day, the Pentagon’s broader concerns about Silicon Valley’s business interests in China may not be unwarranted.

The Pentagon’s big-picture worries clearly go beyond Google. But Google may have attracted more ire and attention because it has proven warier than some other tech giants in lending its expertise to U.S. military projects.

It wasn’t always this way. Google’s top brass initially seemed enthusiastic about helping the U.S. military deploy AI algorithms that help automate drone video surveillance in Project Maven, according to internal emails shared with Gizmodo. That changed when a public relations backlash and internal opposition from Google employees, some of whom signed an open letter or even resigned in protest, prompted Google’s leadership to wind down its participation in Project Maven and not renew the contract when it expires this year.

That fallout from Google’s participation in Project Maven led the leadership to lay out the company’s ethical principles on what it sees as acceptable uses of AI and related technologies. The company cited such values as the reason it would not compete for the Pentagon’s lucrative Joint Enterprise Defense Infrastructure (JEDI) contract—worth up to US $10 billion—focused on building cloud computing infrastructure for the U.S. Department of Defense.

“Certainly, there is a real risk that Google’s engagement in China could benefit the Chinese military and government, including through facilitating tech transfer and contributing to talent development.” —Elsa Kania, Center for a New American Security

Google’s newfound reticence regarding certain U.S. military projects prompted Acting Defense Secretary Shanahan to describe Google’s stance as “a lack of willingness to support DOD programs” during the recent Senate hearing. But Google’s stance does not seem to have ruled out doing business with the Pentagon. Furthermore, former Google Chairman and CEO Eric Schmidt currently heads the Pentagon’s Defense Innovation Board, which advises the U.S. military, even as he serves as a technical adviser to Google’s parent company, Alphabet, and sits on Alphabet’s board of directors.

The fact is that Google is merely the latest lightning rod to attract controversy in the midst of several bigger debates roiling the U.S. national security and tech communities. From the standpoint of geopolitical competition, the Pentagon is alarmed by the possibility of U.S. tech innovation directly or indirectly aiding an authoritarian country that is increasingly portrayed in hostile terms as the main challenger to U.S. military dominance.

“This is about us looking at the second and third order effects of our business ventures in China, the Chinese form of government, and the impact it will have on the United States’ ability to maintain a competitive military advantage and all that goes with it,” Dunford said during the Atlantic Council event.

Both Silicon Valley and the Pentagon are also caught up in a different debate about the ethical use of AI and related technologies in both civilian life and on the battlefield. Many pioneering and prominent AI researchers have actively come out against the weaponized deployment of AI algorithms in so-called “killer robot” military technologies or surveillance applications that could infringe on privacy at home. Some engineers have become more public about turning down tech recruiters who represent companies such as Google and Amazon, citing ethical concerns about how such companies offer up their tech products and services to the U.S. military and law enforcement.

Differences of opinion on ethics can exacerbate the Pentagon’s existing challenges in attracting the best and brightest engineers to develop AI for military applications. The defense industry has already fallen behind the commercial sector in recruiting computer science and engineering talent because of salary and budget gaps, wrote Missy Cummings, director of the Humans and Autonomy Laboratory at Duke University, in a 2017 Chatham House report titled “Artificial Intelligence and the Future of Warfare.” On top of that, an increasingly vocal segment of the engineering workforce seems publicly disinclined to help the U.S. military pursue certain uses of AI technologies.

The U.S. military’s ongoing struggle to win hearts and minds in Silicon Valley may be feeding back into its anxiety about seeing U.S. tech giants reach out to China. From the Pentagon’s standpoint, it may seem like Silicon Valley is pouring talent and resources into helping a rival authoritarian power while turning up its nose at a U.S. military that sees itself as the defender of democracy. But the Pentagon’s focus on military competitive advantage lends itself to a certain institutional secrecy—such as declaring all 5,000 pages of Google’s work on Project Maven exempt from public disclosure—that is unlikely to convince skeptics who want more transparency in how the U.S. military uses AI and related technologies.

For now, the Pentagon can take heart from several factors. The “China model” may make it easier for that country’s government to harness civilian AI technologies for military purposes, but it also imposes a heavy-handed agenda and ideology on Chinese tech companies in a way that could “prove self-defeating, as a drive for innovation comes into conflict with a propensity to reassert Party control,” Kania says. She also sees the “openness of the American innovation ecosystem,” which sometimes includes global research partnerships with Chinese counterparts, as a huge competitive advantage for the United States. Still, she advises caution in such U.S.-Chinese partnerships.

“When Google or any American company or university chooses to engage with a Chinese counterpart, I hope that this increased blurring of boundaries between academic and military-oriented research in China, particularly in artificial intelligence, will be a consideration,” Kania says.

by BGF | Apr 15, 2019 | News

China’s aggressive artificial intelligence plan still does not match up to US progress in the field in many areas, despite the hype.

Chances are you’ve seen the stories, with headlines like “AI-driven technologies reshape city life in Beijing” or “Robots serving up savory food at Chinese artificial intelligence eateries” splashed across the page alongside a photo of a robot ominously beckoning you to believe one message: China is winning the artificial intelligence (AI) race in its quest to become the global superpower.

You would be wrong.

Since 2017, China has made an aggressive push to position itself as a global AI superpower, with a government plan to invest billions of dollars in the field. But dig deeper, and it’s not difficult to see that the US remains at the forefront of the AI race, with more investment sources, a larger workforce, higher-quality research papers, and more advanced chipsets.

“There are countless industries where they said ‘We want to become world leaders,’ and it did not work—they basically burned billions,” said Georg Stieler, managing director of Stieler Enterprise Management Consulting China, referring to China. “You need an institutional framework and cultural foundations so that many independent actors can coordinate their work. China’s still not there yet.”

TIANJIN, CHINA – 2018/05/18: People interact with robots at the 2nd World Intelligence Congress, held at the Tianjin Meijiang Exhibition Center from May 16-18, 2018. (Photo by Zhang Peng/LightRocket via Getty Images)

Here is the inside story of how China fooled the world into believing it is winning the AI race, when really it is only just getting started.

On your mark, get set, AlphaGo

Two moments in recent history catalyzed China’s grand AI plans.

The first came in March 2016, when AlphaGo, a system built by Google’s DeepMind that uses deep neural networks, trained on large datasets of Go games and refined through reinforcement learning, to evaluate positions and choose moves, beat world champion Lee Sedol at the ancient board game Go.

“That was a watershed moment, because it was broadcast all throughout China,” said Jeffrey Ding, the China lead for the Center for the Governance of AI at the University of Oxford’s Future of Humanity Institute. “If you look at Baidu Trends, which is similar to Google Trends in that you can track the history of a term, the search history for ‘artificial intelligence’ spikes up after that match.”

The win highlighted how rapidly AI was advancing, said Elsa B. Kania, an adjunct fellow with the Center for a New American Security’s Technology and National Security Program, whose work on Chinese defense innovation in emerging technologies supports the center’s AI and Global Security Initiative. And since the game of Go is roughly analogous to warfare in terms of strategy and tactics, “the success of AI in Go could imply that you could develop an AI system to seek decisions regarding warfare,” Kania said.

Threats from the US

The second moment that kicked off China’s grand AI plans came later that year, when former US President Barack Obama’s administration released three reports: Preparing for the Future of Artificial Intelligence, the National Artificial Intelligence Research and Development Strategic Plan, and Artificial Intelligence, Automation, and the Economy.

“There was a similar spike in the Baidu Trends data after that—some of the Chinese policy makers thought that the US was much further ahead in terms of AI planning and recognizing the strategic value of this technology than them,” Ding said.

The reports received more attention in China than in the US, Kania said. “Those plans were taken as an indication that the US was about to launch its own major national strategy in AI, which has not quite materialized since, but a lot of those ideas and policies have shown up to varying degrees in Chinese plans and initiatives that have come out since,” Kania said.

In July 2017, the Chinese government under President Xi Jinping released a development plan for the nation to become the world leader in AI by 2030, including investing billions of dollars in AI startups and research parks.

Meanwhile, in the US, President Donald Trump signed a long-awaited executive order launching the American AI Initiative in February 2019. The order calls on the heads of federal agencies that perform or fund AI R&D to prioritize this research when developing budget proposals for FY 2020 and beyond. However, it does not provide new funding to support these measures, or many details on how the plans will be implemented.

Cutting through the hype

Despite the ambitious plan and the hyped headlines, China is not as far along in its AI ventures as its state media would lead you to believe, Stieler said.

“There are a lot of half-truths and clear exaggerations that I see every day,” Stieler said. “Things that don’t work in the West also don’t work in China yet.”

These are the key elements of AI development where China lags behind the US, despite rampant media coverage.

Chips

Chinese companies are quick to apply new technologies and test their commercial viability, Stieler said, but the different building blocks involved are not all domestic.

China’s biggest roadblock to AI dominance is its chip market, as high initial costs and long development cycles have made processor and chip development difficult, Ding said. China is still largely dependent on America for the chips that power AI and machine learning algorithms.

“China has been heavily reliant upon the import of the hardware required for AI, and is deeply dependent on semiconductors and struggles to develop specialized chips of its own,” Kania said. “So far, China has poured a lot of money into that industry without a lot of results.”

However, China has reasons to become more self-sufficient in this area, particularly given political tensions between the nation and the US, Kania said. In February 2019, Chinese chip maker Horizon Robotics announced that it was now valued at $3 billion and that it expected to make progress on its third-generation processor architecture in the coming year.

Research

Some of the fear of China’s growing AI dominance has stemmed from research stating that the number of AI research papers from China has outpaced those from the US and other nations in recent years. A December 2018 study from information analytics firm Elsevier found that between 1998 and 2017, the US published 106,600 AI research papers, while China published 134,990.

However, “when you measure the quality of the papers by self-citations, and when you apply an index that takes into consideration the reputation of the journals where the articles have been published, suddenly the number of Chinese papers drops, and falls below the numbers of the US,” Stieler said. “The quality of the papers is still higher in the US.”

The US also has a structural advantage for research due to the number of top universities, Ding said. “Stanford, Carnegie Mellon, and MIT attract some of the best and brightest Chinese researchers, who then end up working in the US,” he added.

Workforce

While five of the top 10 global machine learning talent-producing universities are in China, their graduates are not staying there, according to a 2018 Diffbot report. Four of these schools—Tsinghua University, Peking University, Shanghai Jiao Tong University, and the University of Science and Technology of China—produced a total of 12,521 graduates in recent years; however, only 31% of these graduates stayed in China, while 62% left for the US, the report found.

“If there is an arms race in AI right now, the battlefield is talent,” Kania said. The war for talent is occurring both among major tech companies and between a number of Chinese government initiatives trying to recruit students and researchers, she added. “The US has a major advantage here, because the majority of the world’s top universities and critical mass of talent remain in the US,” Kania said.

Global distribution of machine learning talent is heavily centered in the US, according to the Diffbot report. While there are about 720,000 people skilled in machine learning across the globe, nearly 221,600 of them—representing 31% of the total talent pool—live in the US. That means America is home to more top AI talent than the rest of the top 10 nations combined, including India, the UK, and Canada.

While China is rapidly scaling up AI education initiatives to build a more robust workforce of engineers and researchers, it’s still too early to know if it will be successful, Kania said.

“Certainly, China has the potential to become a major leader in AI, both technologically and in terms of building up the pool of top AI talent,” Kania said. “That’s motivated Google and others to start to explore setting up offices in China, and ways to access that market and that talent.”

Funding

AI startups in China raised nearly $5 billion in venture capital (VC) funding in 2017, compared to $4.4 billion in the US, according to an ABI Research report.

“Even though China has outpaced the US in terms of funding, the US still sees higher numbers of investment deals,” said ABI Research analyst and report author Lian Jye Su. While the US raised its money from 155 investments, China’s came from only 19, indicating that investment in China is more concentrated on certain sectors, Jye Su said.

People can view the AI race from two perspectives, Jye Su said: technology and implementation. In terms of technology, the US still leads as home to major companies like Google, Amazon, Facebook, and Microsoft, whose AI development frameworks and tools are widely used in the industry.

However, China has the edge over the US when it comes to implementation, Jye Su said. “The Chinese government has made it a priority to accelerate the development, adoption, and deployment of AI technologies in key areas, such as smart cities, industrial manufacturing, and healthcare,” he said. “Investors value the commercial viability and market potential of Chinese startups.”

Data and regulatory advantages

China’s major advantage in AI research and implementation is the sheer quantity of data created by its population of 1.4 billion, combined with far laxer regulation of that data than in the US.

“China has approximately 20% of the world’s data, and could have 30% by 2030,” Kania said. “Because data is the fuel for the development of AI, particularly for machine learning, that could provide China a critical advantage.”

While certain elements of AI, like facial recognition, require massive quantities of data, others depend more on advanced algorithms, an area where the US has an advantage over China, Kania said.

US tech companies also have access to a greater variety and diversity of data than Chinese companies, due to their more global presence, Kania added. “As the broader globalization of Chinese tech companies occurs, it may give them more access to different sources of data along the way, too,” she said. “Data is an advantage for China, but one that also has limitations.”

It’s difficult to tell whether in the future AI will still require such massive amounts of data, or if the development of new algorithms and techniques will be more important, Kania said.

Major Chinese tech companies like Tencent, whose WeChat app collects user data on payments, interests, and messages, have built an ecosystem around a flow of data that could take advantage of the AI boom, Ding said.

And China has made a major push to apply facial recognition to policing and surveillance, with an estimated 200 million surveillance cameras set up nationwide that use the technology to identify and arrest criminal suspects. By 2020, the nation plans to give all of its citizens a personal score based on their behaviors captured using facial recognition, smartglasses, and other technologies.

“There’s less of a willingness to do that in the US,” Ding said. “At the same time, some of these Chinese facial recognition companies just have better tech, and have been at the leading edge of some major competitions in computer vision—so it’s not just surveillance that is the application realm of facial recognition technology, it’s also being used in securities, finance, and payments. This is a multifaceted story.”

Many have raised serious concerns that China’s development and deployment of AI could lead to human rights abuses and threaten the future of democracy, Kania said. “Surveillance technology is becoming very pervasive in China, but also diffusing to other countries that might see these options as quite attractive,” she added.

While US tech companies are wary about working on military and surveillance applications, Chinese companies and universities are often eager to support the Chinese government and military on such applications, Kania said.

However, though Chinese companies have succeeded in applying facial recognition in these realms, it doesn’t mean they can apply related AI technologies to autonomous driving or smart manufacturing, where the needs are more specific, Stieler said.

“There are not so many AI use cases I’m seeing here [in China] beside facial recognition and voice recognition,” Stieler said. “They have by far the largest data pool, but without logistics, they will drown in it.”

An interdependent system

Ultimately, AI is an umbrella term: the US and China are each ahead in certain elements of the technology, but both are still extremely limited in implementing it, Kania said. The two nations remain highly interdependent in developing this technology, so progress by one is not necessarily a loss for the other.

“Understanding US-China competition and collaboration in AI requires understanding that it’s not necessarily a zero-sum game,” Ding said. “There’s a lot of mutual interdependencies and cross-border investment. It’s an interwoven system where we should be trying to emphasize the mutual interdependencies and check our worst impulses to compete in a zero-sum way.”

While there are many reasons to celebrate the synergies among US and Chinese AI development, and much room for cooperation, it remains to be seen whether trade tensions and geopolitical competition will begin to jeopardize that—particularly in terms of military technology developments, Kania said.

US tech companies should keep an eye on China’s AI work, but avoid taking claims that seem outrageous too seriously, Stieler said.

“Take it with a grain of salt, but serve it carefully, because the aspirations are there,” Stieler said. “Somebody who has a bold idea and knows the right people will have enough capital to try it out.”